Alerting Architecture & Third-Party Integration

Introduction to Stateful Alerting in TrackMe

Hint

TrackMe Stateful alerting is a major enhancement in TrackMe alerting concepts and management, with state-aware alerts, advanced Email thread alert notifications, and active command execution.

With Stateful alerting, TrackMe automatically maintains incident cycles in a persistent and state-aware manner, and combines this with rich notifications and active command execution.

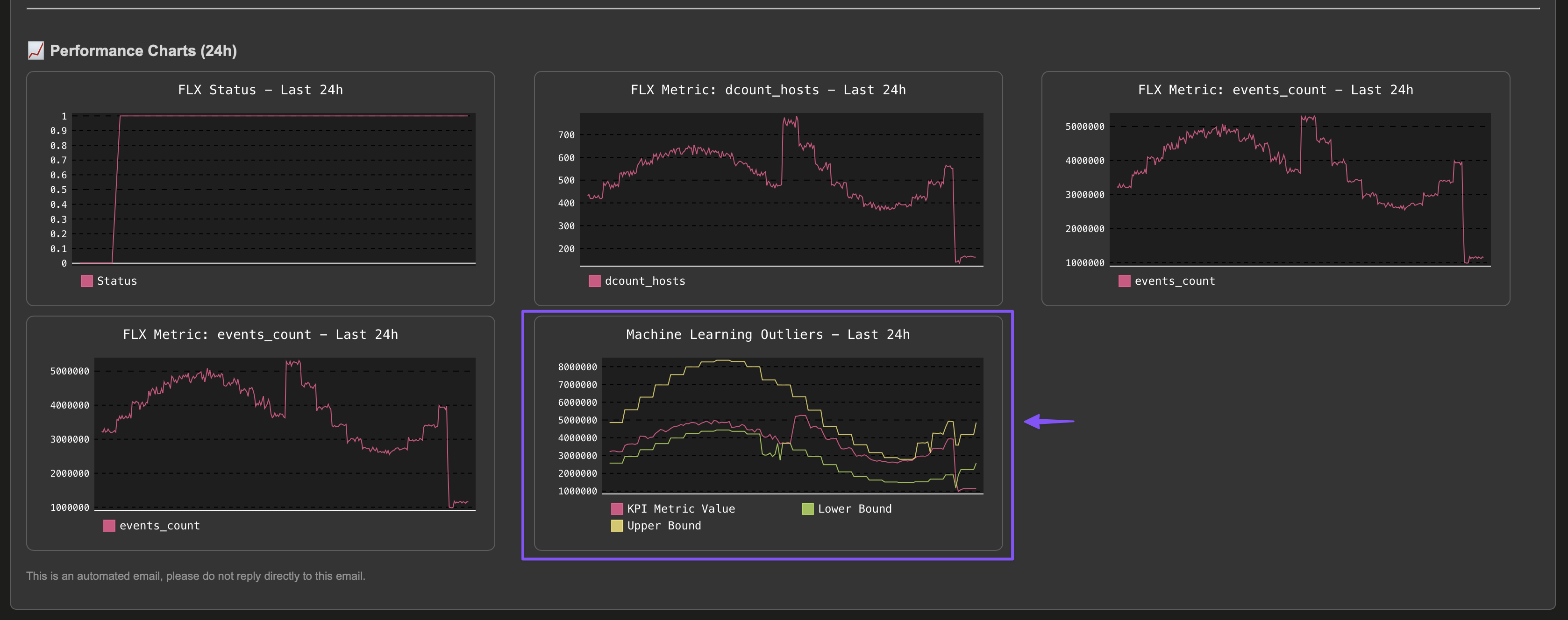

Notably, TrackMe can deliver rich email notifications, in HTML format and with automated and embedded metrics charts.

It also generates stateful events, which can be used to trigger advanced third-party notifications covering the whole incident life cycle: incident creation, incident updates, and incident closure when the entity returns to a normal state.

These features allow TrackMe to provide a complete, powerful, and flexible state-aware alerting architecture.

Email charts are not available in Splunk 9.1/9.2 environments, because chart generation requires Python 3.8.x or later.

Since TrackMe 2.1.16, TrackMe can also execute active commands (generating or streaming commands) depending on whether the incident is opened, updated, or closed, allowing flexible and powerful state-aware actions such as incident creation, updating, and closing.

Since TrackMe 2.1.18, Stateful Alerts can be configured to automatically acknowledge entities when the incident is opened.

TrackMe 2.1.19 addressed all compatibility issues with email clients, notably for embedded charts; this is the case, for instance, for Outlook and Web Gmail.

TrackMe 2.1.19 also simplified the configuration and added granular priority-level selection for email and command notifications at the level of the handler. You can now simply create one stateful alert which handles incidents for all entities, and select which entities lead to the generation of email and command notifications.

Since TrackMe 2.1.20 the charts time range window is configurable at the alert level, with a default of 24 hours.

Sophisticated Alerting Made Easy - TrackMe Alerting in a Nutshell

In short:

Once enabled, when a given entity turns into an alerting state, a new incident is created.

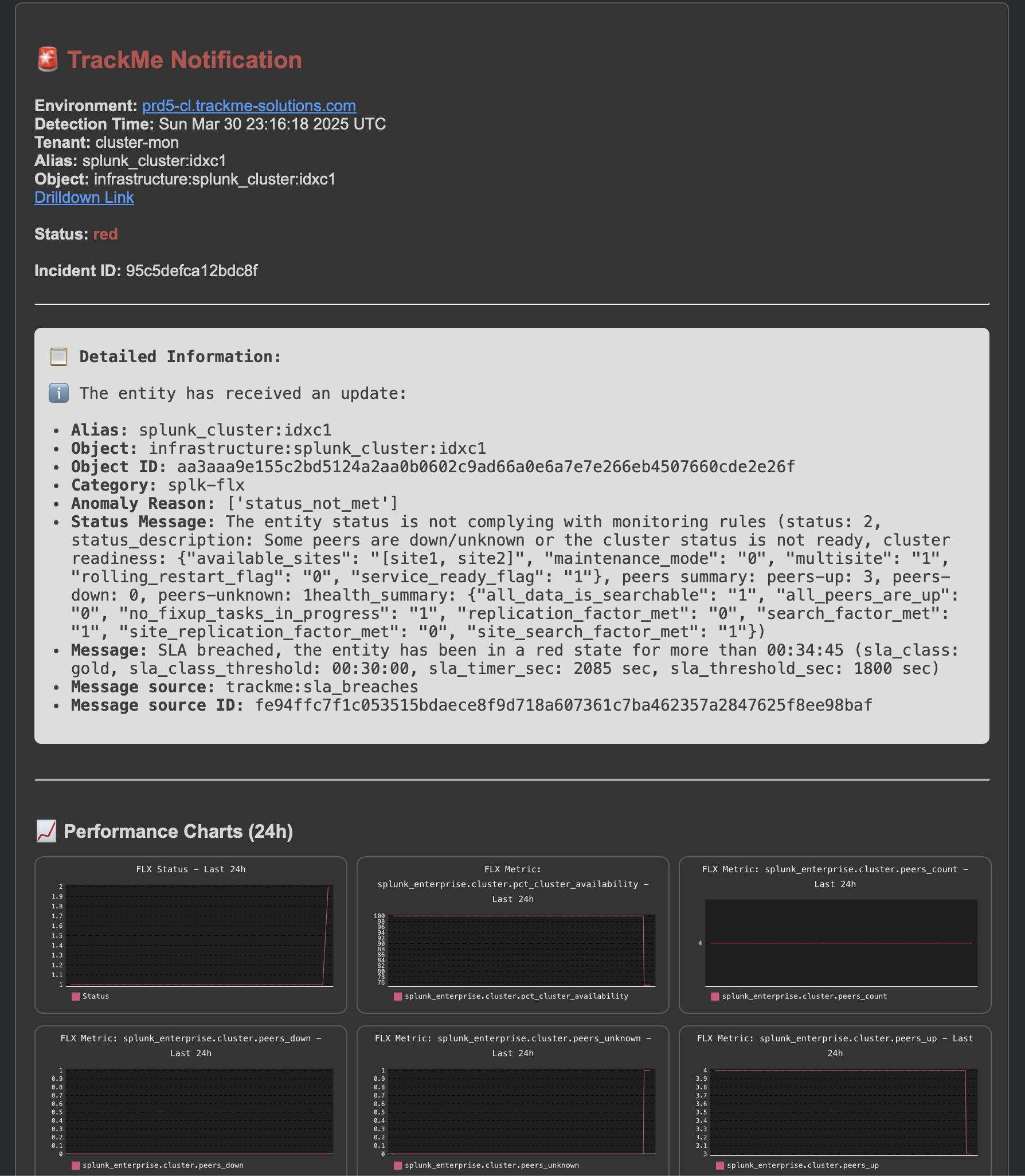

When new events are detected, such as Notable events or SLA breaches, the incident is updated.

When the entity eventually returns to a non-alerting state, the incident is closed.

Rich HTML email notifications can be delivered by TrackMe, including automated and embedded metrics charts.

Events are also generated, in the sourcetype=trackme:stateful_alerts.

Therefore, TrackMe can generate Opening / Updating / Closing events, and/or rich email notifications.

Active commands (generating or streaming commands) can also be executed, depending on the incident status.

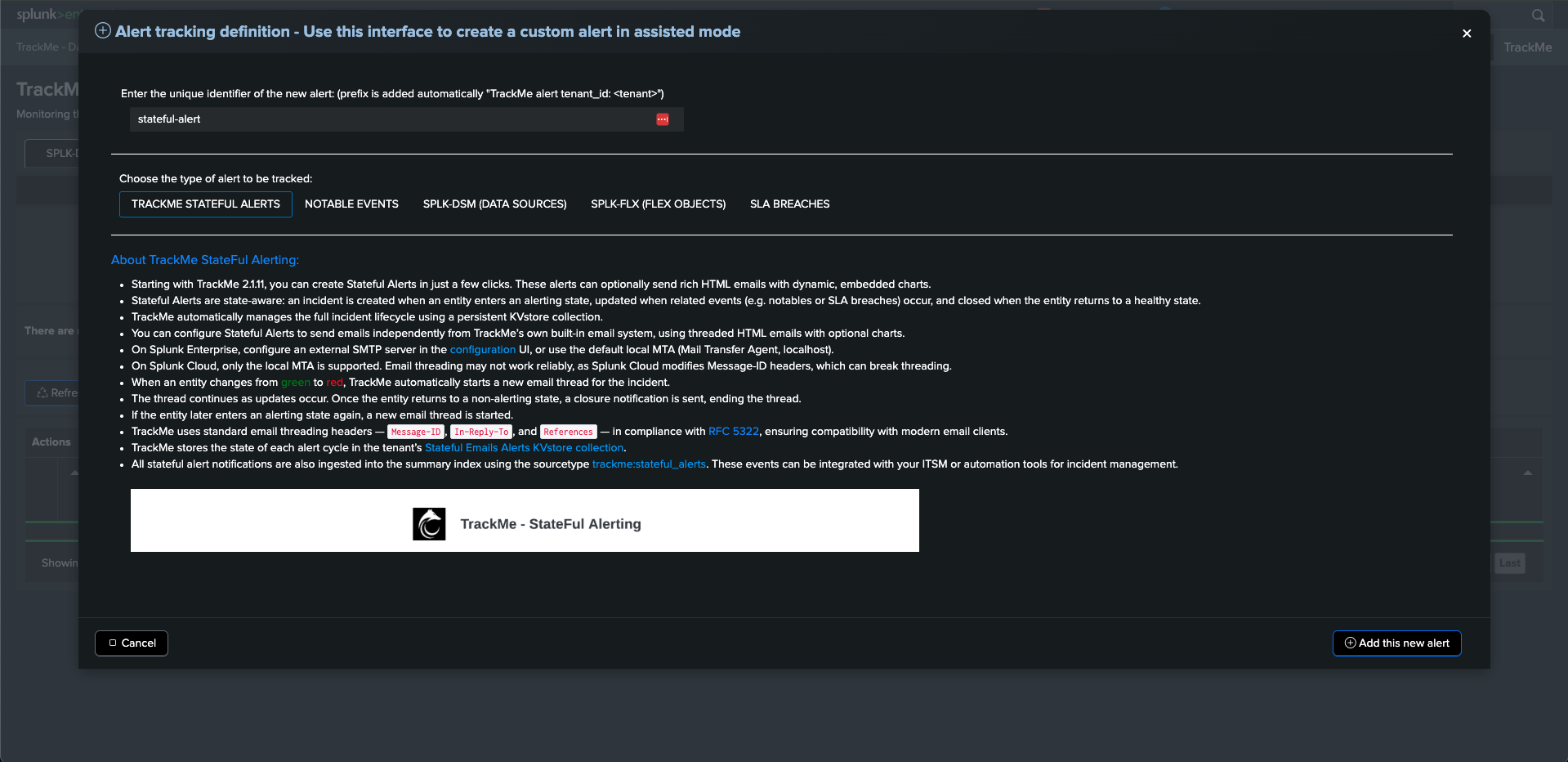

Creating a Stateful Alert in a Few Easy Steps

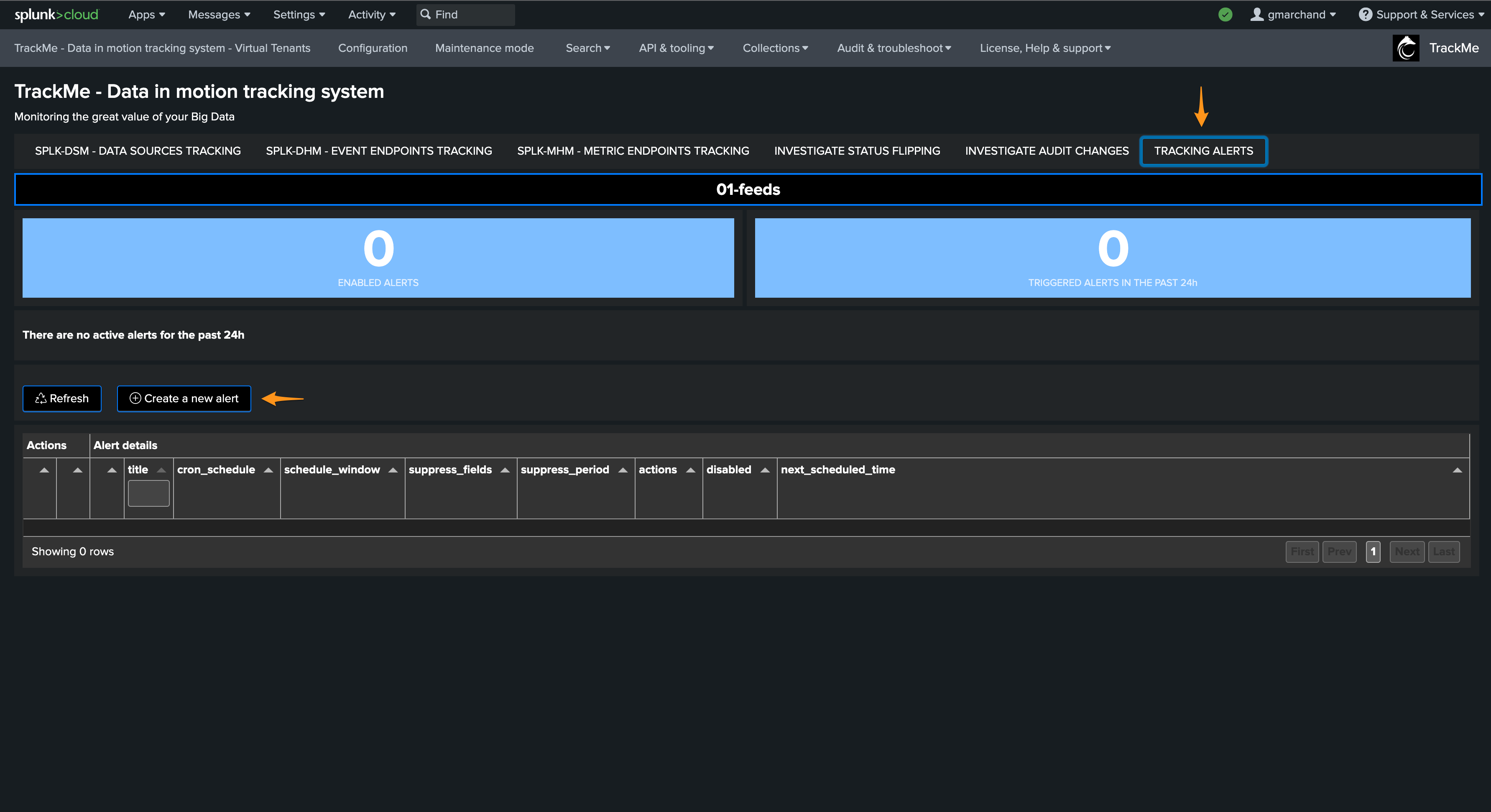

In the TrackMe Virtual Tenant, go to the “TRACKING ALERTS” tab, and use the wizard to create a new alert:

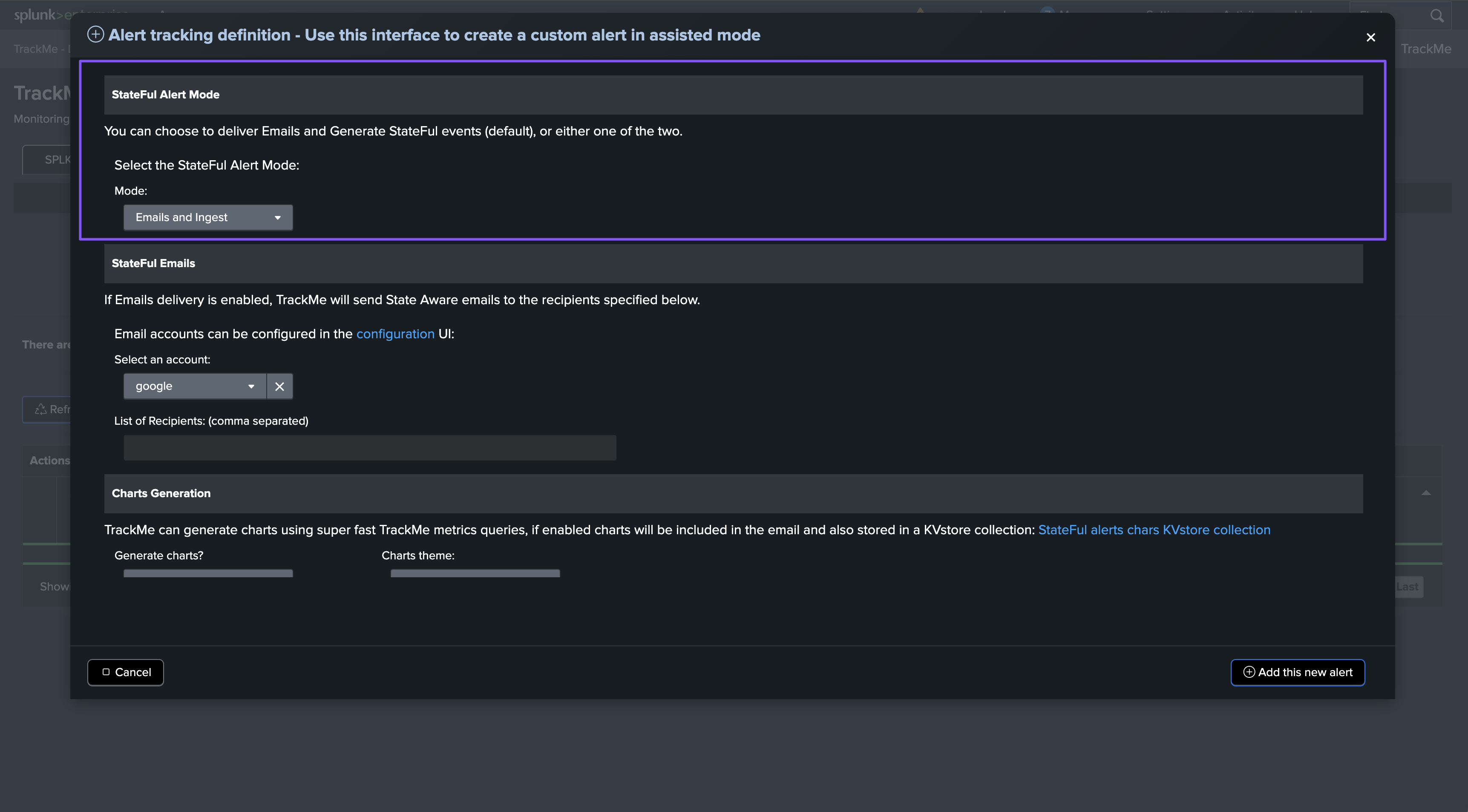

Step 1: Select the Stateful Alert Mode

The first step is to select the alert mode. Available options are:

Emails and Ingest: generate both email deliveries and ingest events

Emails, Commands and Ingest: generate email deliveries, active commands and ingest events

Commands and Ingest: generate active commands and ingest events

Commands and Emails: generate active commands and email deliveries

Emails only: generate email deliveries only

Ingest only: generate ingest events only

Commands only: generate active commands only

Ingest actions

We recommend always keeping the ingest actions enabled, unless you have a specific use case with multiple alerts.

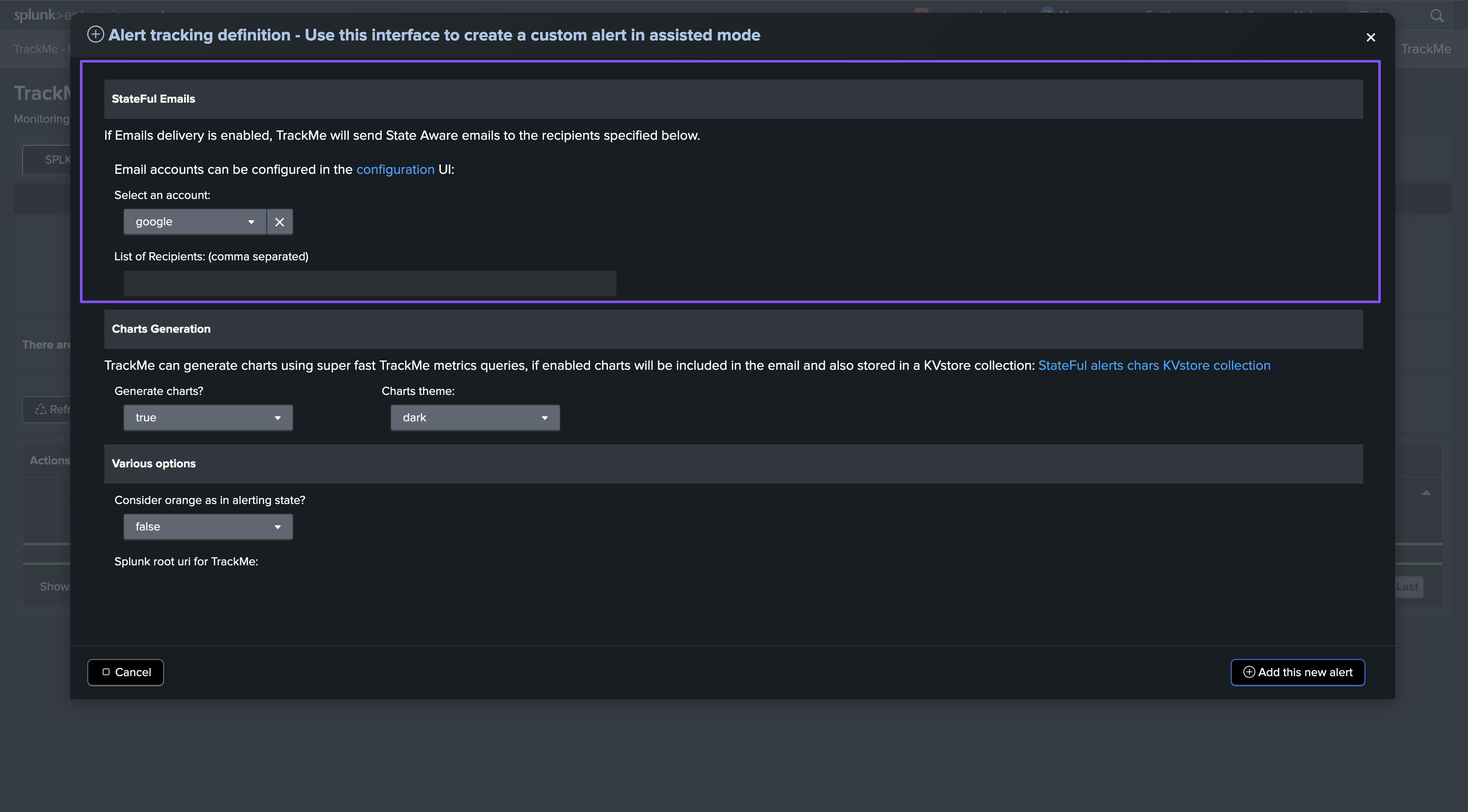

Step 2: Configure email delivery (optional)

If you selected a mode that includes email delivery, such as Emails and Ingest, you can configure the email delivery options:

For Splunk Cloud environments:

You cannot configure an external SMTP destination in Splunk Cloud; the local MTA is mandatory.

Therefore, for Splunk Cloud, you do not need any further configuration; TrackMe uses the local MTA as a default.

For Splunk Enterprise environments:

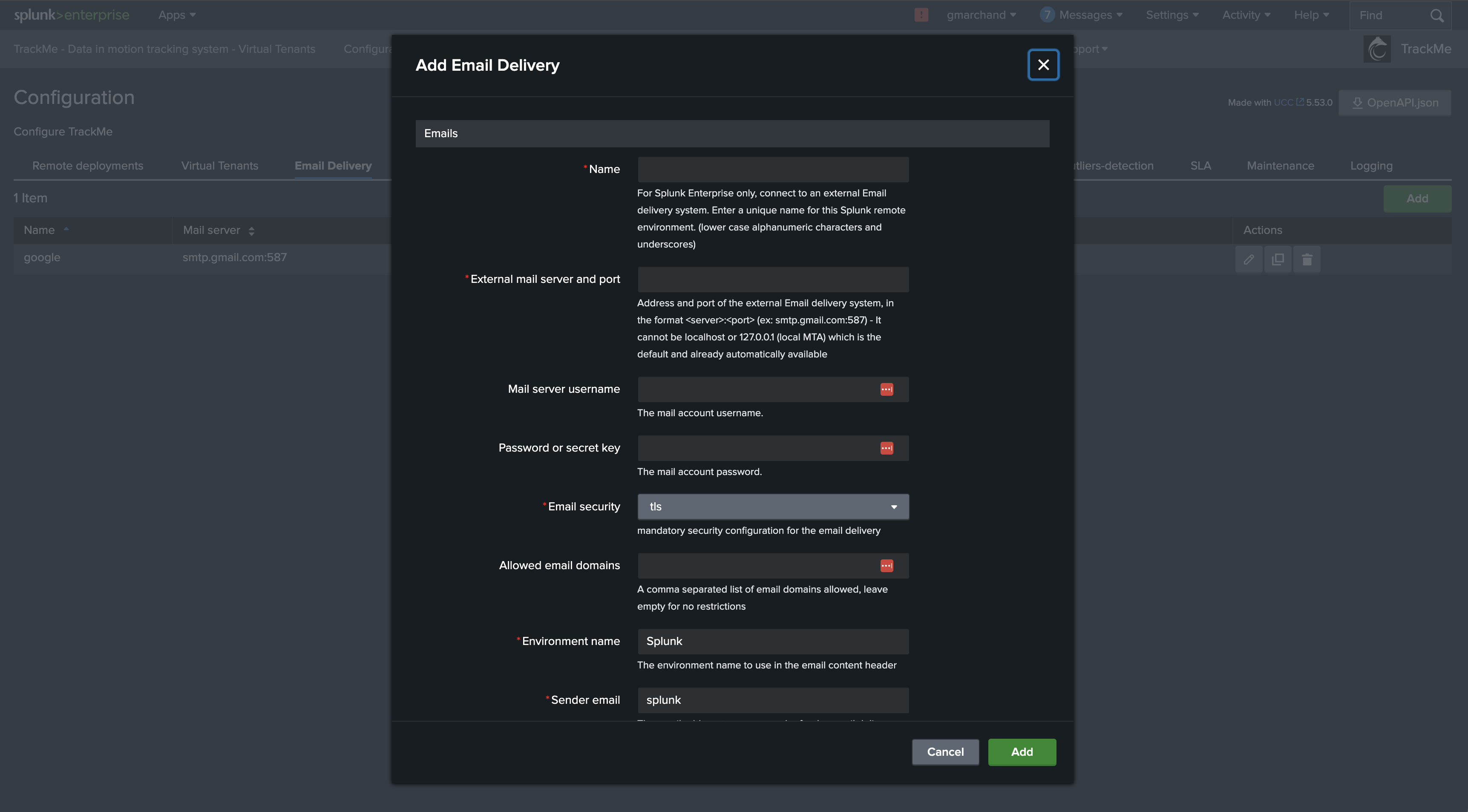

You can use the local MTA, or configure an external SMTP destination which TrackMe will then use to deliver emails.

In the Configuration UI, go to the Emails tab and configure the SMTP destination. Note that using SSL or TLS is mandatory:

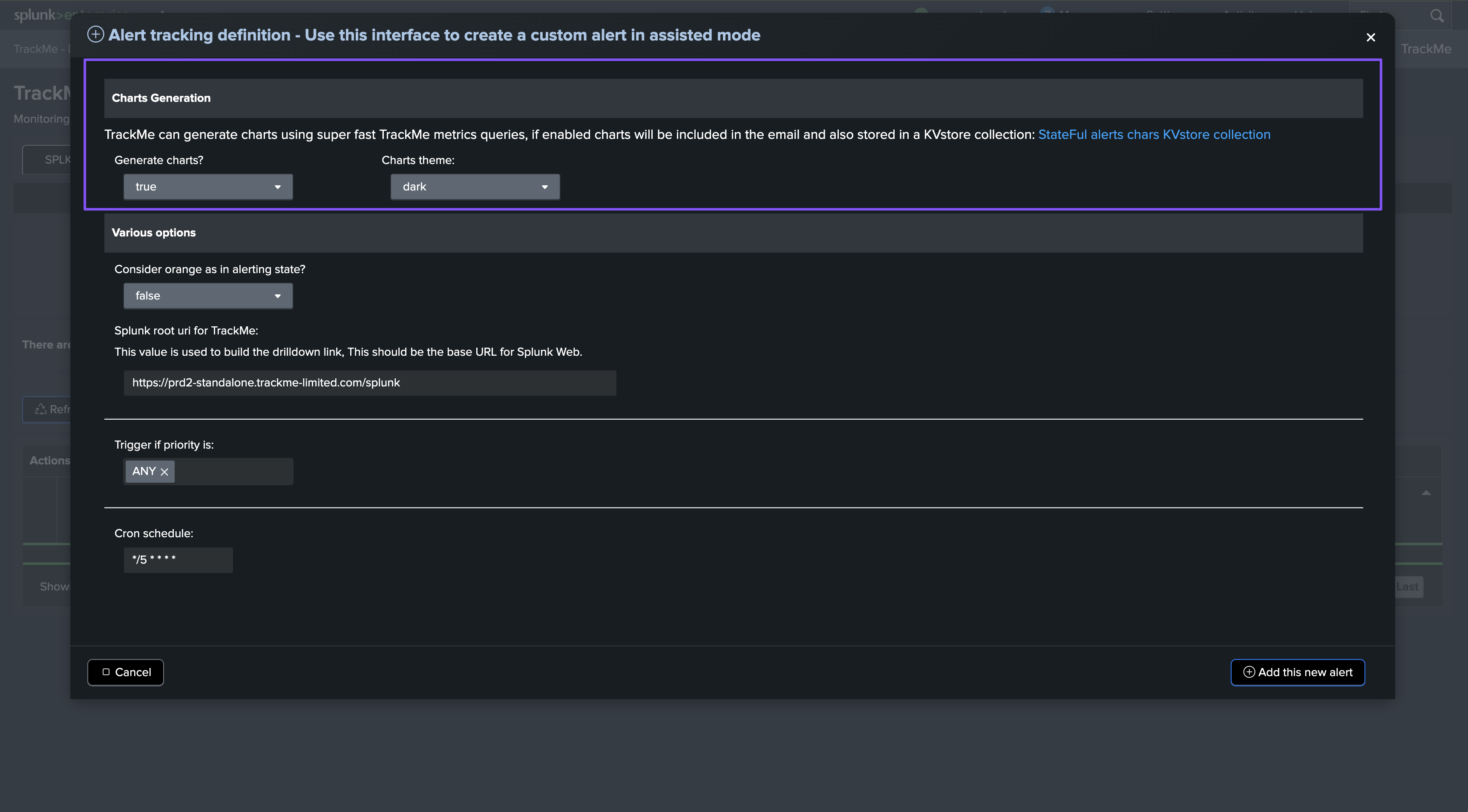

Step 3: Charts generation

About email client compatibility

Email charts use the SVG image format. Some email clients with limited SVG support, such as Web Gmail, may not display the charts; TrackMe 2.1.19 addressed these compatibility issues, as noted in the introduction.

By default, TrackMe generates dynamic charts using fast queries against TrackMe metrics indexes, and embeds them in the email notifications:

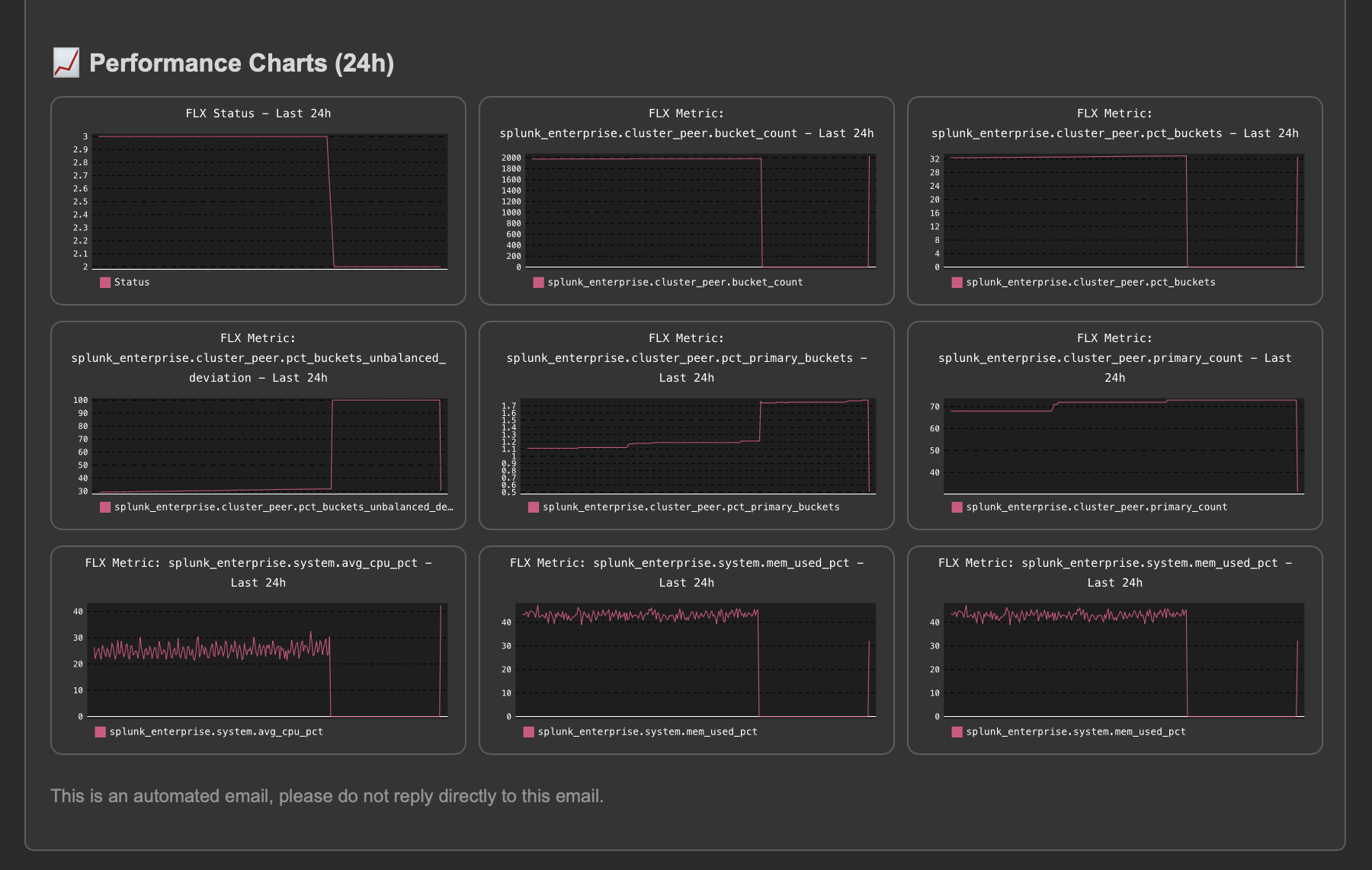

What does this look like? Very cool!:

TrackMe also stores the charts in base64 format in a persistent KVstore collection, so you can access them later if needed, notably for third party integrations.

Replace mytenant with the name of your Virtual Tenant:

| inputlookup trackme_stateful_alerting_charts_tenant_mytenant | eval key=_key, _time=mtime

You could link stateful alerts events to the charts in the KVstore collection, in a Splunk search:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id=mytenant

| lookup trackme_stateful_alerting_charts_tenant_mytenant incident_id OUTPUT chart_svg_base64
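For a third party integration, the value returned in chart_svg_base64 has to be decoded before it can be attached or displayed. The following is a minimal Python sketch, assuming the field holds standard base64 of a UTF-8 SVG document; the sample value is a stand-in built for the example, not a real TrackMe chart.

```python
import base64


def decode_chart(chart_svg_base64: str) -> str:
    """Decode a base64-encoded SVG chart into its XML text."""
    return base64.b64decode(chart_svg_base64).decode("utf-8")


# Stand-in value for illustration; a real one would come from the
# chart_svg_base64 field returned by the lookup above.
sample_svg = '<svg xmlns="http://www.w3.org/2000/svg" width="10" height="10"></svg>'
sample_b64 = base64.b64encode(sample_svg.encode("utf-8")).decode("ascii")

svg_text = decode_chart(sample_b64)
print(svg_text.startswith("<svg"))
```

The decoded text can then be written to an .svg file or passed to the third party as an attachment.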

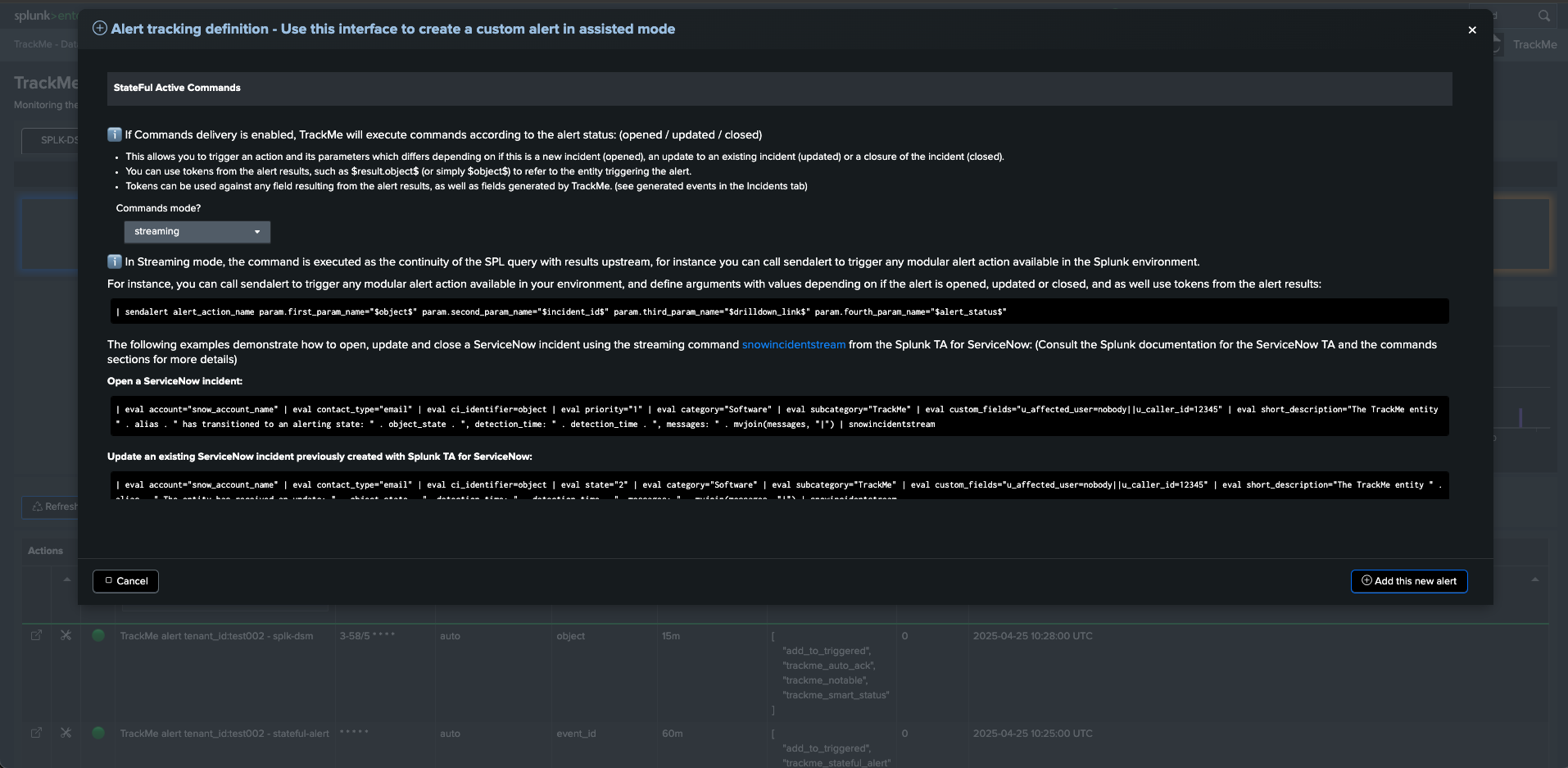

Step 4: Active commands

In TrackMe, active commands can be executed, depending on the incident status.

Streaming commands example: Creating / Updating / Closing incidents in ServiceNow

This implementation example uses the Splunk TA for ServiceNow application and its built-in command to create, update, and close incidents.

For more details about the command usage, consult the ServiceNow TA documentation.

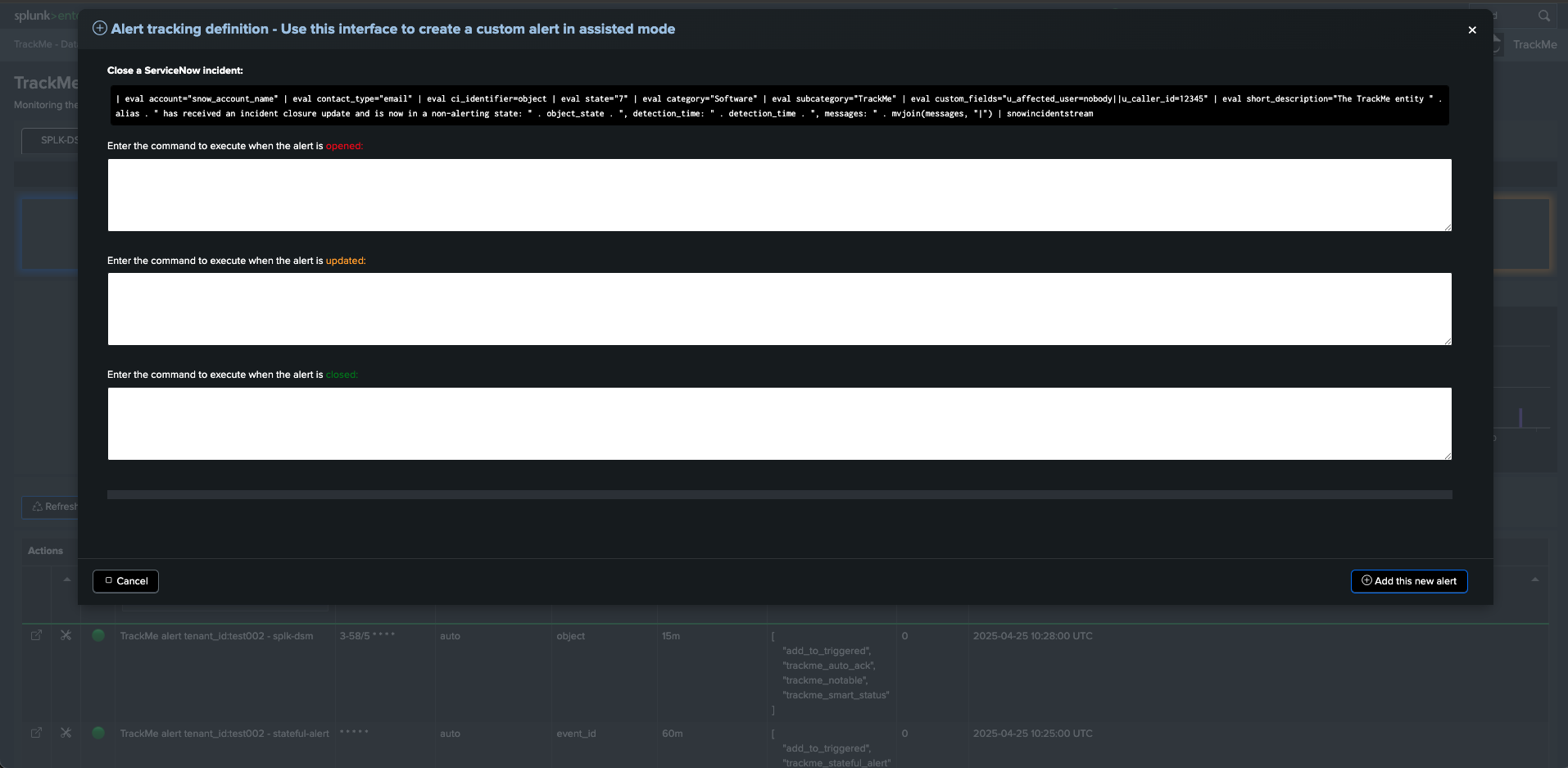

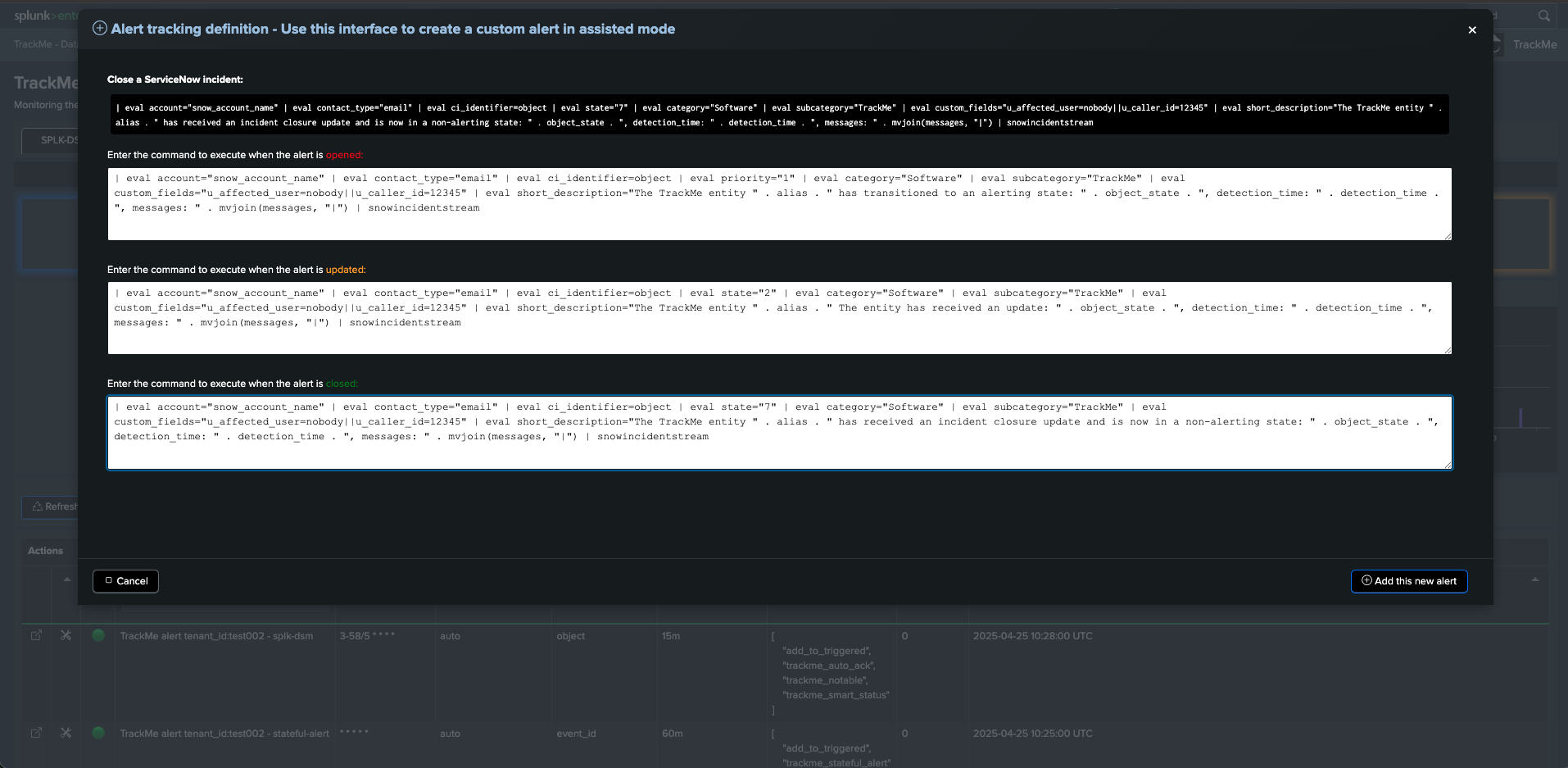

When creating the TrackMe alert, set up the active commands for each state of the alert (opened, updated, closed):

These are examples of commands that need to be adapted to your ServiceNow context:

commands_created:

| eval account="snow_account_name" | eval contact_type="email" | eval ci_identifier=object | eval priority="1" | eval category="Software" | eval subcategory="TrackMe" | eval custom_fields="u_affected_user=nobody||u_caller_id=12345" | eval short_description="The TrackMe entity " . alias . " has transitioned to an alerting state: " . object_state . ", detection_time: " . detection_time . ", messages: " . mvjoin(messages, "|") | snowincidentstream

commands_updated:

| eval account="snow_account_name" | eval contact_type="email" | eval ci_identifier=object | eval state="2" | eval category="Software" | eval subcategory="TrackMe" | eval custom_fields="u_affected_user=nobody||u_caller_id=12345" | eval short_description="The TrackMe entity " . alias . " has received an update: " . object_state . ", detection_time: " . detection_time . ", messages: " . mvjoin(messages, "|") | snowincidentstream

commands_closed:

| eval account="snow_account_name" | eval contact_type="email" | eval ci_identifier=object | eval state="7" | eval category="Software" | eval subcategory="TrackMe" | eval custom_fields="u_affected_user=nobody||u_caller_id=12345" | eval short_description="The TrackMe entity " . alias . " has received an incident closure update and is now in a non-alerting state: " . object_state . ", detection_time: " . detection_time . ", messages: " . mvjoin(messages, "|") | snowincidentstream

You can call tokens at any stage of the command, using the format:

field_name="$result.field_name$"

Or:

field_name="$field_name$"

Tokens are applicable against any field resulting from the alert, as well as fields added by TrackMe. You can consult an example of an incident event in the Incidents tab; see the Anatomy of Stateful Incidents Events section for more details.
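To make the two token forms concrete, here is a small Python sketch of how such a substitution could behave. It is an illustration only and not TrackMe's implementation: the function name render_tokens and the choice to leave unknown tokens untouched are assumptions made for the example.

```python
import re

# Matches both $field$ and $result.field$ (illustrative pattern).
TOKEN = re.compile(r"\$(?:result\.)?([A-Za-z_][A-Za-z0-9_]*)\$")


def render_tokens(template, fields):
    """Replace $field$ and $result.field$ with values from `fields`.

    Tokens with no matching field are left untouched (an assumption
    made for this sketch).
    """
    def substitute(match):
        name = match.group(1)
        return str(fields[name]) if name in fields else match.group(0)

    return TOKEN.sub(substitute, template)


incident = {"object": "myobject", "alert_status": "opened"}
command = 'ci="$object$" status="$result.alert_status$" other="$unknown$"'
rendered = render_tokens(command, incident)
print(rendered)
```

Both forms resolve to the same field value, which is why either can be used in a command.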

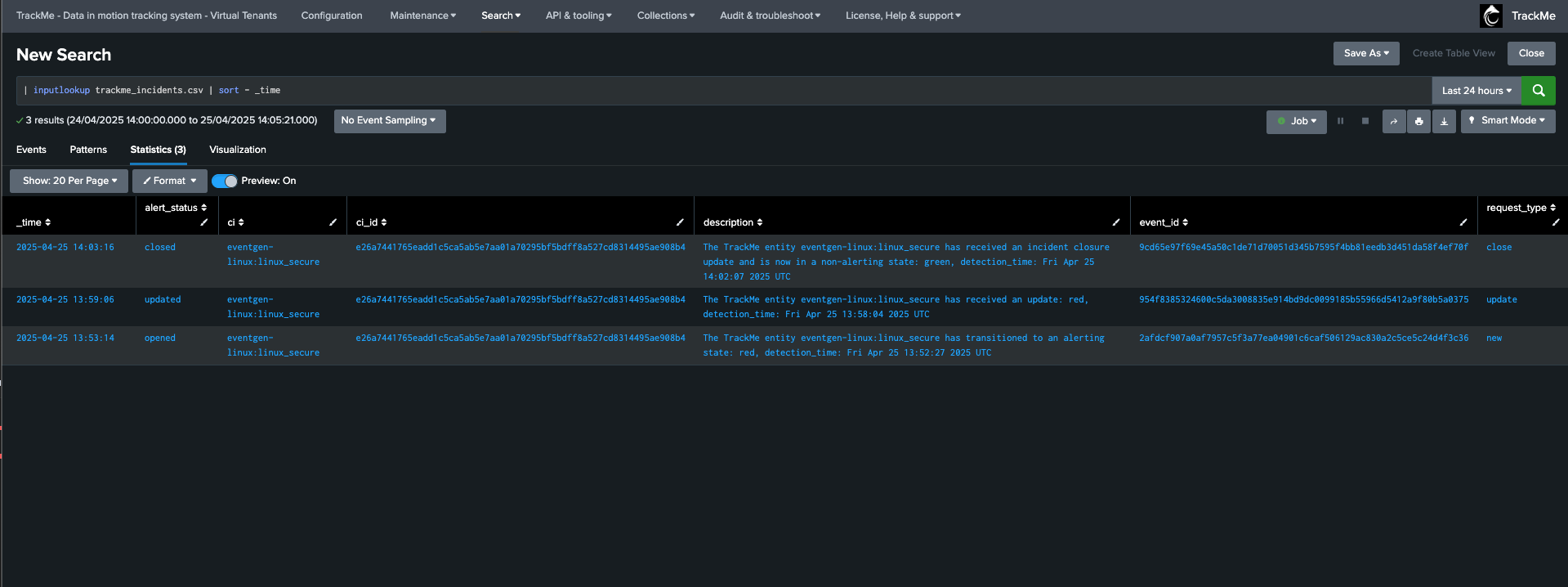

Generating commands example

TrackMe can also call a generating command instead, which you can leverage to tackle your specific use case.

The following example shows a simple usage for demonstration purposes using the Splunk makeresults command, where we would add a subset of the content to a lookup file, which could then be consumed by a third-party logic.

commands_created:

| makeresults | eval request_type="new", ci="$object$", ci_id="$object_id$", alert_status="$alert_status$", event_id="$event_id$", description="The TrackMe entity $alias$ has transitioned to an alerting state: $object_state$, detection_time: $detection_time$" | outputlookup append=t trackme_incidents.csv

commands_updated:

| makeresults | eval request_type="update", ci="$object$", ci_id="$object_id$", alert_status="$alert_status$", event_id="$event_id$", description="The TrackMe entity $alias$ has received an update: $object_state$, detection_time: $detection_time$" | outputlookup append=t trackme_incidents.csv

commands_closed:

| makeresults | eval request_type="close", ci="$object$", ci_id="$object_id$", alert_status="$alert_status$", event_id="$event_id$", description="The TrackMe entity $alias$ has received an incident closure update and is now in a non-alerting state: $object_state$, detection_time: $detection_time$" | outputlookup append=t trackme_incidents.csv

Example of a cycle of incidents for the same entity:

Troubleshooting active commands execution

Hint

About the user context

It is important to remember that the command is executed in the context of the owner of the alert.

When it comes to TrackMe, the owner of the alert is handled automatically by TrackMe to correspond to the owner of the Virtual Tenant.

By default, the owner when creating Virtual Tenants in TrackMe is nobody (splunk-system-user).

Therefore, keep this in mind while troubleshooting the command execution, as a third party command might require specific permissions and capabilities to be available for the user context.

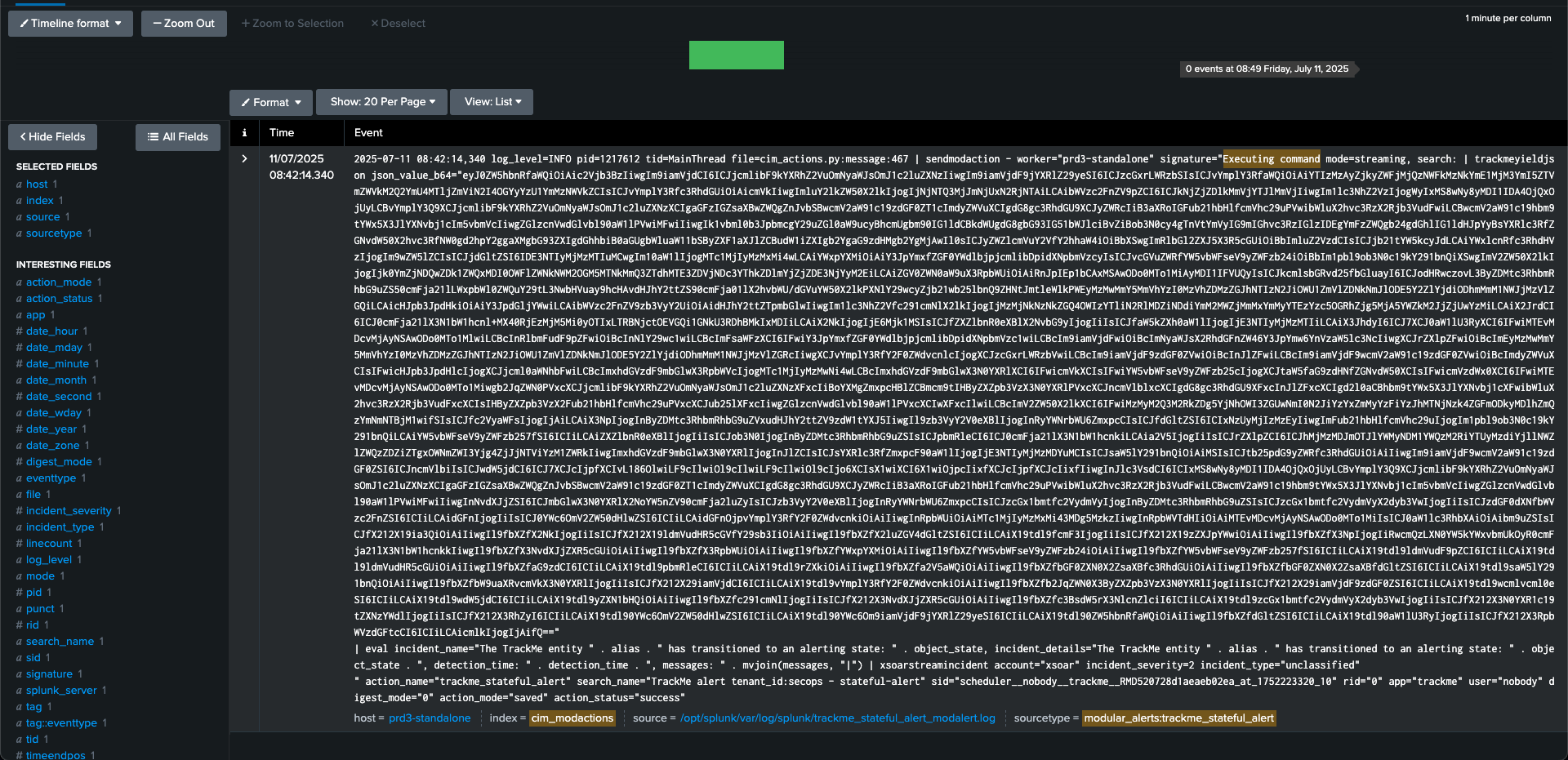

Consult the logs of the Stateful alert action to review the execution of the active commands:

index=cim_modactions sourcetype=modular_alerts:trackme_stateful_alert "Command"

Especially, the command execution will be logged as:

index=cim_modactions sourcetype=modular_alerts:trackme_stateful_alert "Executing command"

If the active command is executed, but does not return any results, the logs will show an error message, otherwise an informational message will be shown.

TrackMe processes active commands by converting the upstream results into a base64-encoded string, then executing the TrackMe command trackmeyieldjson followed by the requested search logic, such as:

| trackmeyieldjson json_value_b64="<encoded_base64_string>"

This is a very powerful and flexible approach: you can easily re-execute the command at any time with 100% of the original context and easily troubleshoot any issue.

example:

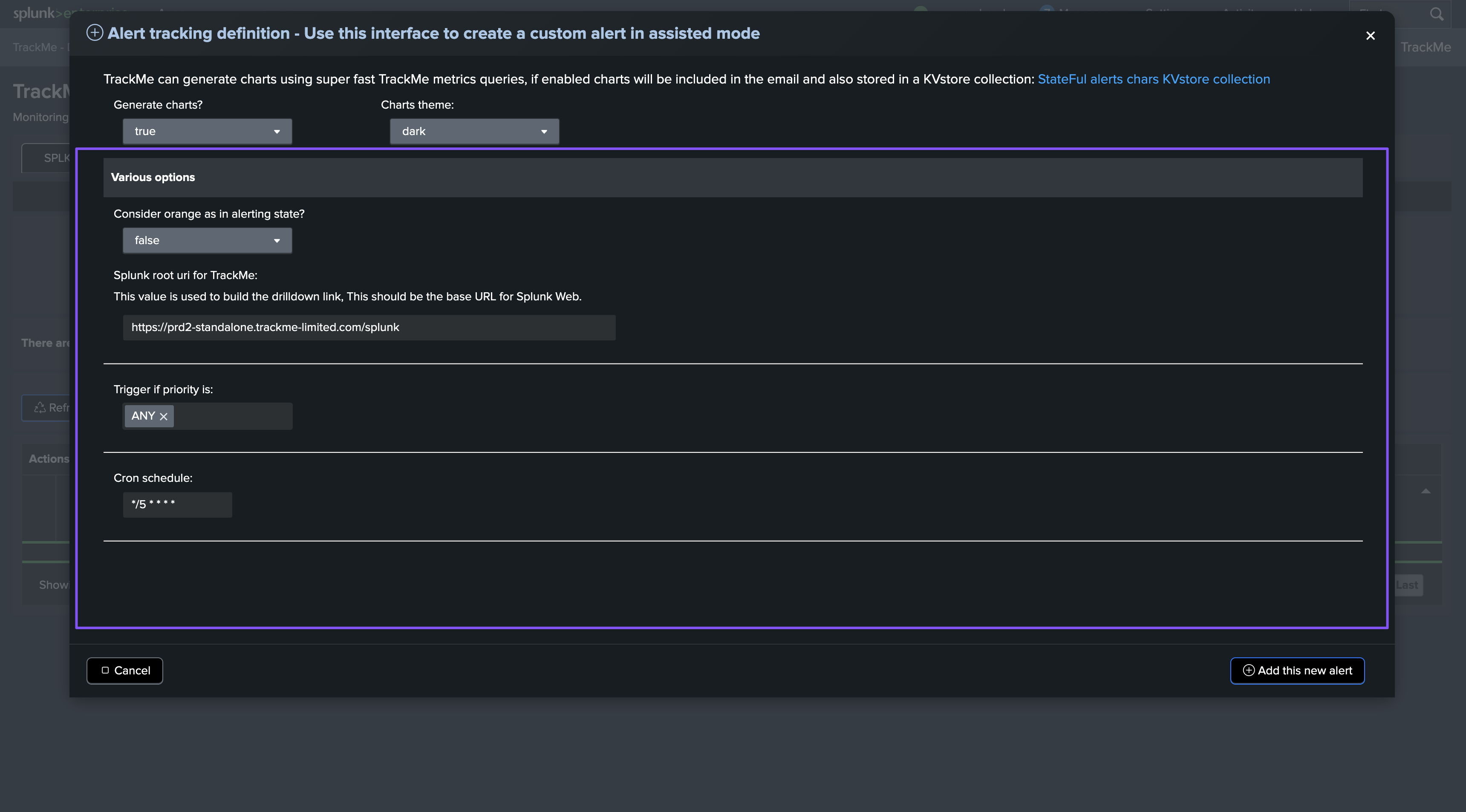
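When troubleshooting, it can help to inspect what a base64 string from the logs actually contains. The Python sketch below shows the round trip under the assumption that the payload is JSON serialized as UTF-8 and encoded with standard base64; the exact payload layout used by TrackMe is not documented here, and the sample record is hypothetical.

```python
import base64
import json


def encode_results(results):
    """Serialize a results record to JSON, then to a base64 string."""
    return base64.b64encode(json.dumps(results).encode("utf-8")).decode("ascii")


def decode_results(json_value_b64):
    """Reverse of encode_results: base64 string back to a Python object."""
    return json.loads(base64.b64decode(json_value_b64))


# Hypothetical upstream result, for illustration only.
upstream = {"object": "myobject", "alert_status": "opened"}
encoded = encode_results(upstream)

# The search that would be re-executed with the original context.
search = f'| trackmeyieldjson json_value_b64="{encoded}"'
print(search)
print(decode_results(encoded))
```

Decoding the string taken from the "Executing command" log entry shows the exact fields the command received.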

Step 5: Various options

Hint

About the cron schedule: the cron schedule is no longer shown as configurable. This is on purpose, because stateful alerts are meant to be executed frequently, ideally every 5 minutes:

The stateful handler tracks changes in the entities from different angles, and then decides whether the notification means the beginning of an incident, an update, or a closure.

For this to work as expected, the alert must be executed as soon as possible; it then handles the incident life cycle.

Therefore, it is not recommended to change the default cron schedule, which should be set to every 5 minutes.

Finally, there are various options that you can configure for the alert, such as:

Consider orange as an alerting state: In TrackMe, orange is typically used to indicate a warning state; you can configure TrackMe to consider this as an alerting state.

Drilldown URI: The drilldown URI is used to build the drilldown link in the email notifications.

Priority: The priority of entities for triggering the alert; you may, for instance, want to trigger the alert only on high or critical priority entities.

Additional information

TrackMe implements various dynamic actions, depending on the circumstances or the type of entities it is dealing with, to automatically adapt the behavior of Stateful alerts.

Time detection, deduping and backfilling: TrackMe automatically identifies whether events should be skipped according to the entity cycle. It skips events when necessary and never sends duplicate alerts, whatever the alert triggers are (so you can safely use an overlapping search approach and TrackMe does the hard work!).

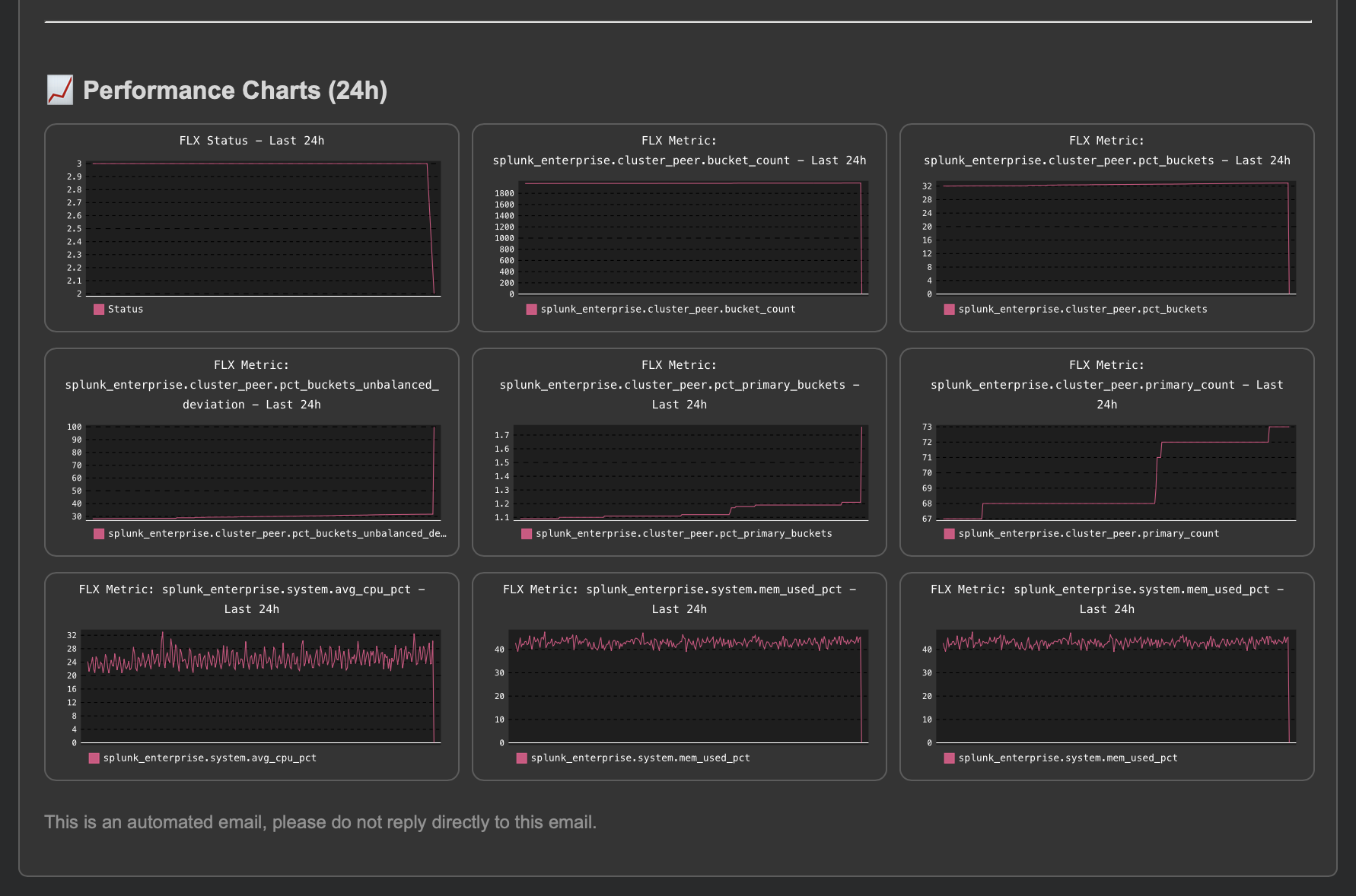

Dynamic charting: TrackMe automatically chooses which metrics should be charted, and how, based on the entity type and metrics available.

For instance, for the splk-dsm component, it will automatically add component specific charts such as data sampling charts (quality issues).

For components like splk-flx and splk-wlk, TrackMe will automatically verify metrics availability for the entity, and will generate charts accordingly.

Status flexibility: TrackMe has different statuses, and you can influence, for instance, whether orange should be considered an alerting state. On the other hand, “blue”, which is a special status (notably for logical grouping), is automatically considered a non-alerting state, eligible for incident closures.
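The incident life cycle and the status rules described above can be summarized with a simplified Python sketch. This is a model for illustration, not TrackMe's handler: the function names are hypothetical, and it assumes that red is always alerting, that orange is alerting only when the option is enabled, and that green and blue are never alerting.

```python
from typing import Optional


def is_alerting(state: str, orange_is_alerting: bool = False) -> bool:
    """Return True if the entity state counts as alerting."""
    if state == "red":
        return True
    if state == "orange":
        return orange_is_alerting
    return False  # green and blue are non-alerting


def next_alert_status(incident_open: bool, state: str, new_event: bool,
                      orange_is_alerting: bool = False) -> Optional[str]:
    """Decide which stateful event, if any, should be emitted."""
    alerting = is_alerting(state, orange_is_alerting)
    if not incident_open:
        return "opened" if alerting else None
    if not alerting:
        return "closed"
    return "updated" if new_event else None


print(next_alert_status(False, "red", True))     # no incident yet
print(next_alert_status(True, "red", True))      # incident already open
print(next_alert_status(True, "orange", False))  # back to non-alerting
```

With the orange option left disabled, an entity that goes from red back to orange closes its incident, which matches the cluster monitoring example later in this document.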

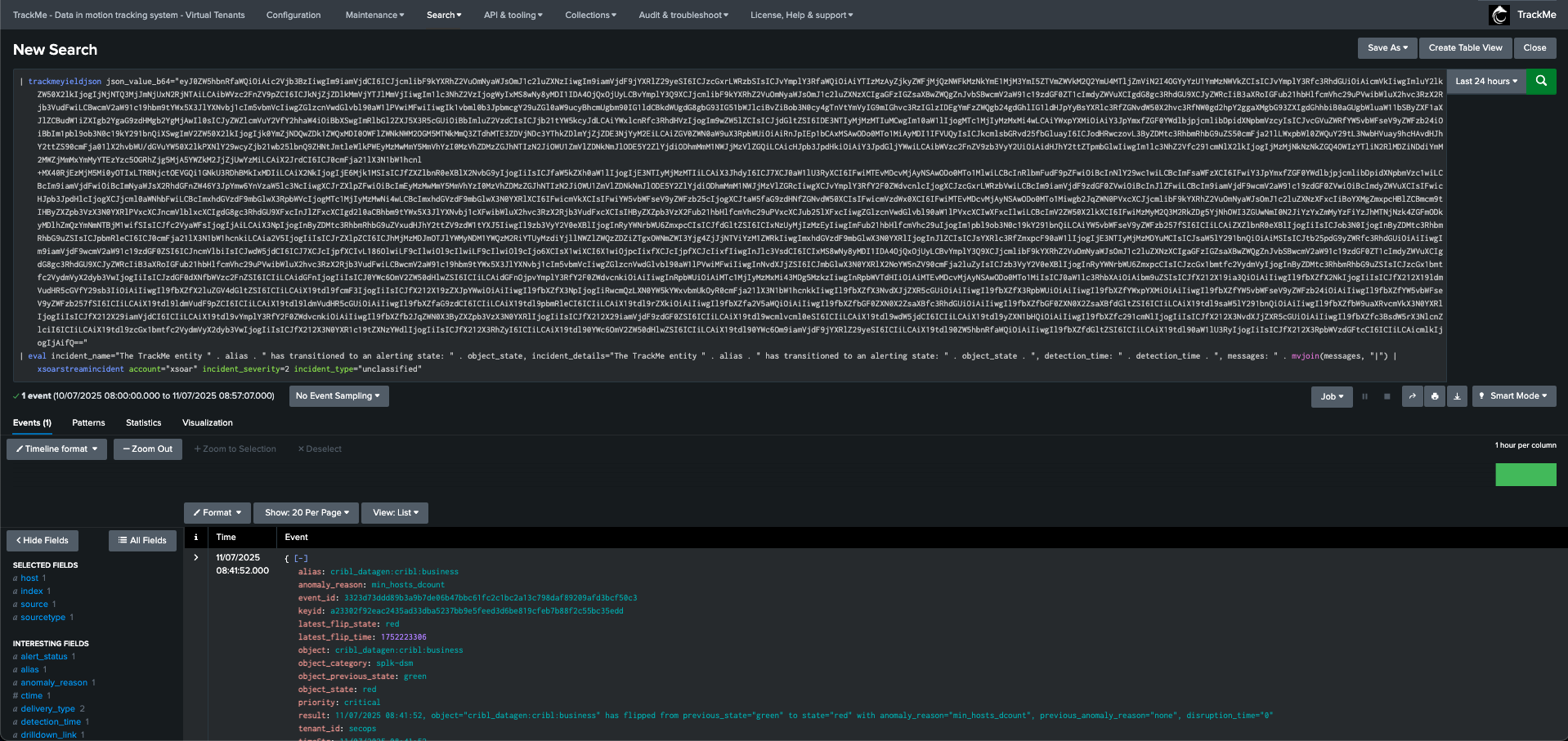

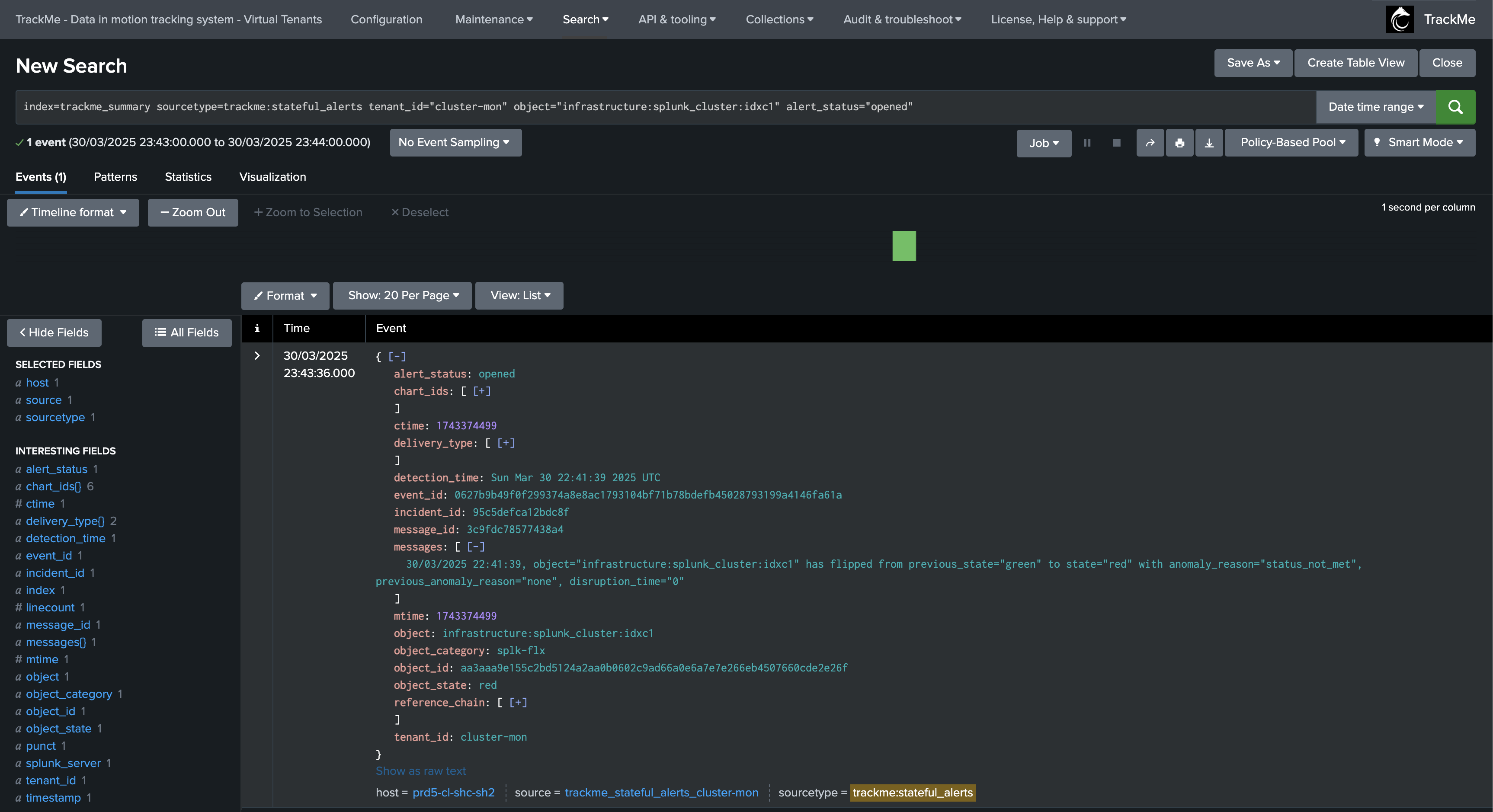

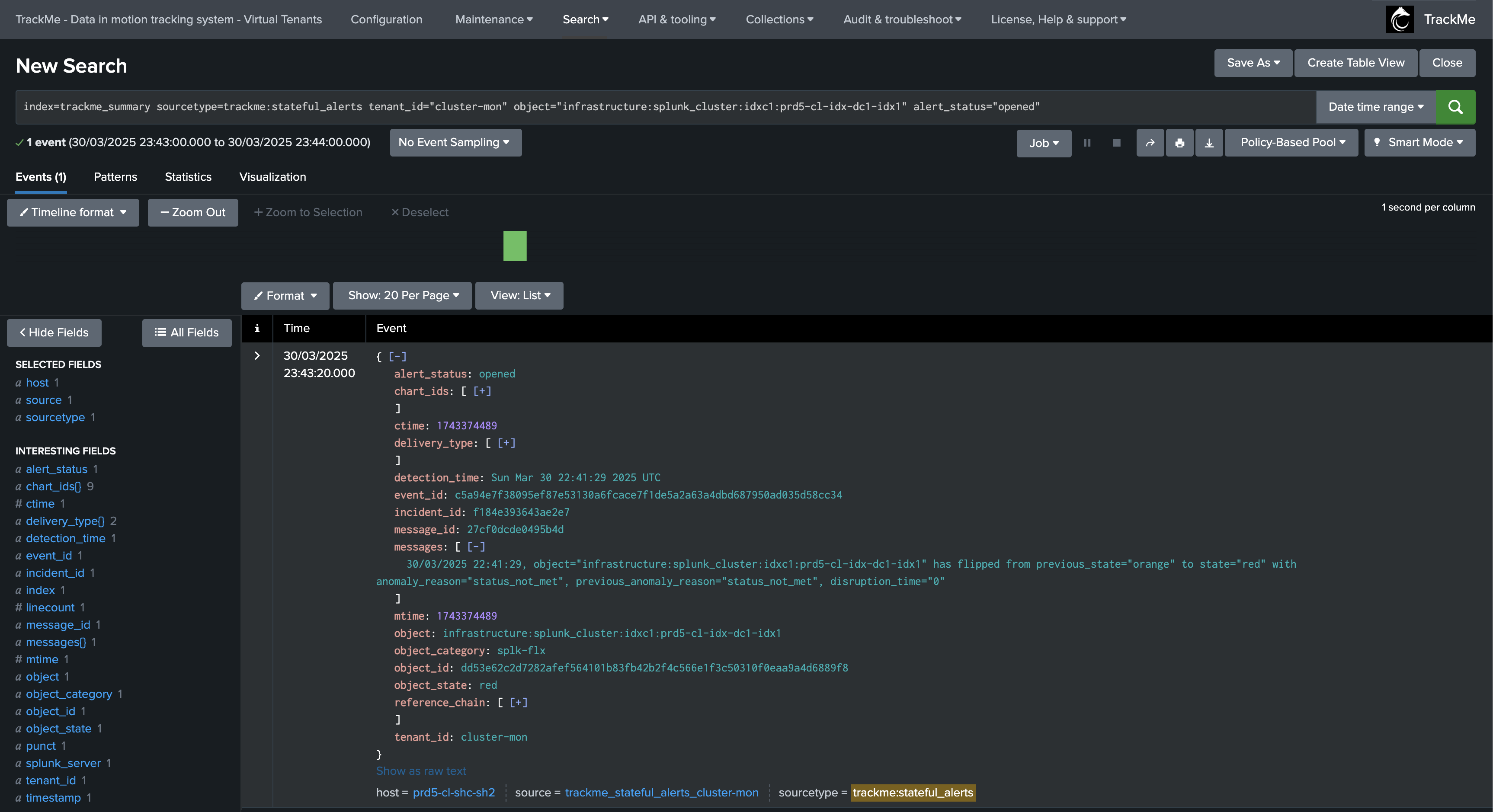

Anatomy of Stateful Incidents Events

In addition to rich email notifications, TrackMe also generates incident events, which can easily be used to trigger third party integrations.

alert_status: opened - This is the start of the incident

alert_status: updated - This is an update of the incident

alert_status: closed - This is the closure of the incident

Example of an opening incident event:

index=trackme_summary sourcetype="trackme:stateful_alerts" alert_status=opened tenant_id=* object_category=* object=*

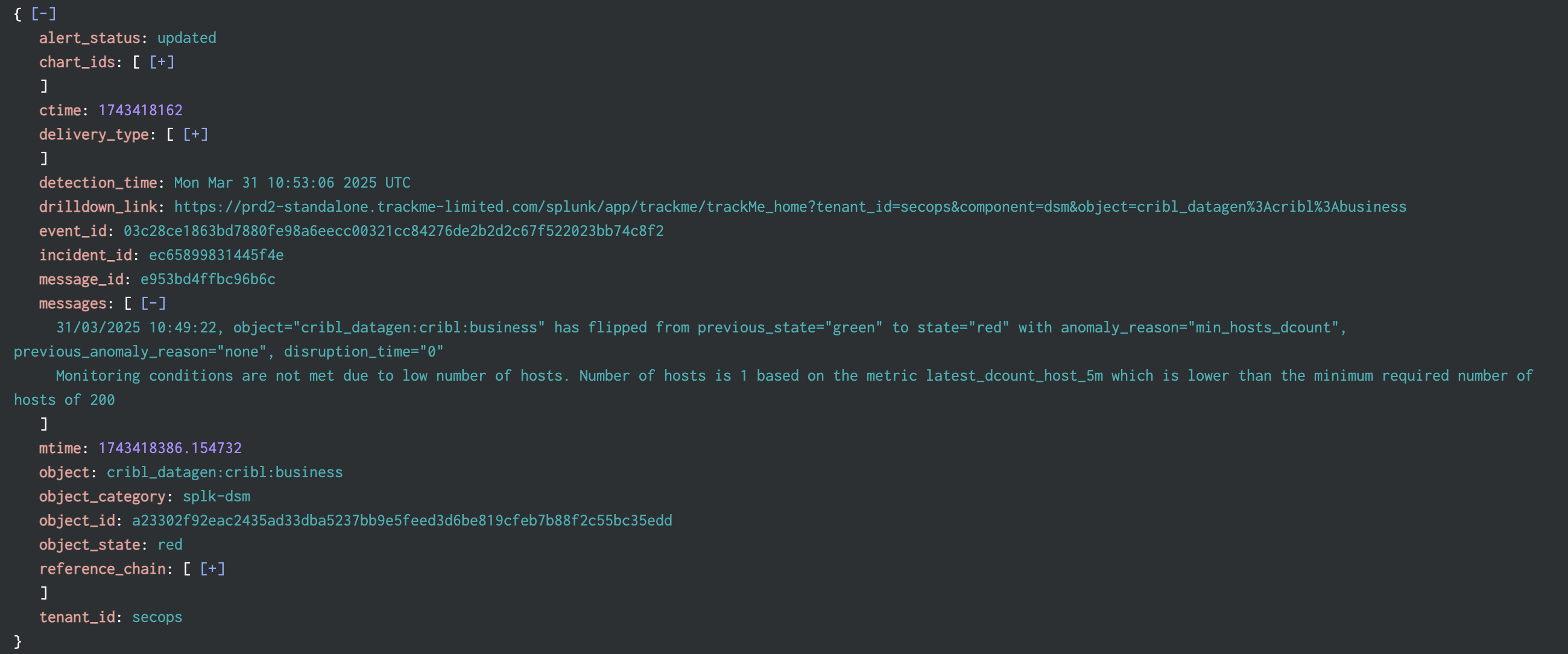

Example of an updating incident event:

index=trackme_summary sourcetype="trackme:stateful_alerts" alert_status=updated tenant_id=* object_category=* object=*

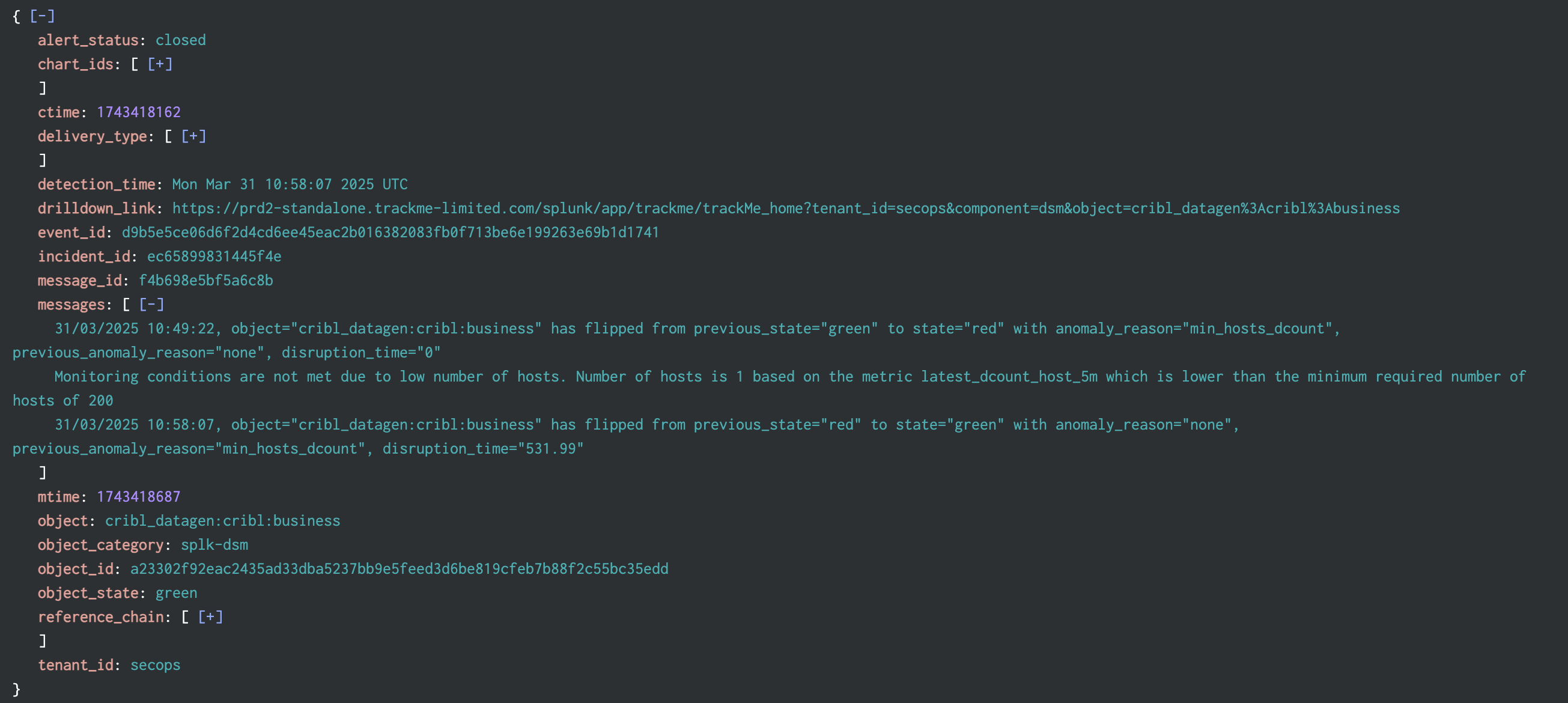

Example of a closing incident event:

index=trackme_summary sourcetype="trackme:stateful_alerts" alert_status=closed tenant_id=* object_category=* object=*

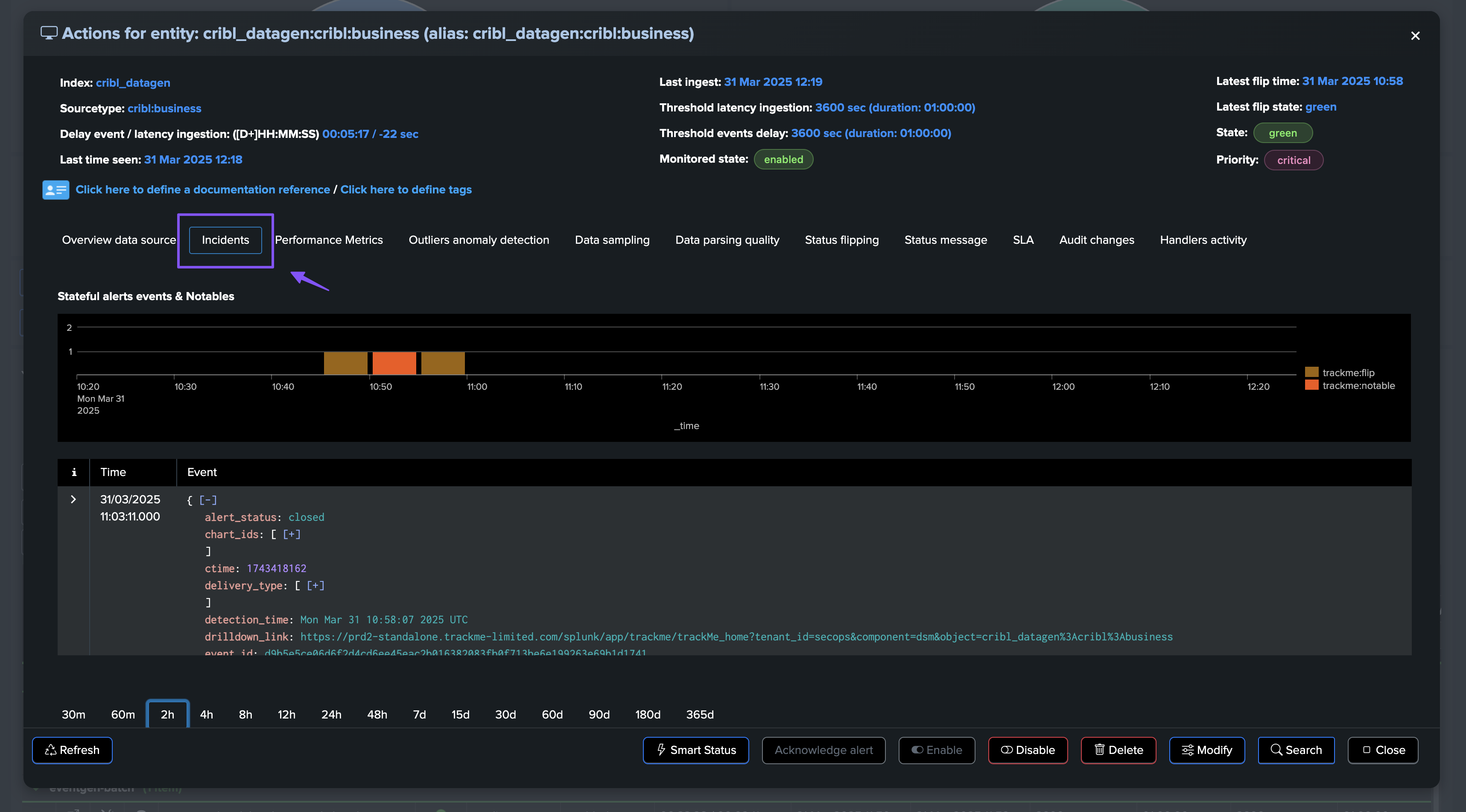

In TrackMe, incident events can be accessed through the UI in the Incidents tab (which was renamed from Notables to Incidents in this release):

TrackMe Stateful alerts events are JSON-formatted events that contain the following key information:

| Field | Description |
|---|---|
| alert_status | The status of the alert, this can be opened, updated or closed |
| chart_ids | The list of chart ids associated with the incident, these can be used to retrieve the charts from the KVstore collection |
| ctime | The creation time of the incident (epoch) |
| delivery_type | The list of delivery types that were called for this incident |
| detection_time | The time of the detection of the alert in human readable format (strftime %c %Z) |
| drilldown_link | A link to the TrackMe Home user interface which filters on the tenant, component and entity, and automatically opens the entity overview screen (from TrackMe 2.0.88) |
| event_id | The unique identifier for this notable event, as the sha-256 hash of the notable event content (from TrackMe 2.1.0) |
| incident_id | The unique identifier for this incident, this is a shorter identifier which is common to events and objects that relate to the same incident |
| message_id | A unique identifier for this message, this is principally used for emails |
| messages | The list of messages from TrackMe that occur during the incident life cycle, this relates to the TrackMe status_message field |
| mtime | The last modification time of the incident (epoch) |
| object | The object name for this entity, this basically is the name of entities in TrackMe |
| object_category | A TrackMe identifier for each component, this describes which type of TrackMe component this notable is related to. |
| object_id | The unique identifier for this object in the tenant / component central KVstore collection |
| object_state | The current state of the object (blue / green / orange / red) |
| reference_chain | The list of message_id that relate to the same incident, this is used for email threading purposes |
| tenant_id | The tenant identifier this Notable event is related to. |
| priority | The priority definition for this object (low / medium / high / critical) |
| source_message | The TrackMe sourcetype at the origin of the incident, such as trackme:flip |
| source_message_id | The unique identifier (event_id) of the original event that triggered the incident |
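Because these events are plain JSON, a third party integration can route them on alert_status with very little code. The following Python sketch assumes an event carrying the fields listed above; the sample values and the summarize helper are hypothetical and only serve the illustration.

```python
import json

# Hypothetical event built from the documented fields; values are made up.
sample_event = json.dumps({
    "alert_status": "opened",
    "incident_id": "abc123",
    "tenant_id": "mytenant",
    "object": "myobject",
    "object_state": "red",
    "priority": "high",
})

ACTIONS = {"opened": "Open", "updated": "Update", "closed": "Close"}


def summarize(raw_event: str) -> str:
    """Turn a stateful alert event into a one-line ticketing instruction."""
    event = json.loads(raw_event)
    status = event["alert_status"]
    if status not in ACTIONS:
        raise ValueError(f"unexpected alert_status: {status}")
    return (f"{ACTIONS[status]} ticket for {event['object']} "
            f"(incident {event['incident_id']}, state {event['object_state']})")


print(summarize(sample_event))
```

Using incident_id as the correlation key lets the integration attach updates and the closure to the ticket created at opening time.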

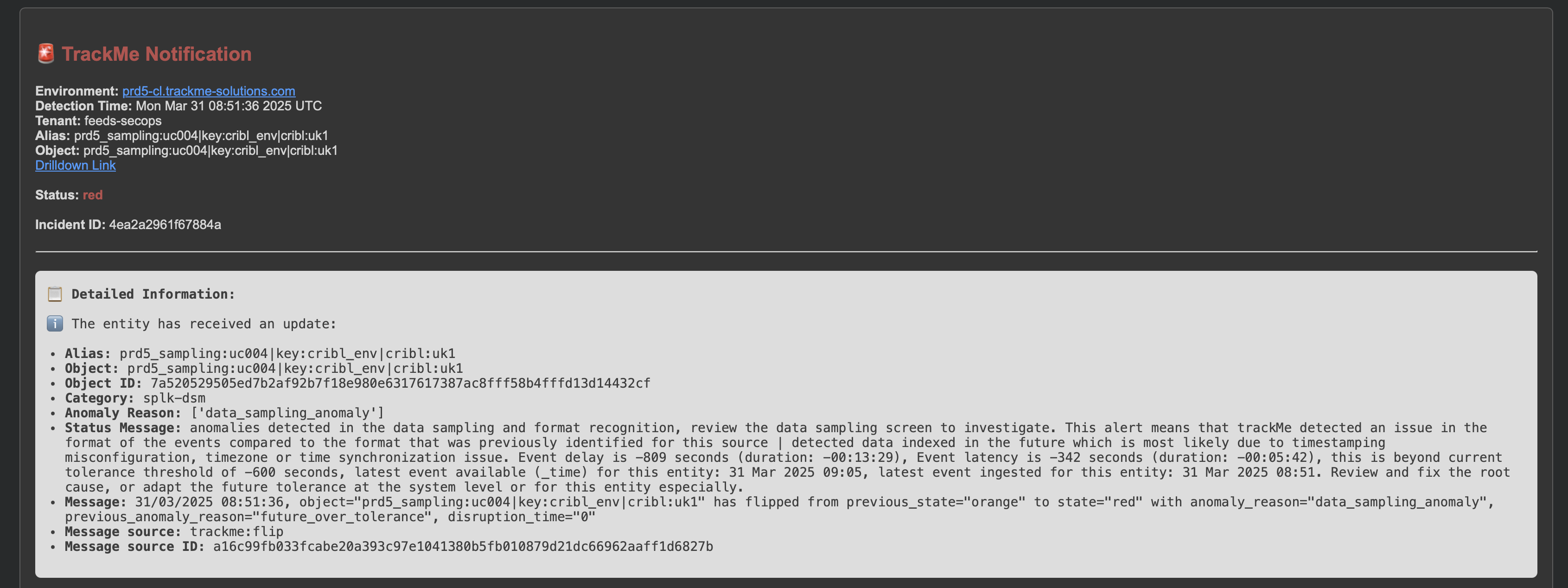

Example: Feed tracking entity (splk-dsm)

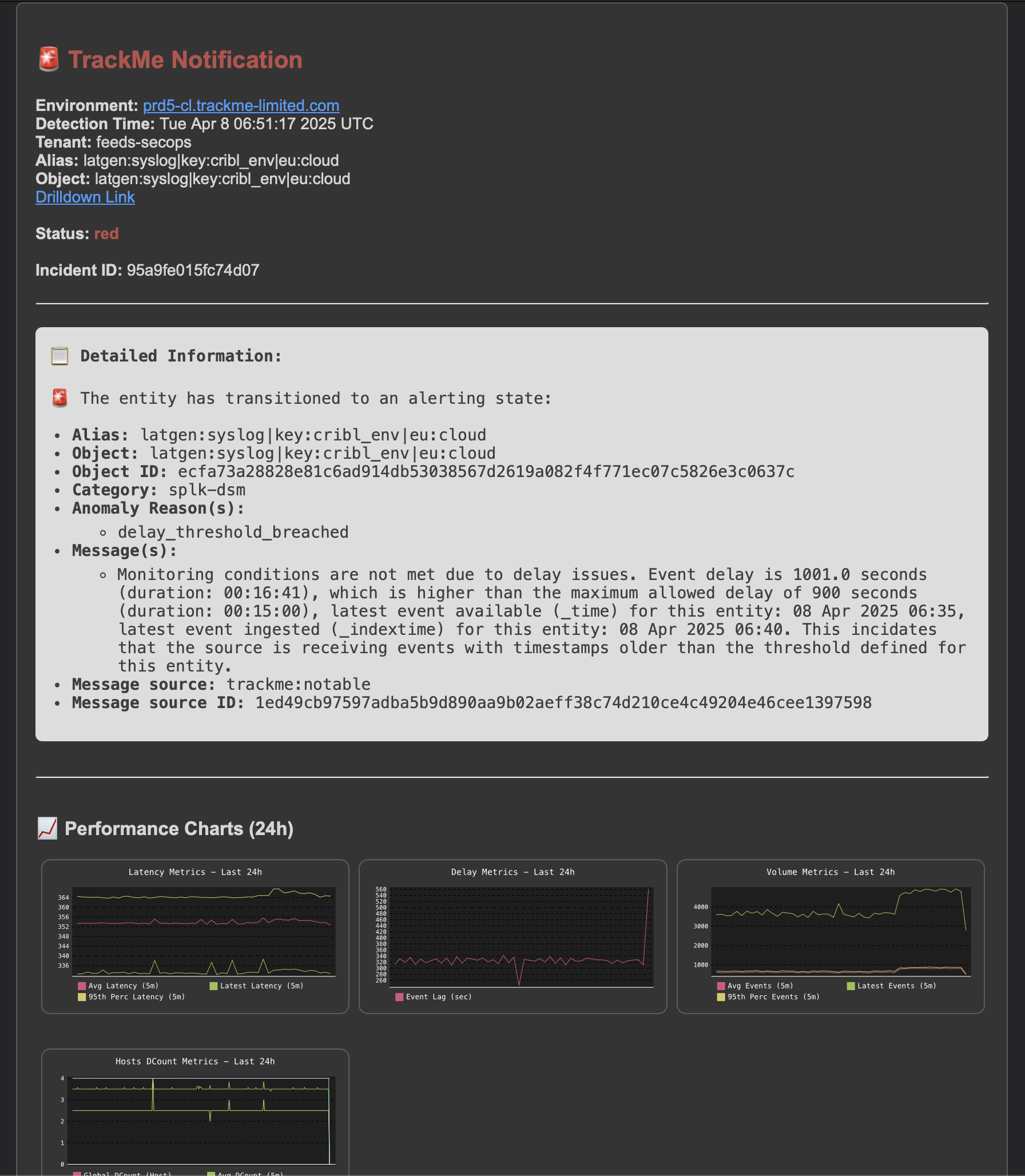

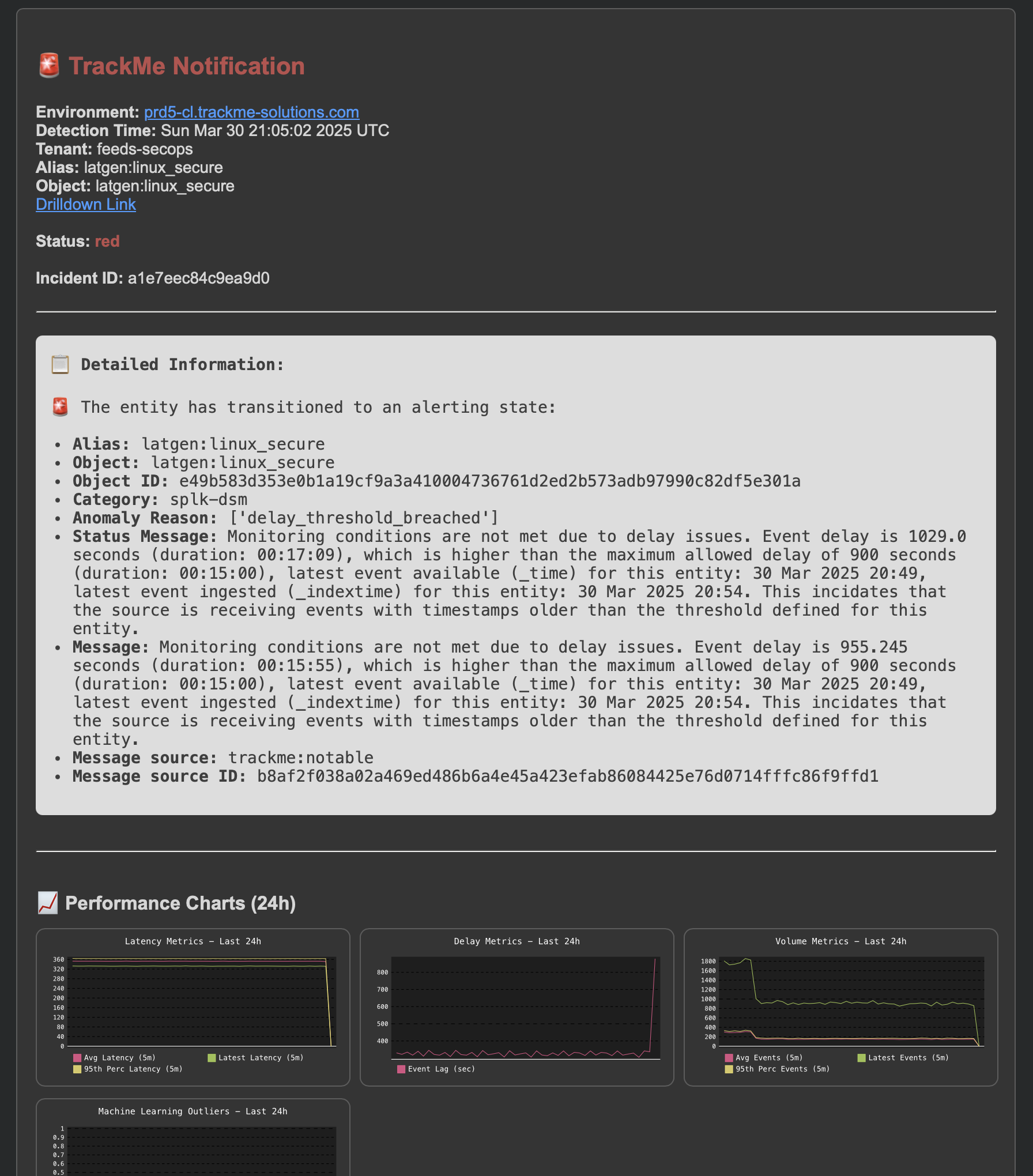

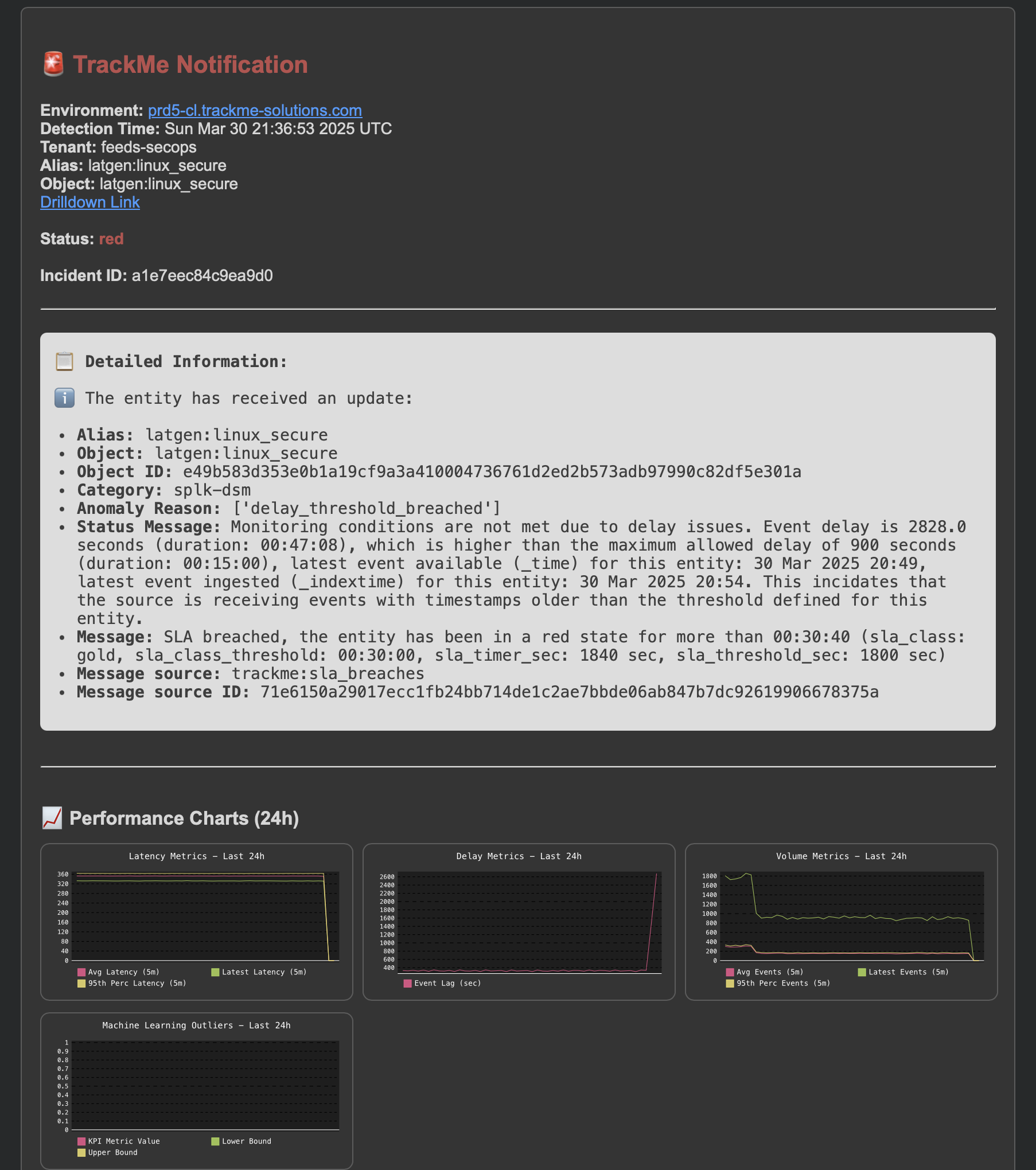

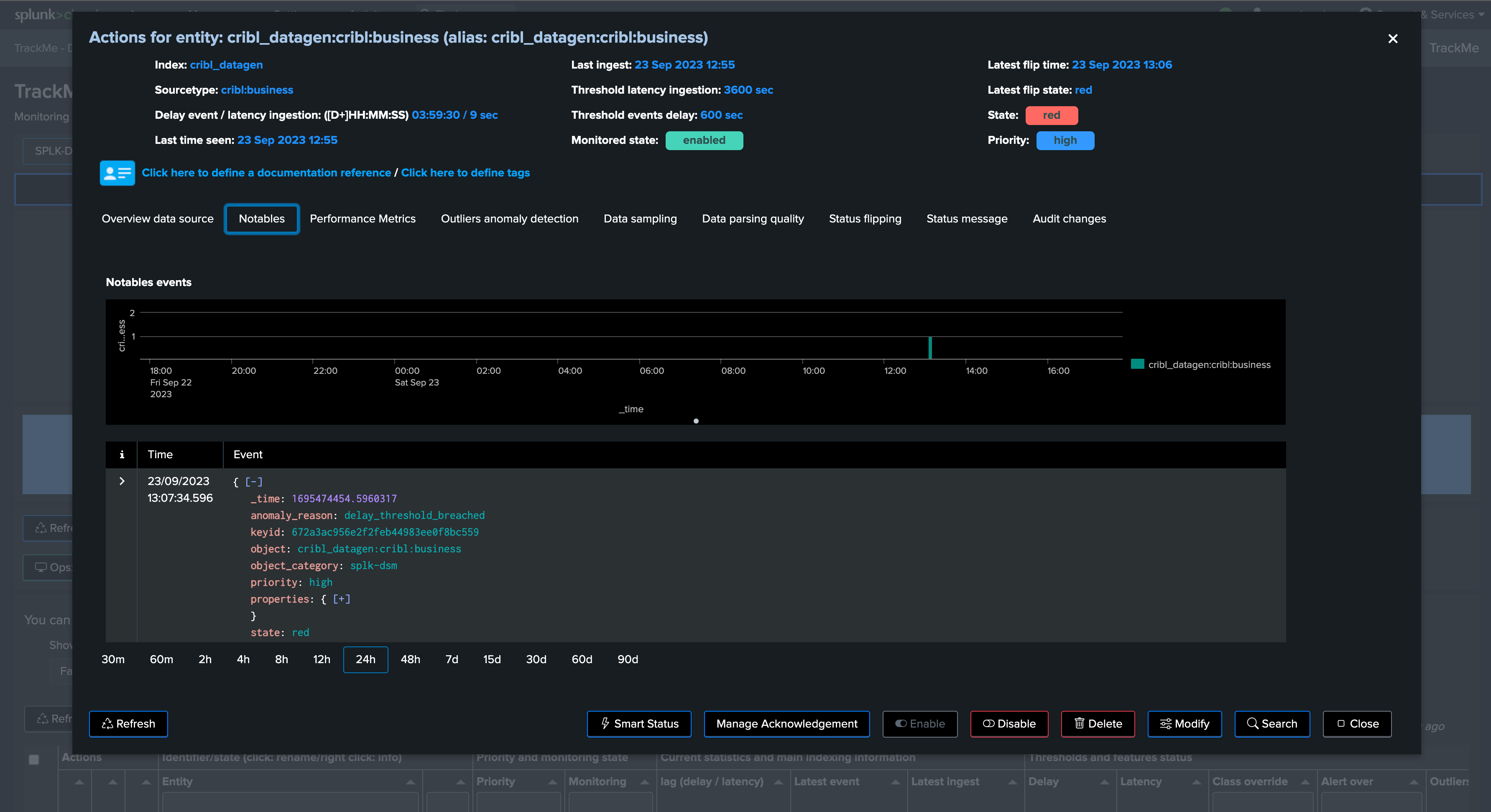

In this example, a sourcetype is affected by high latency and/or delay.

A first notification is generated, either through a notable event or a flip event:

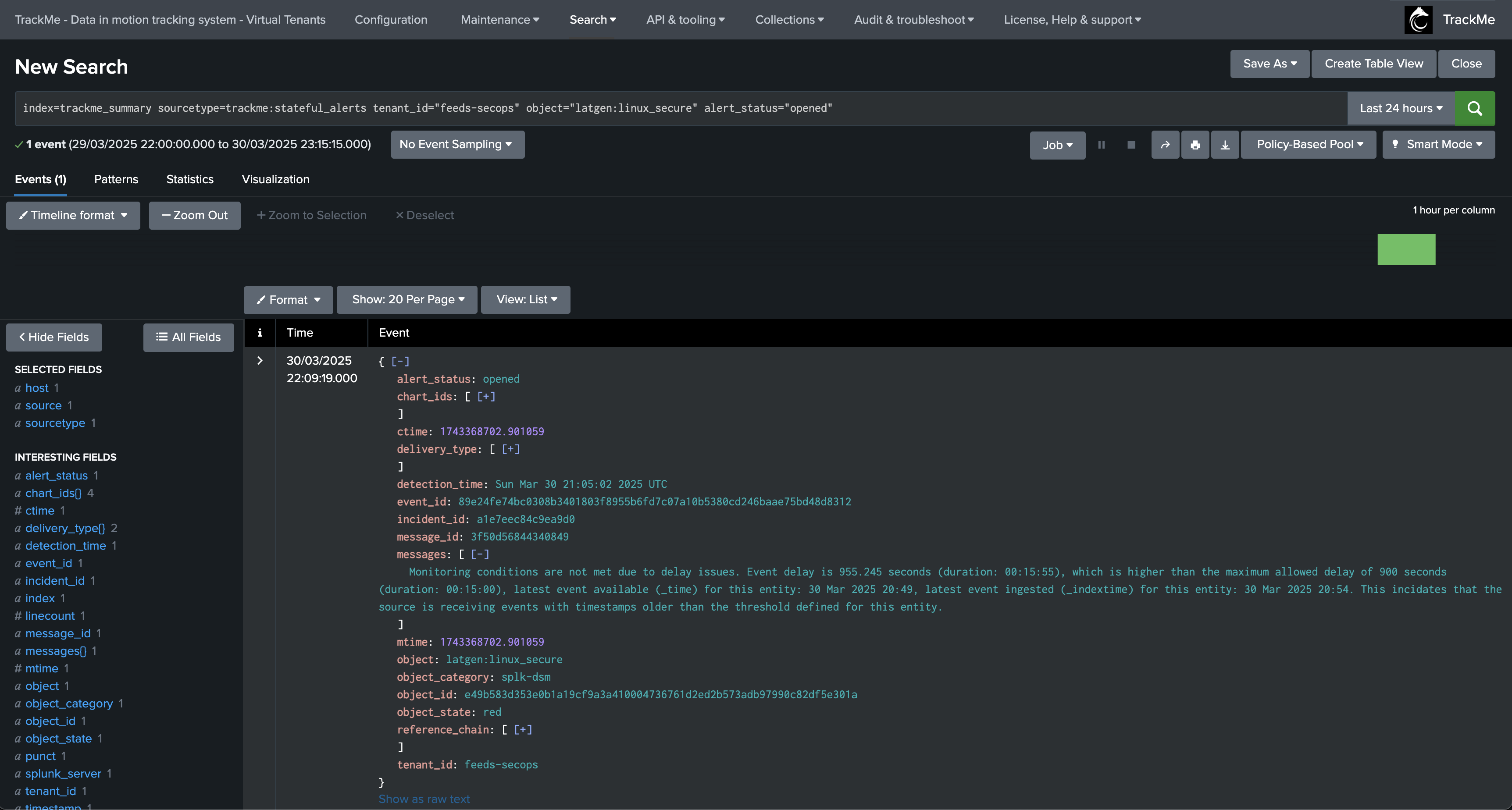

An opening incident event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="mytenant" object="myobject" alert_status="opened"

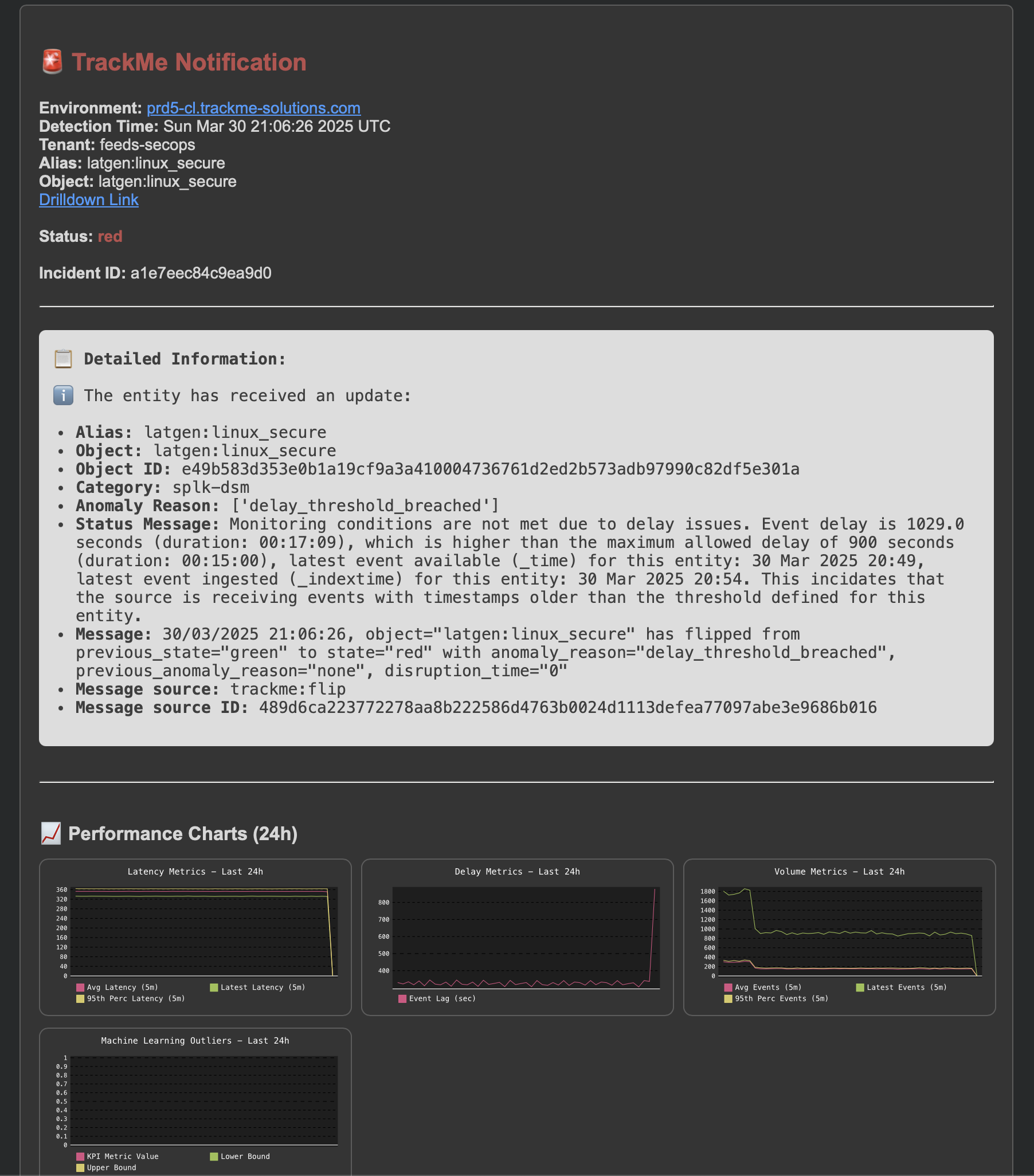

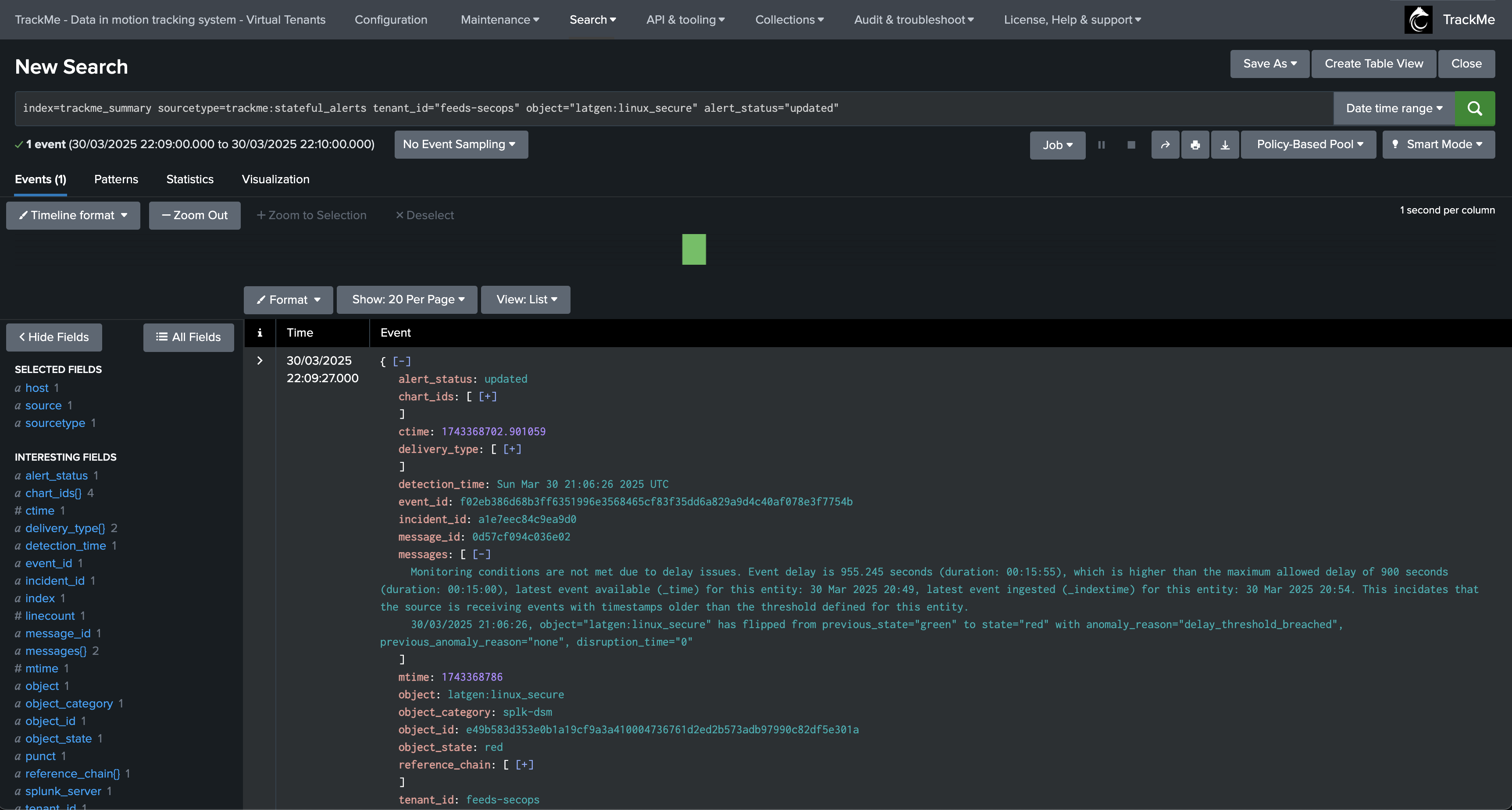

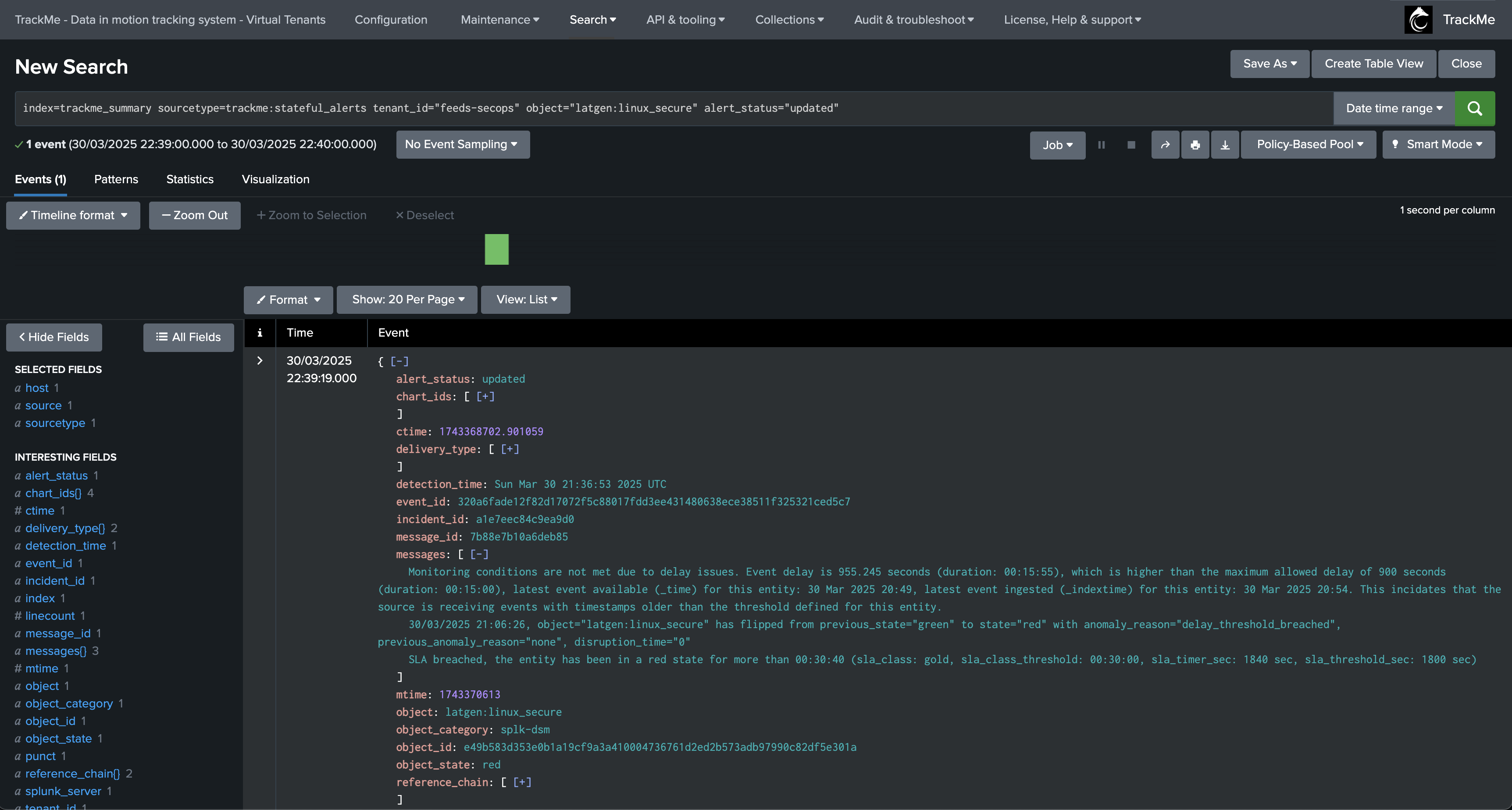

Eventually, an update will be issued if a new event is generated:

An update incident event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="mytenant" object="myobject" alert_status="updated"

Later on, the SLA is breached and a new notification is generated:

Another update incident event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="mytenant" object="myobject" alert_status="updated"

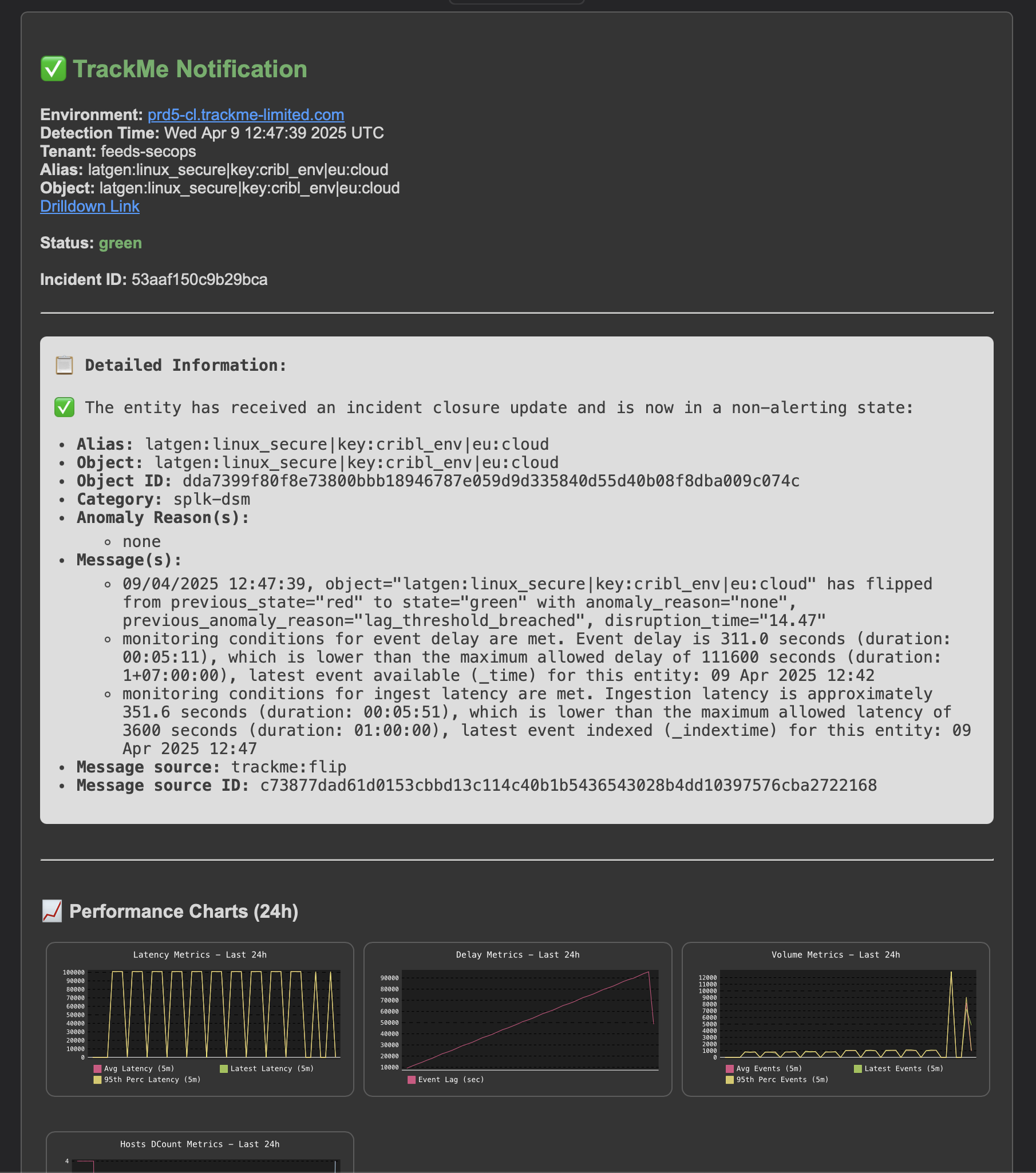

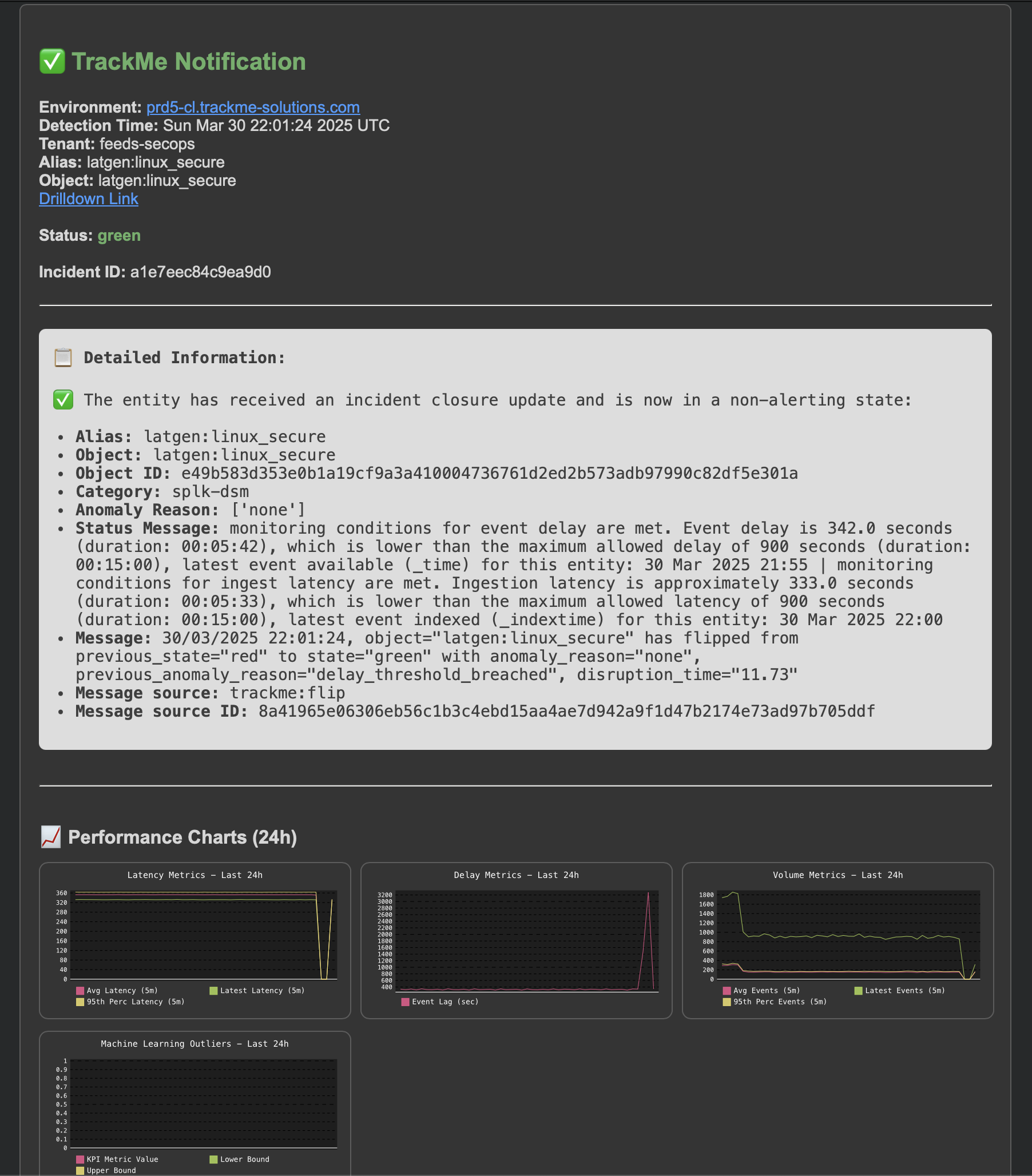

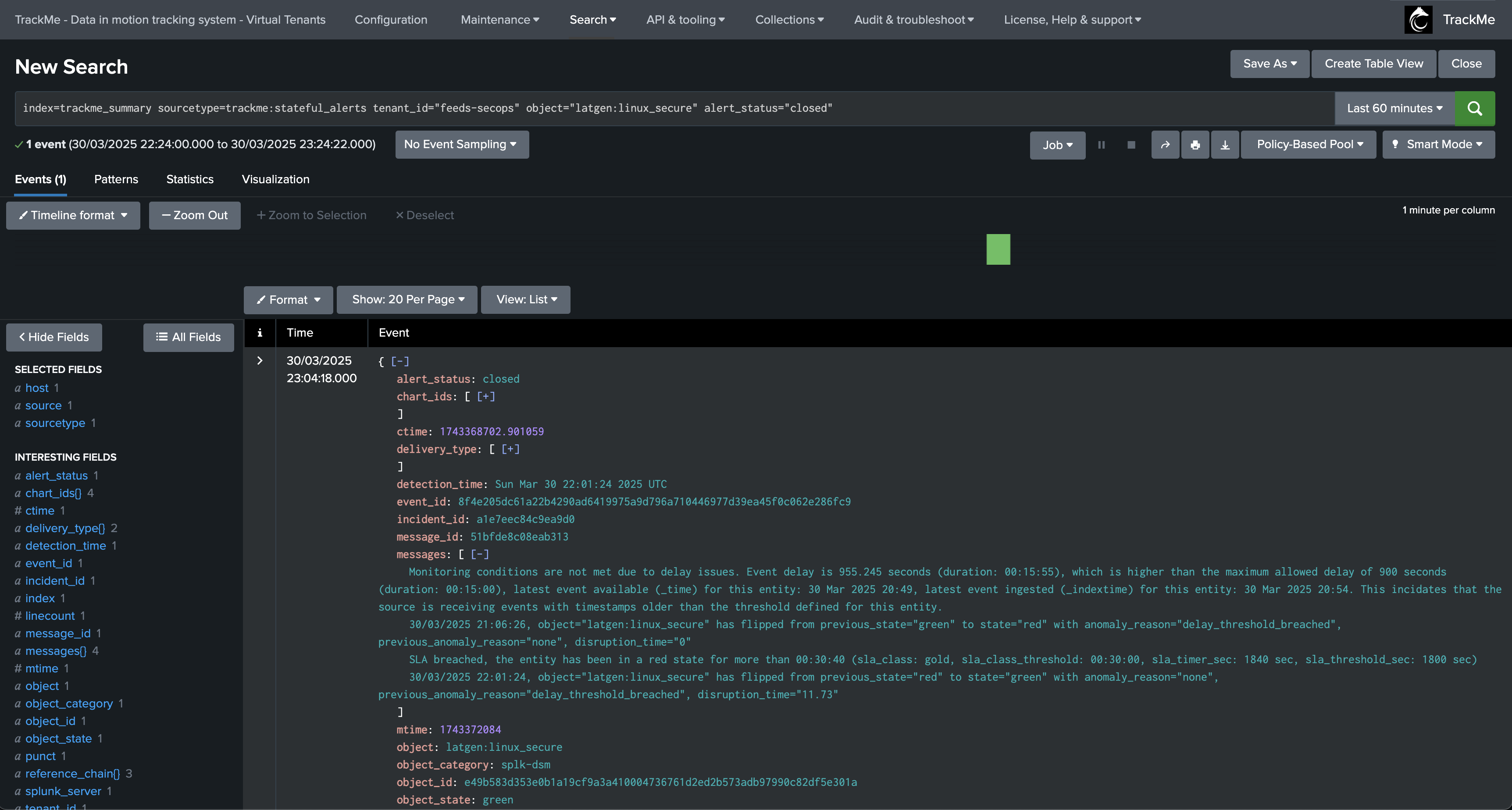

Finally, the issue is resolved and an incident closure notification is generated:

An incident closure event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="mytenant" object="myobject" alert_status="closed"

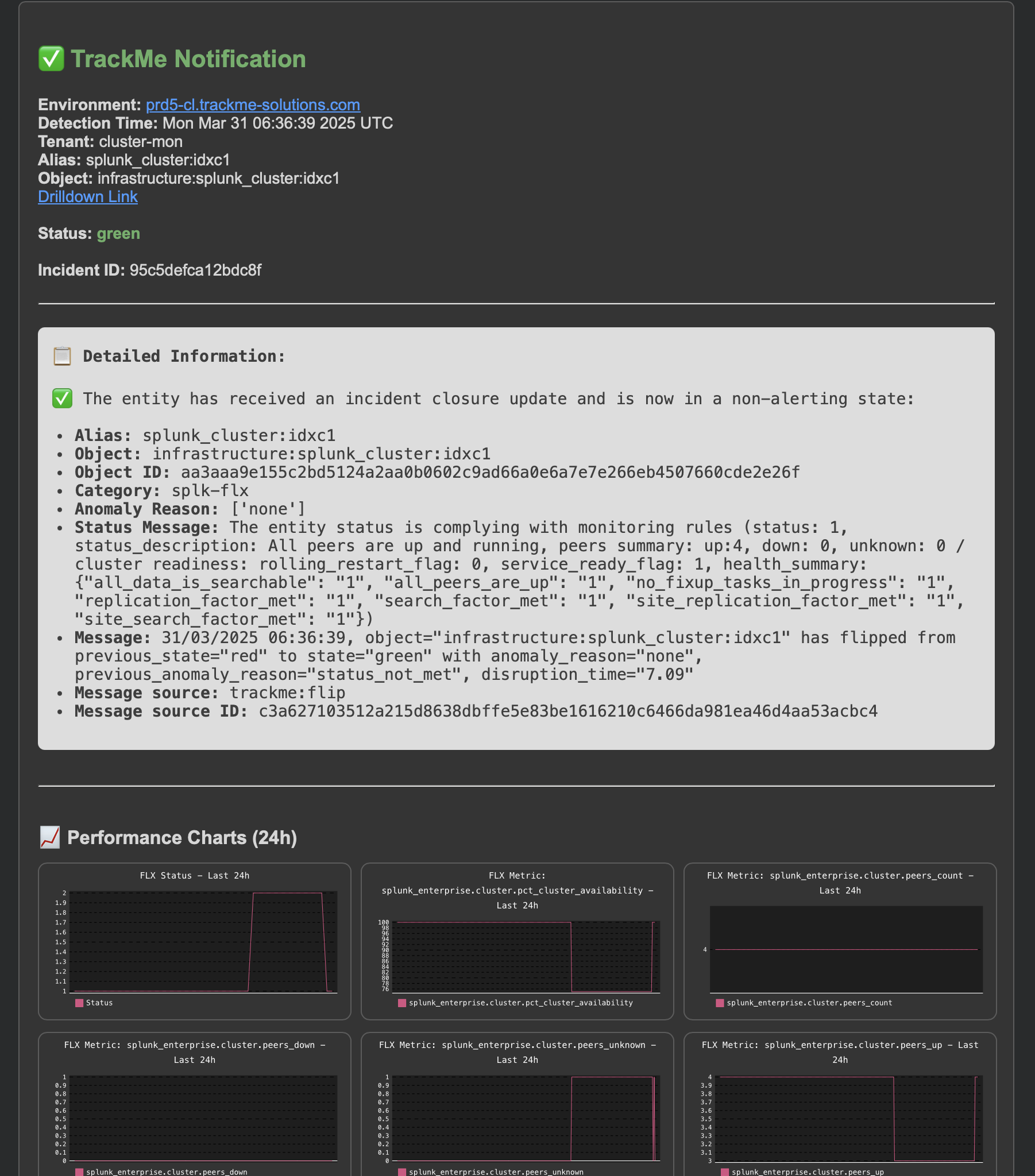

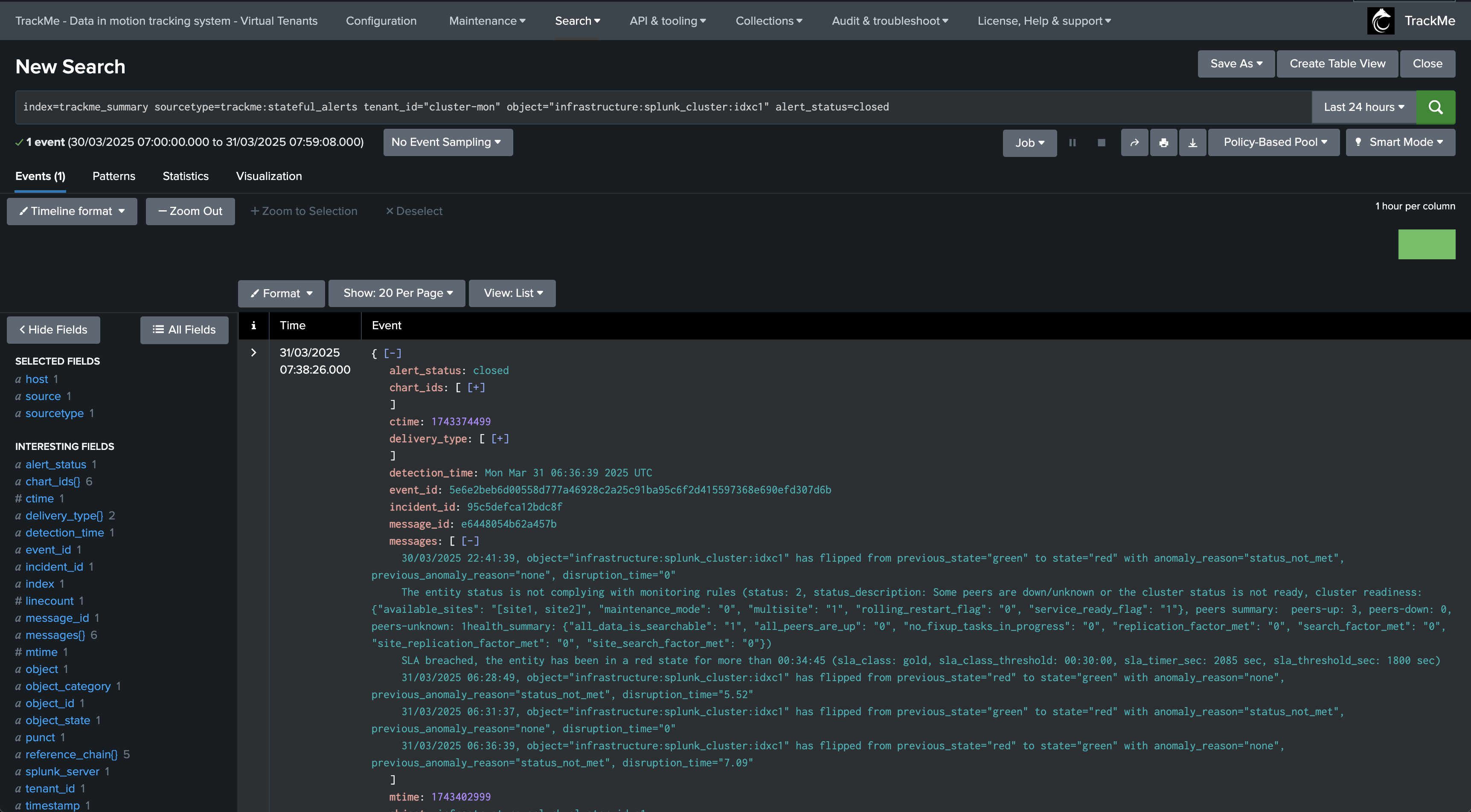

Example: Splunk Cluster Monitoring with splk-flx

In this example, we monitor a Splunk cluster and Splunk indexers through the Flex object component.

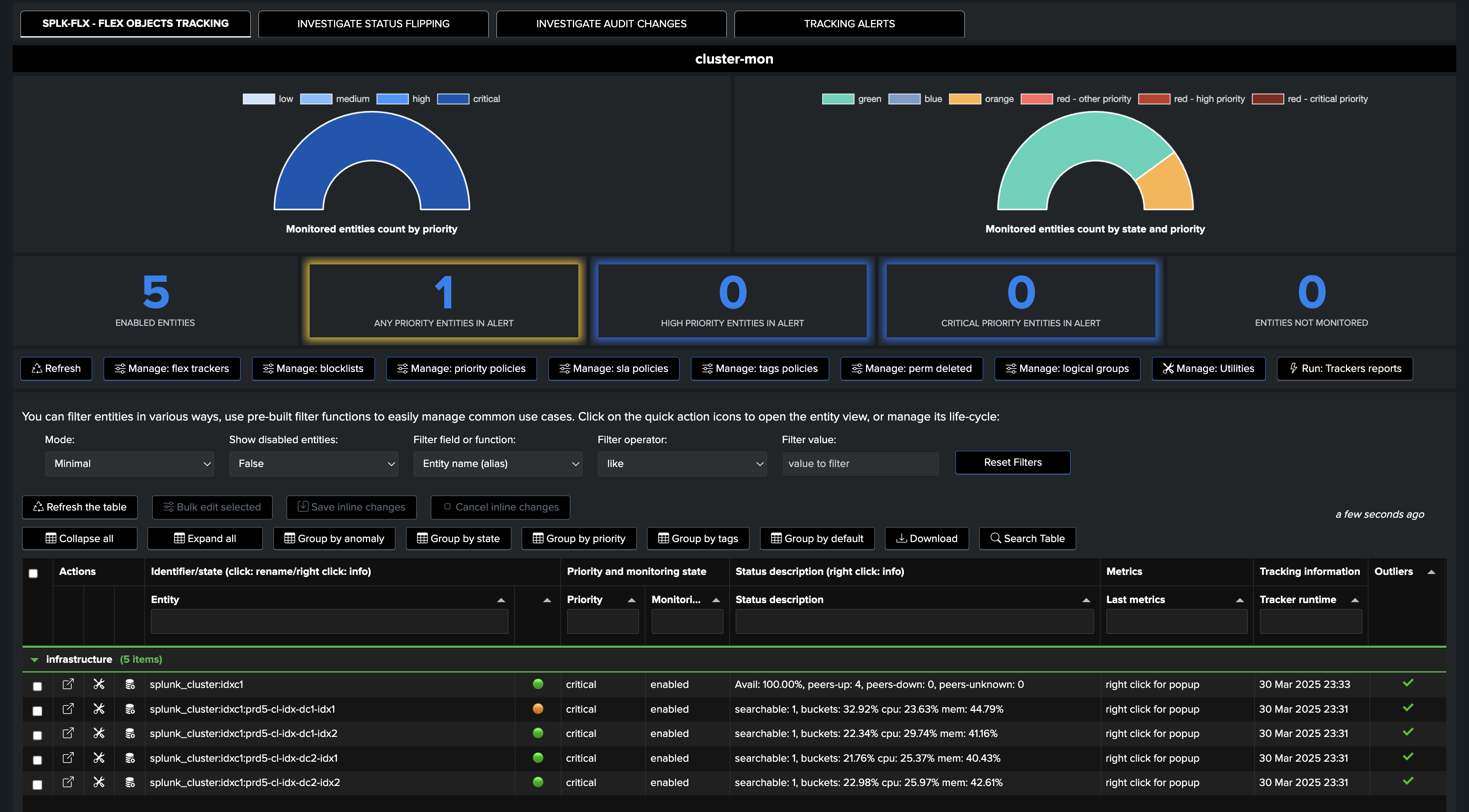

Initial status: the cluster global status is healthy and all peers are up and running (although one peer is reported as orange due to bucket imbalance):

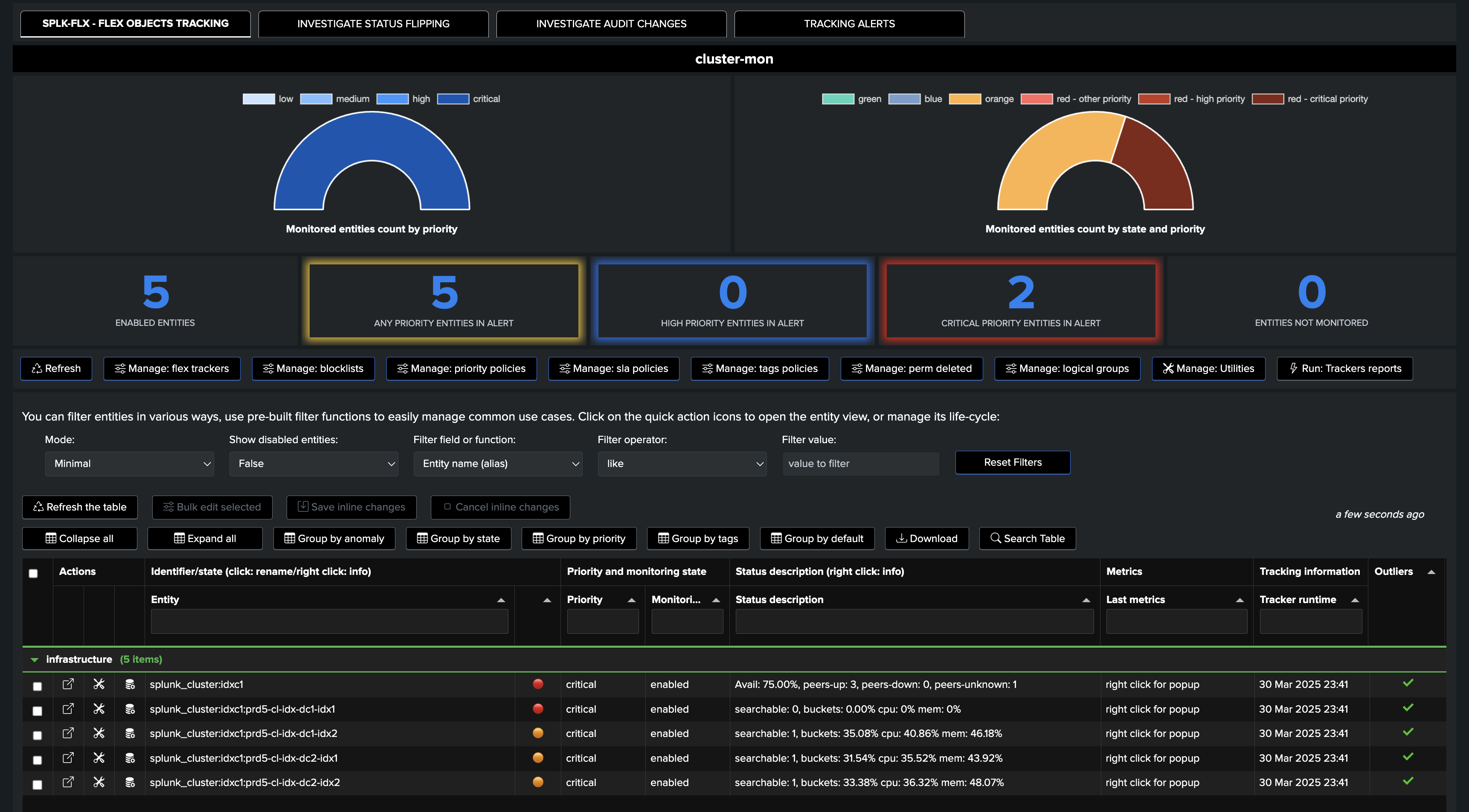

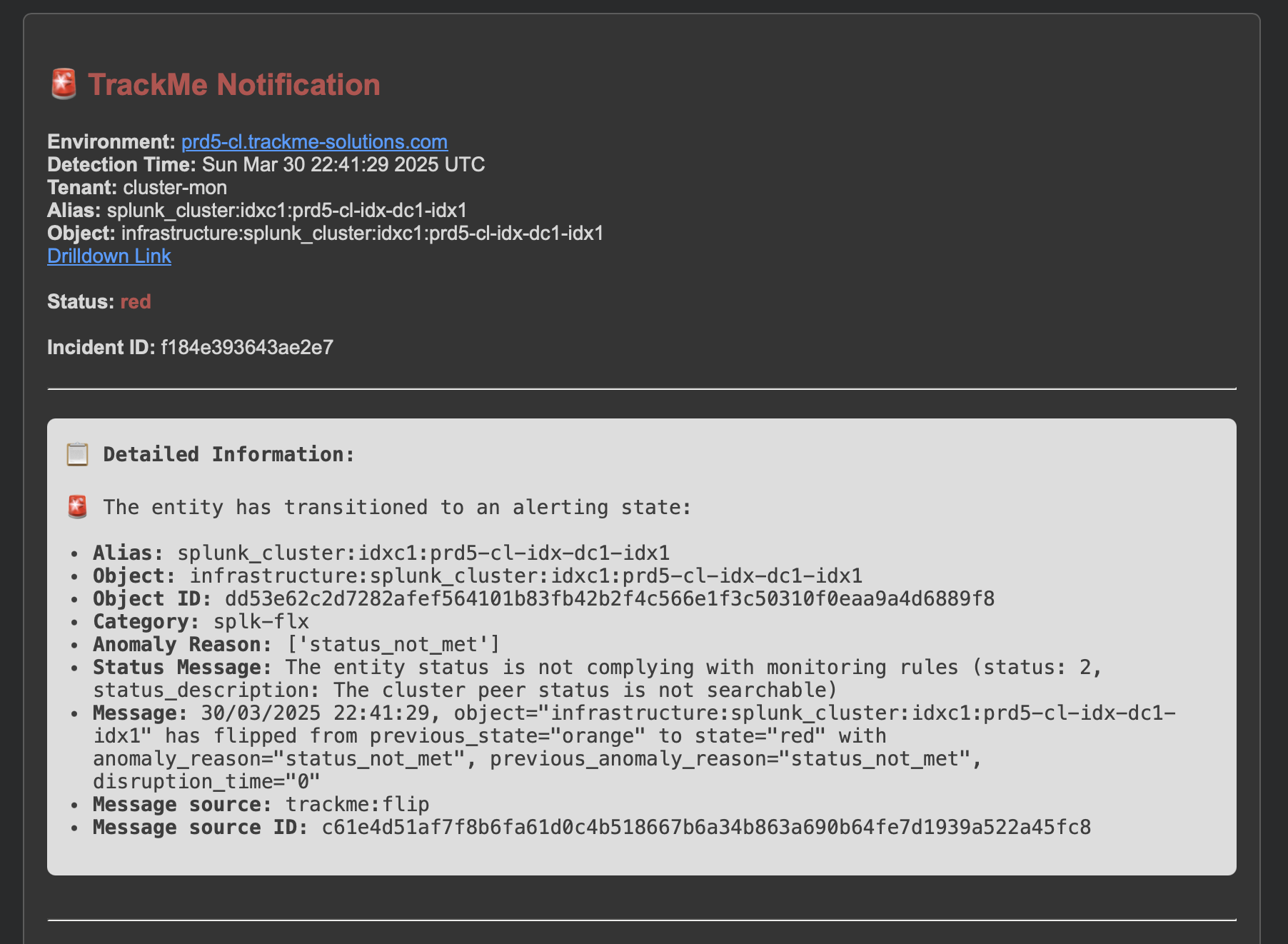

An incident affects an indexer; the global cluster status as well as the indexer turn to an alerting state:

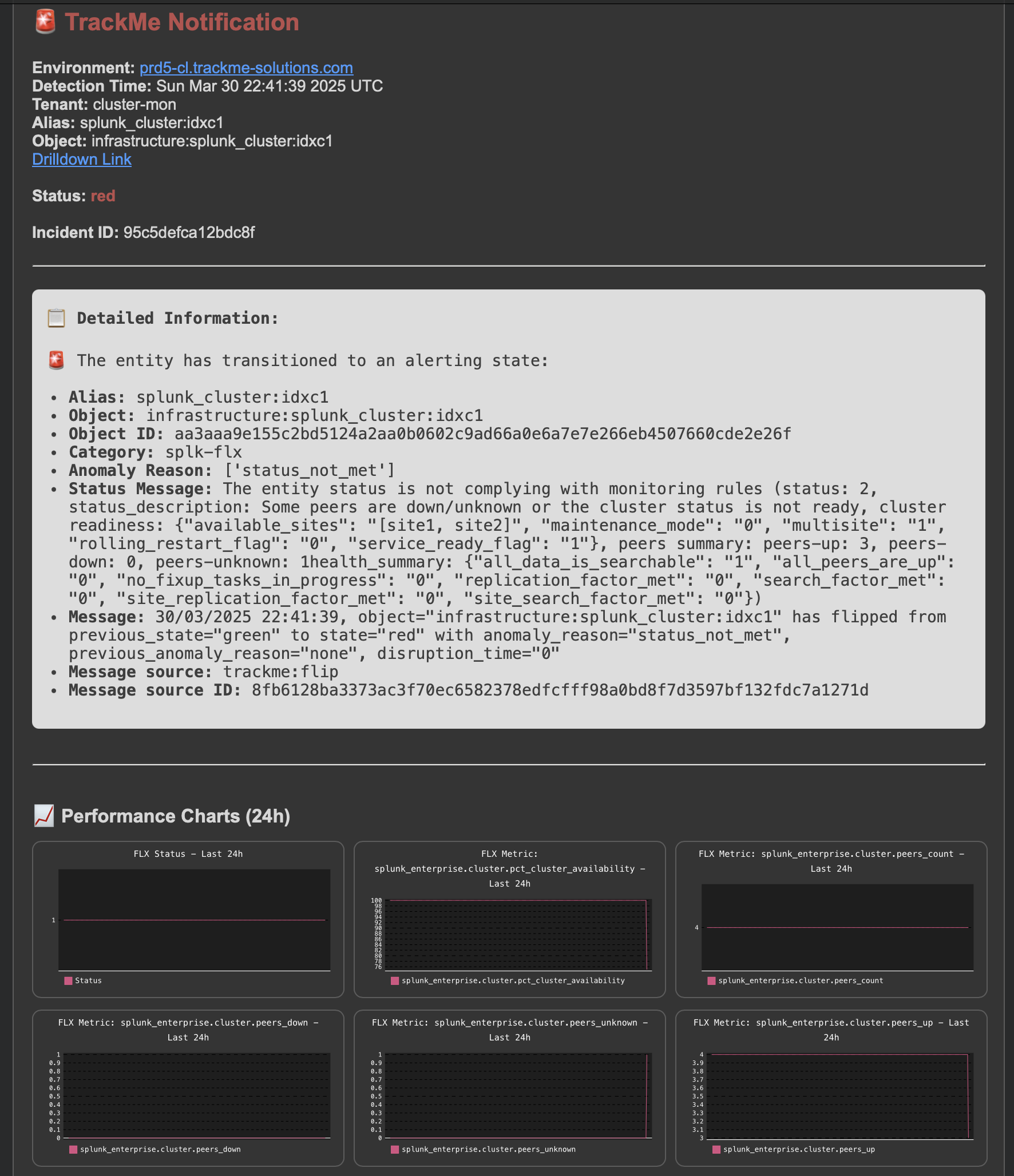

A first notification is generated for the cluster status:

An opening incident event is created:

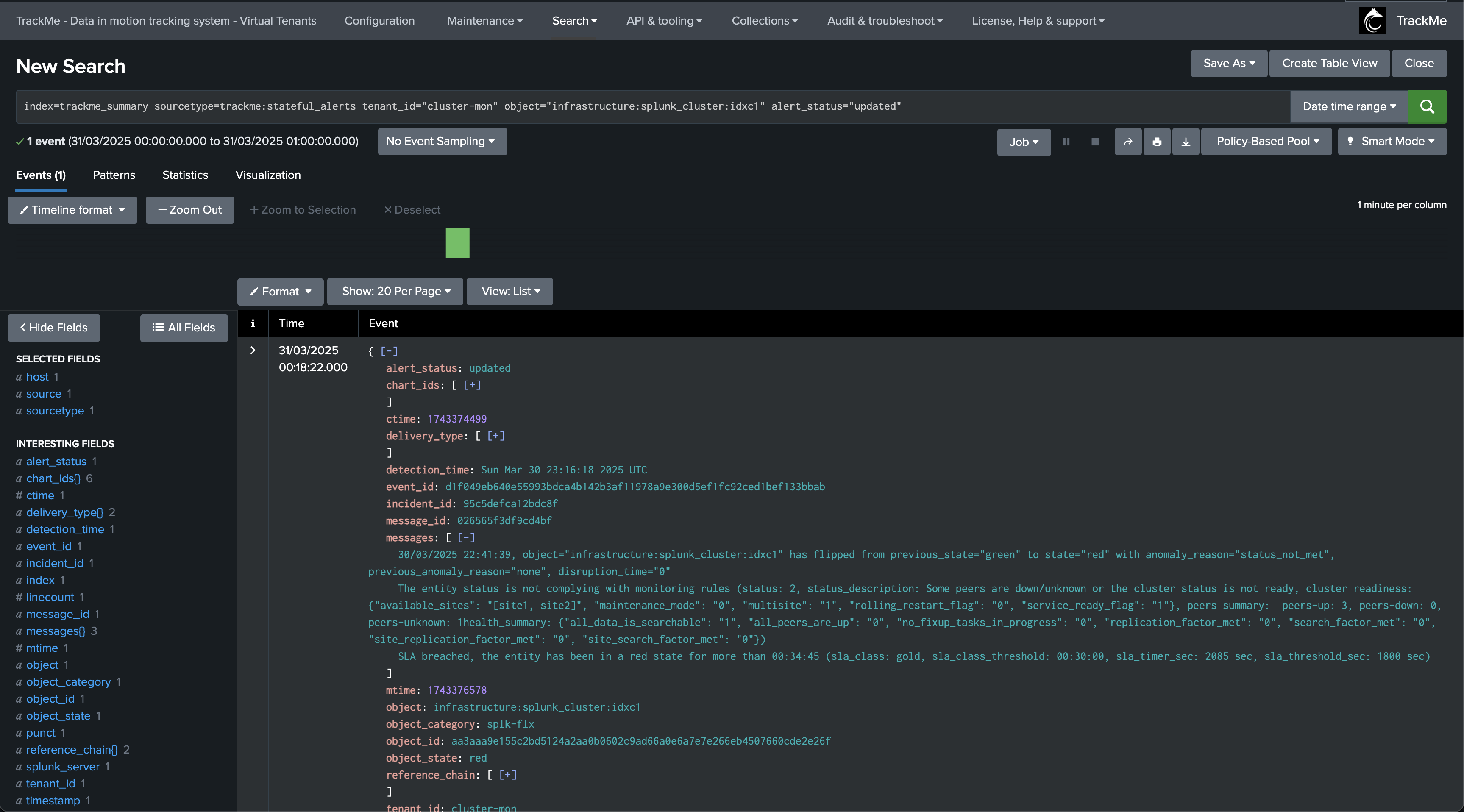

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="cluster-mon" object="infrastructure:splunk_cluster:idxc1" alert_status="opened"

Notifications are also generated for the indexer:

An update incident event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="cluster-mon" object="infrastructure:splunk_cluster:idxc1" alert_status="updated"

As the incident continues, several notifications can be generated, for instance when the SLA is breached:

An update incident event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="cluster-mon" object="infrastructure:splunk_cluster:idxc1" alert_status="updated"

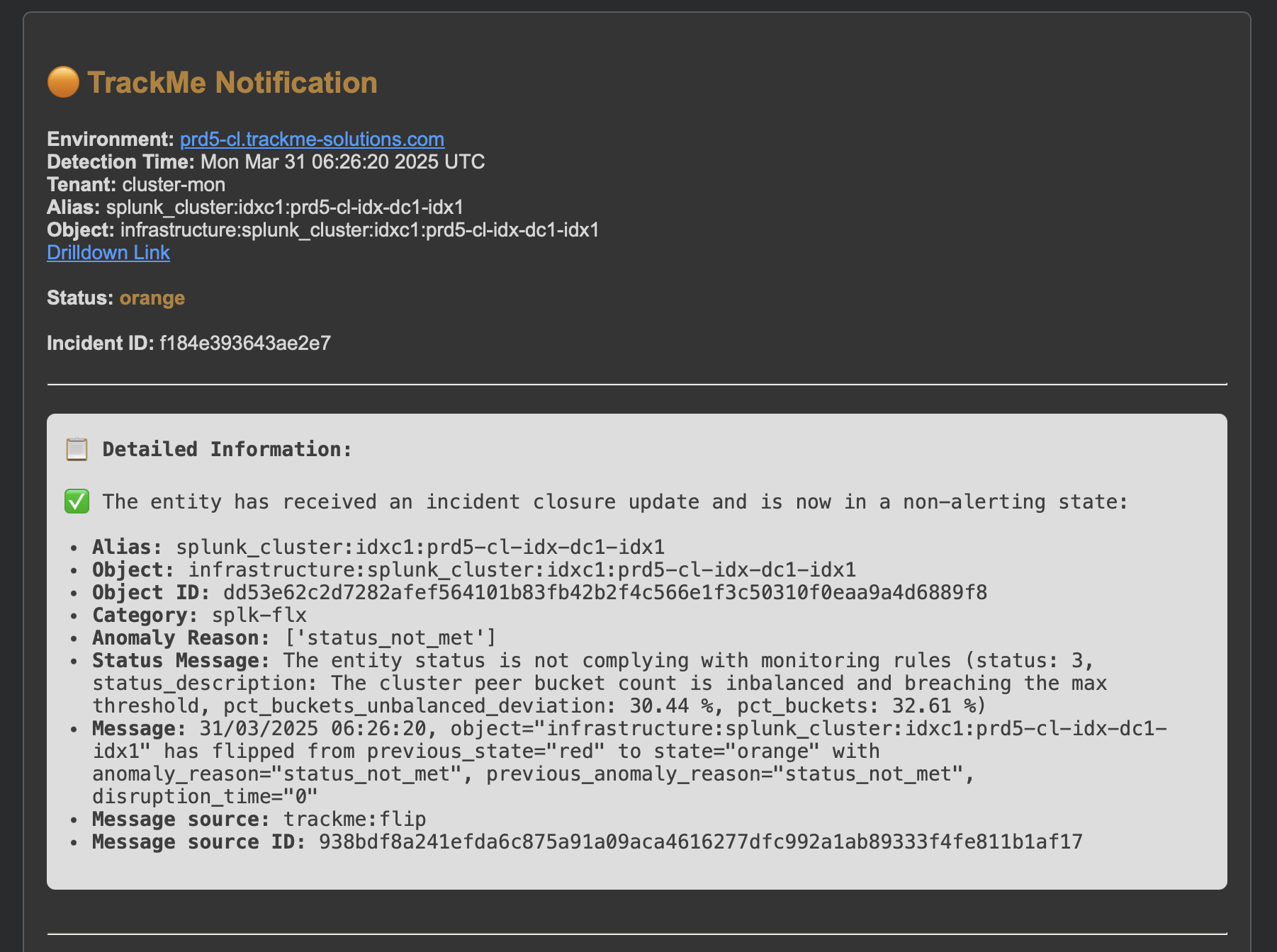

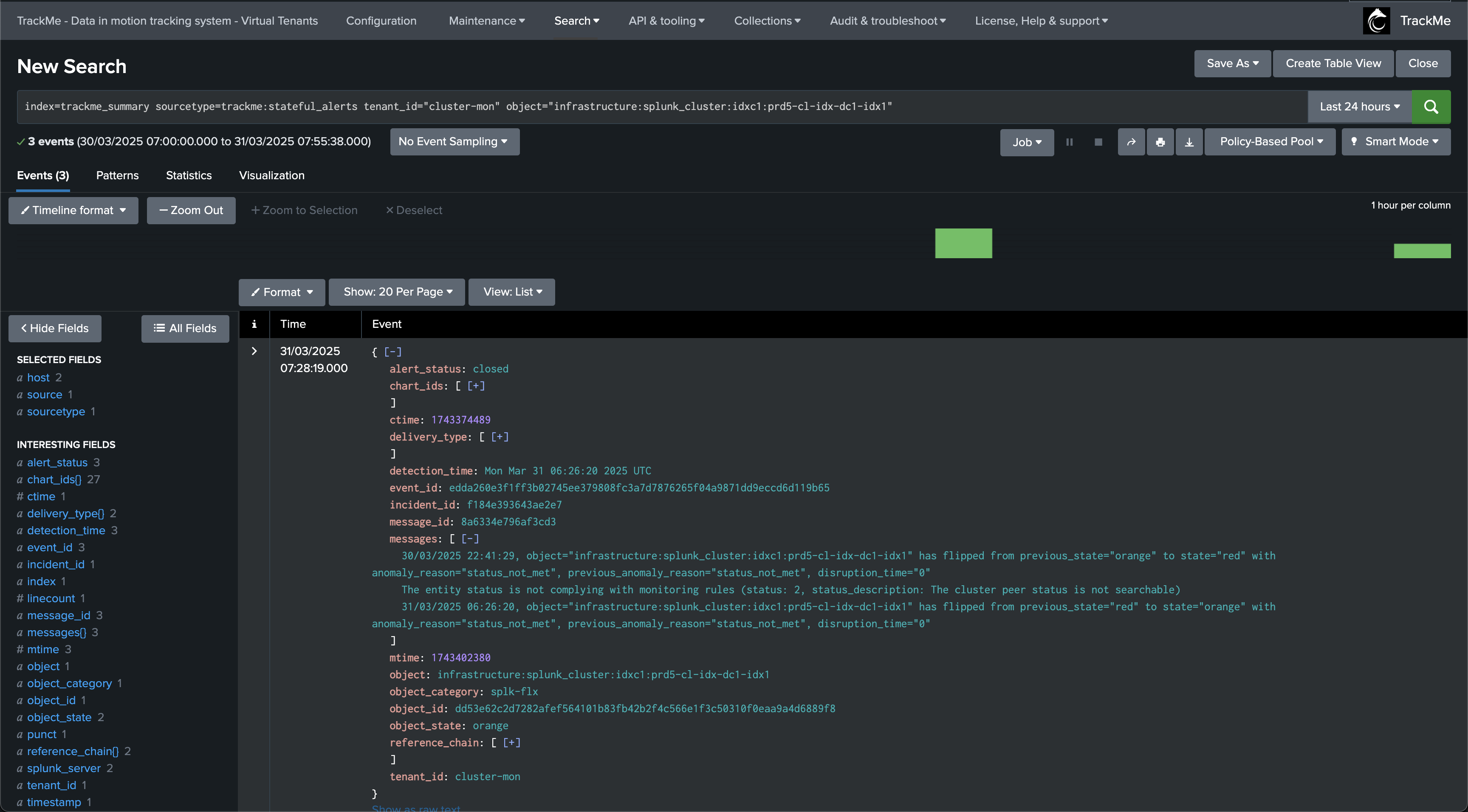

At some point, the issue on our indexer is resolved; the entity returns to a non-alerting state (here orange, due to bucket imbalance) and the incident is closed:

An incident closure event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="cluster-mon" object="infrastructure:splunk_cluster:idxc1:prd5-cl-idx-dc1-idx1" alert_status="closed"

Finally, after some time, our indexer cluster catches up, the entity returns to a healthy state, and the incident is closed:

A final incident closure event is created:

index=trackme_summary sourcetype=trackme:stateful_alerts tenant_id="cluster-mon" object="infrastructure:splunk_cluster:idxc1" alert_status=closed

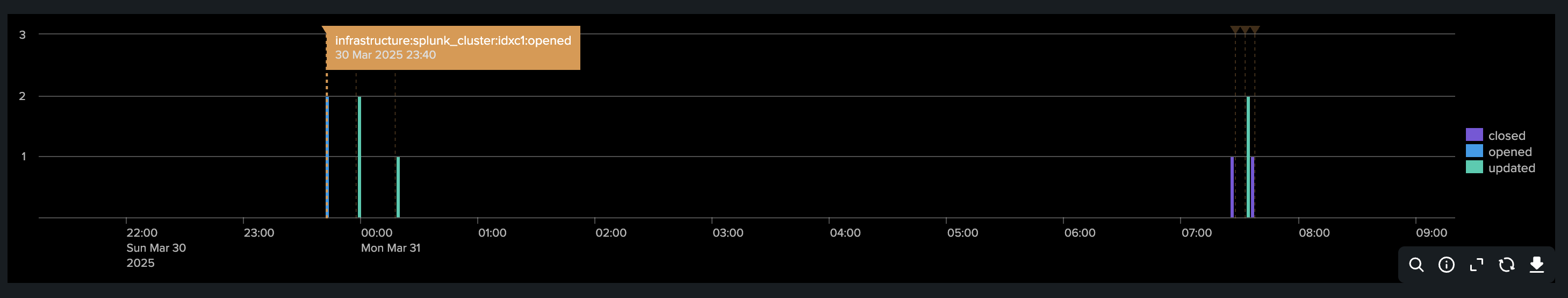

Visualizing the incident life cycle in a Splunk chart with annotations:

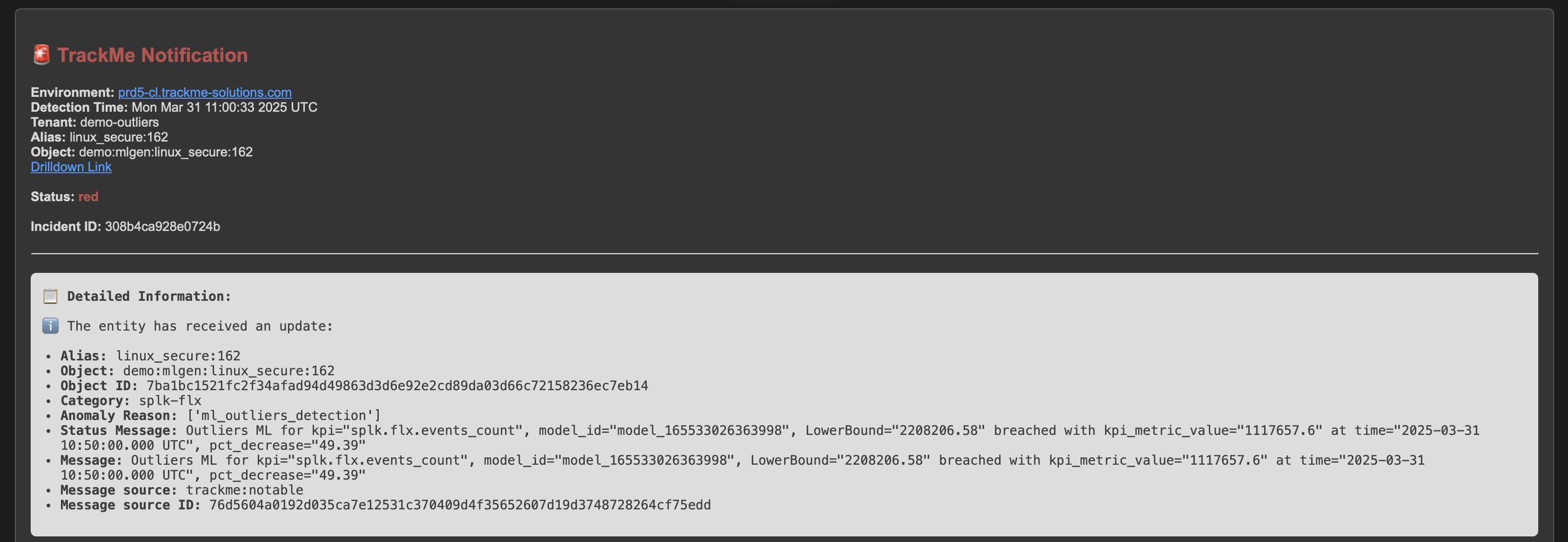

Example of a notification due to Machine Learning Outliers detection:

Below is an example of a notification due to Machine Learning Outliers detection:

Note: TrackMe will automatically add a data sampling related chart in the notification, to help you visualise the issue.

Example of a notification due to a feed data quality issue (data sampling):

Below is an example of a notification due to a feed data quality issue (data sampling):

Note: TrackMe will automatically add a data sampling related chart in the notification, to help you visualise the issue.

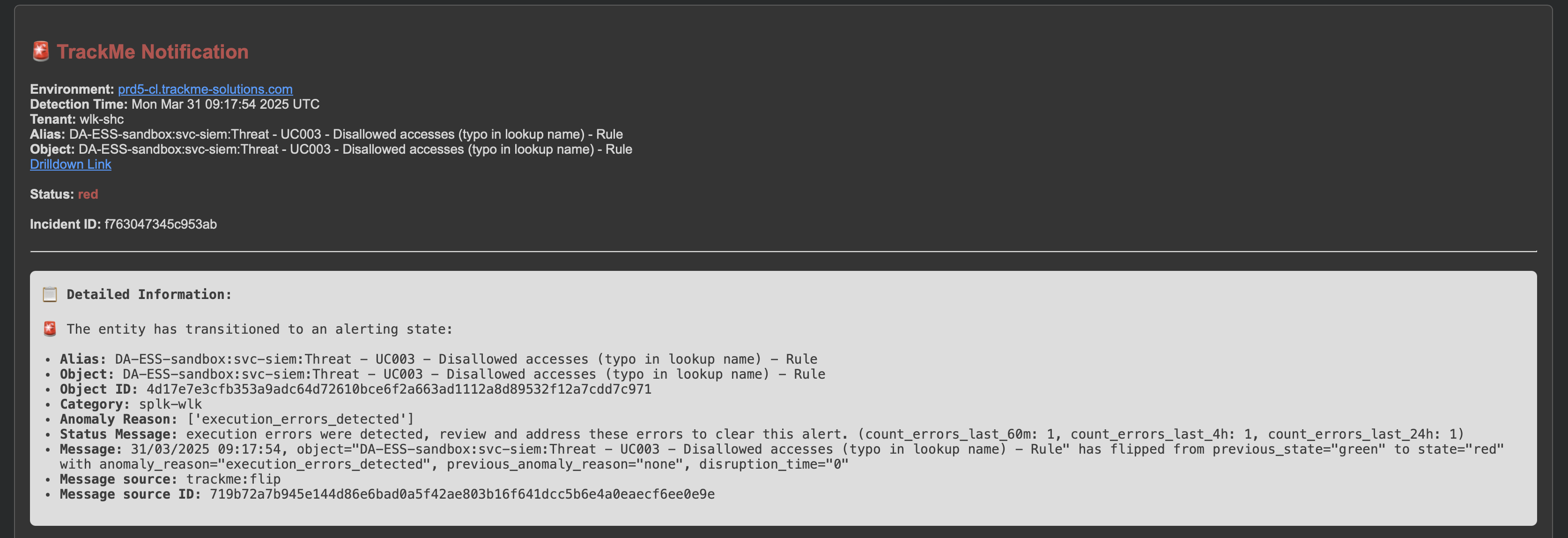

Example of a notification for the Workload component (Splunk scheduled searches and alerts monitoring):

Below is an example of a notification for the Workload component (splk-wlk):

Note: For this component, TrackMe will automatically generate charts per metrics, depending on metrics available in the entity.

Legacy Alerting with Notables or direct alerts

Legacy Alerting is based on Notables or direct alerts:

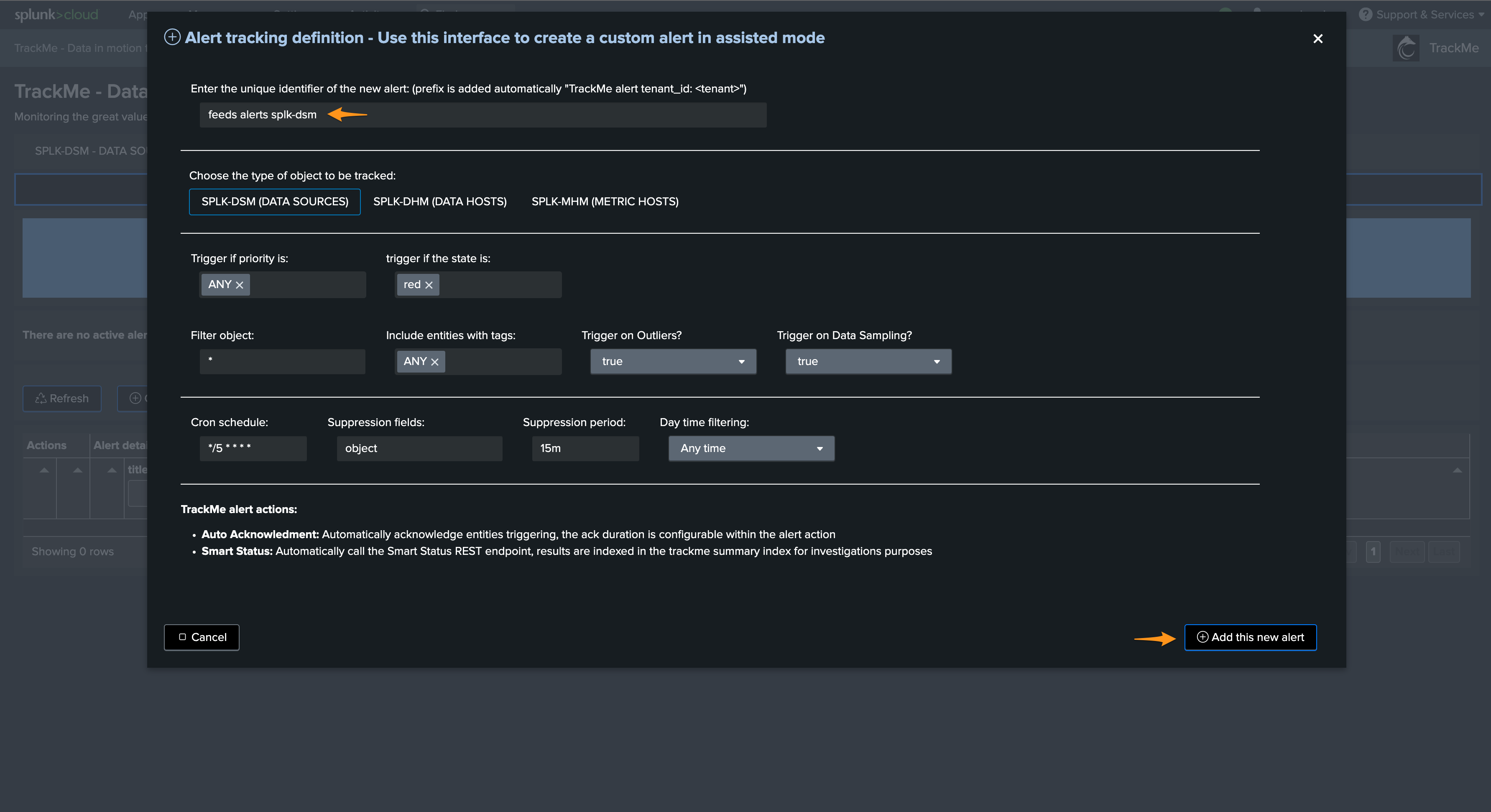

Creating an alert in TrackMe

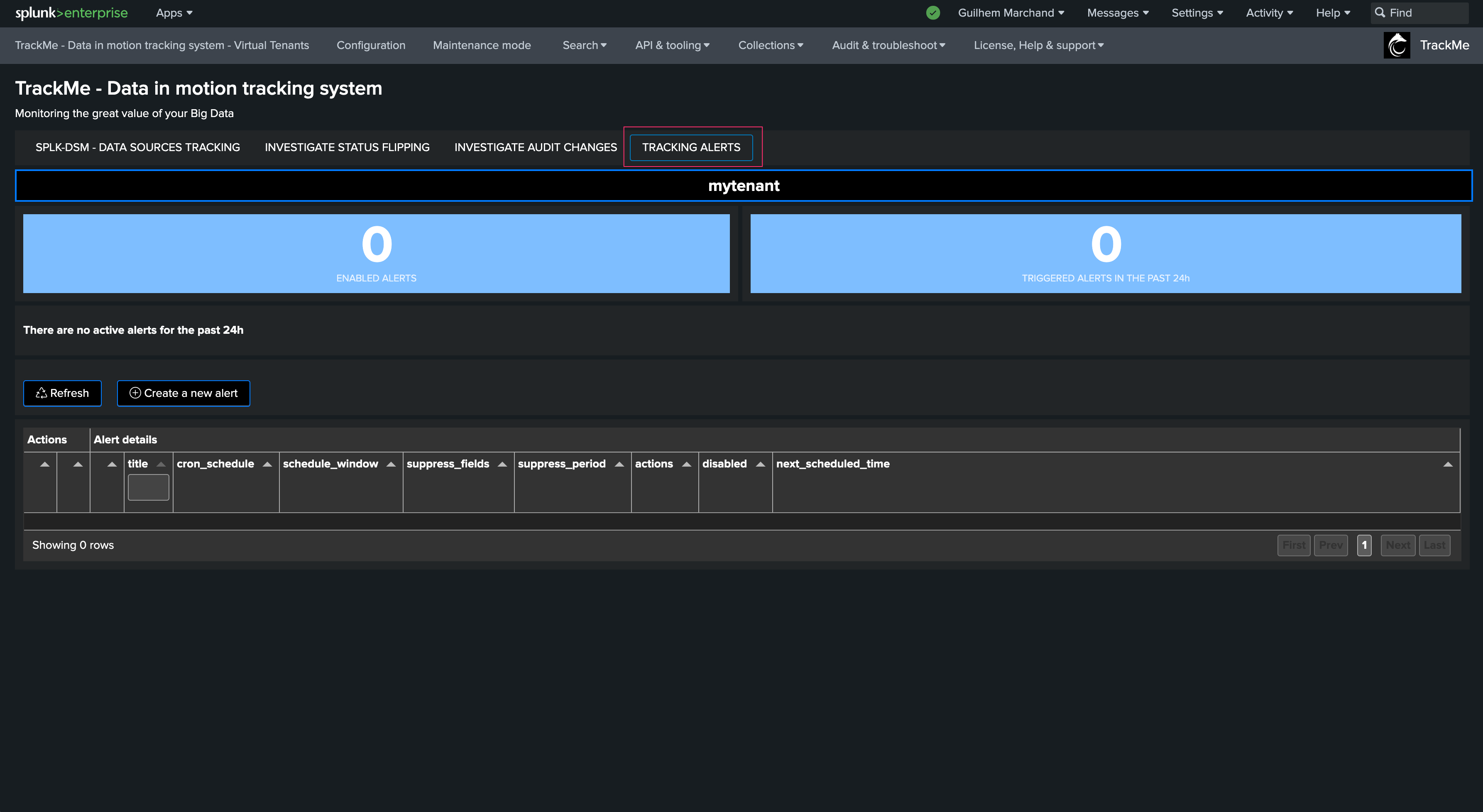

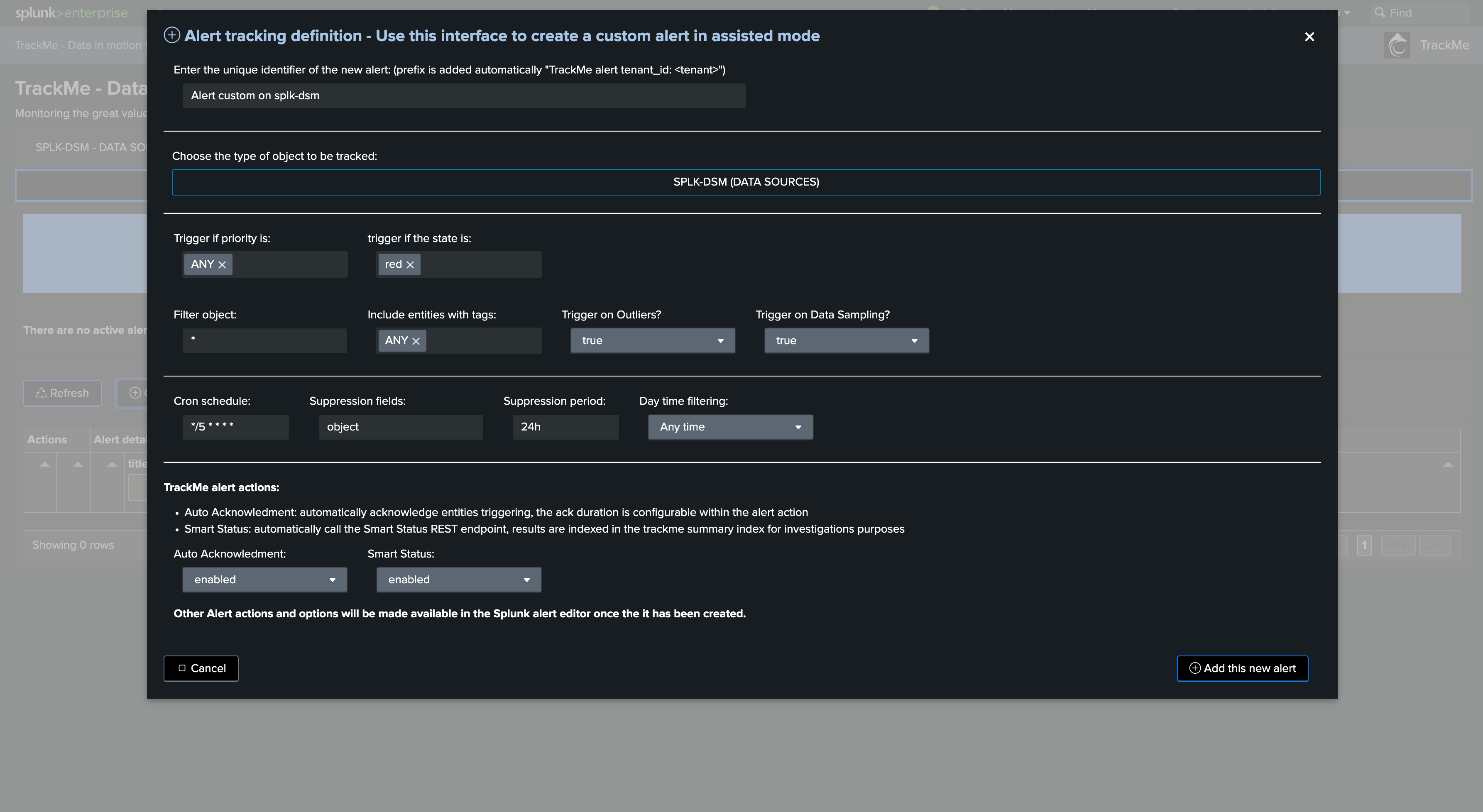

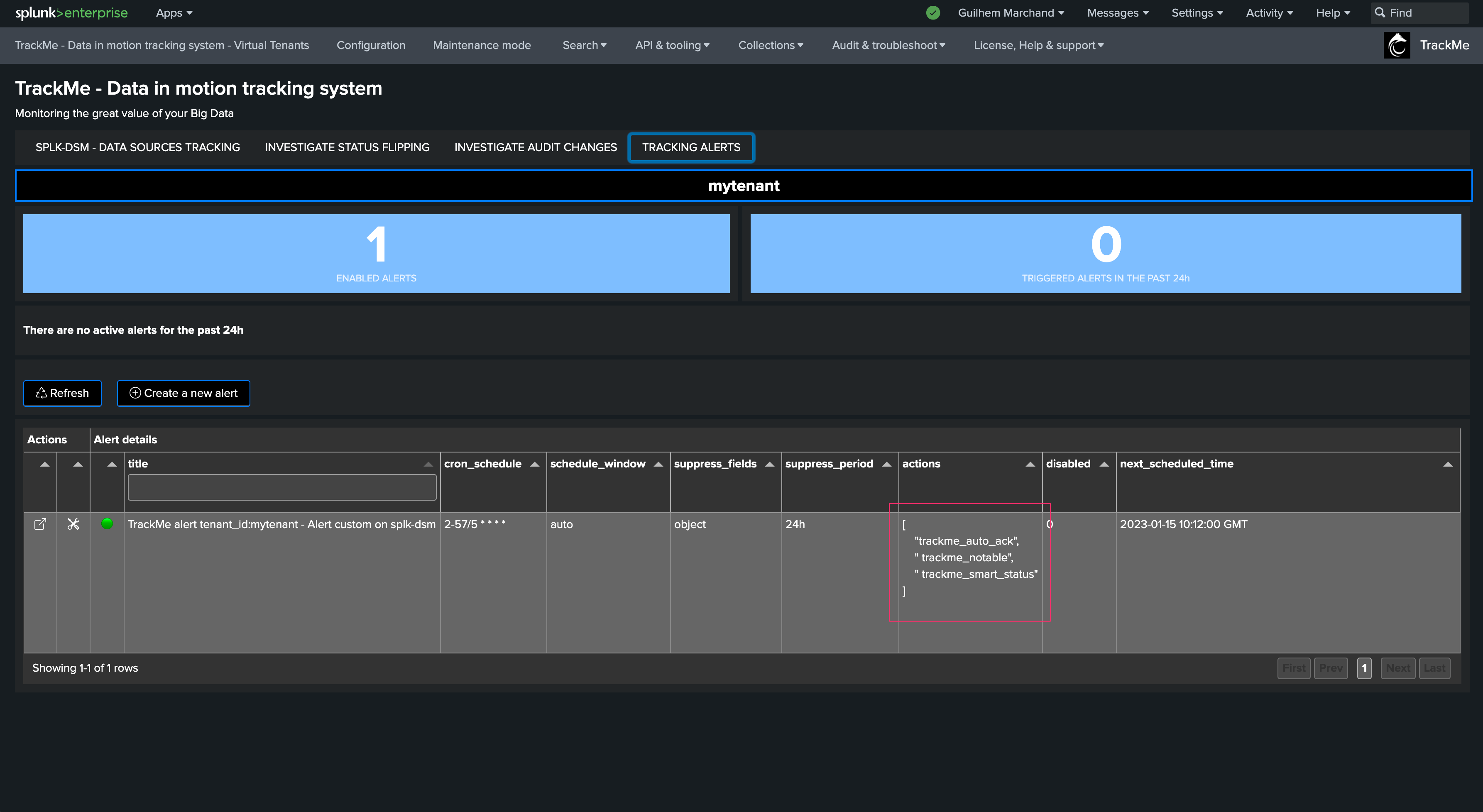

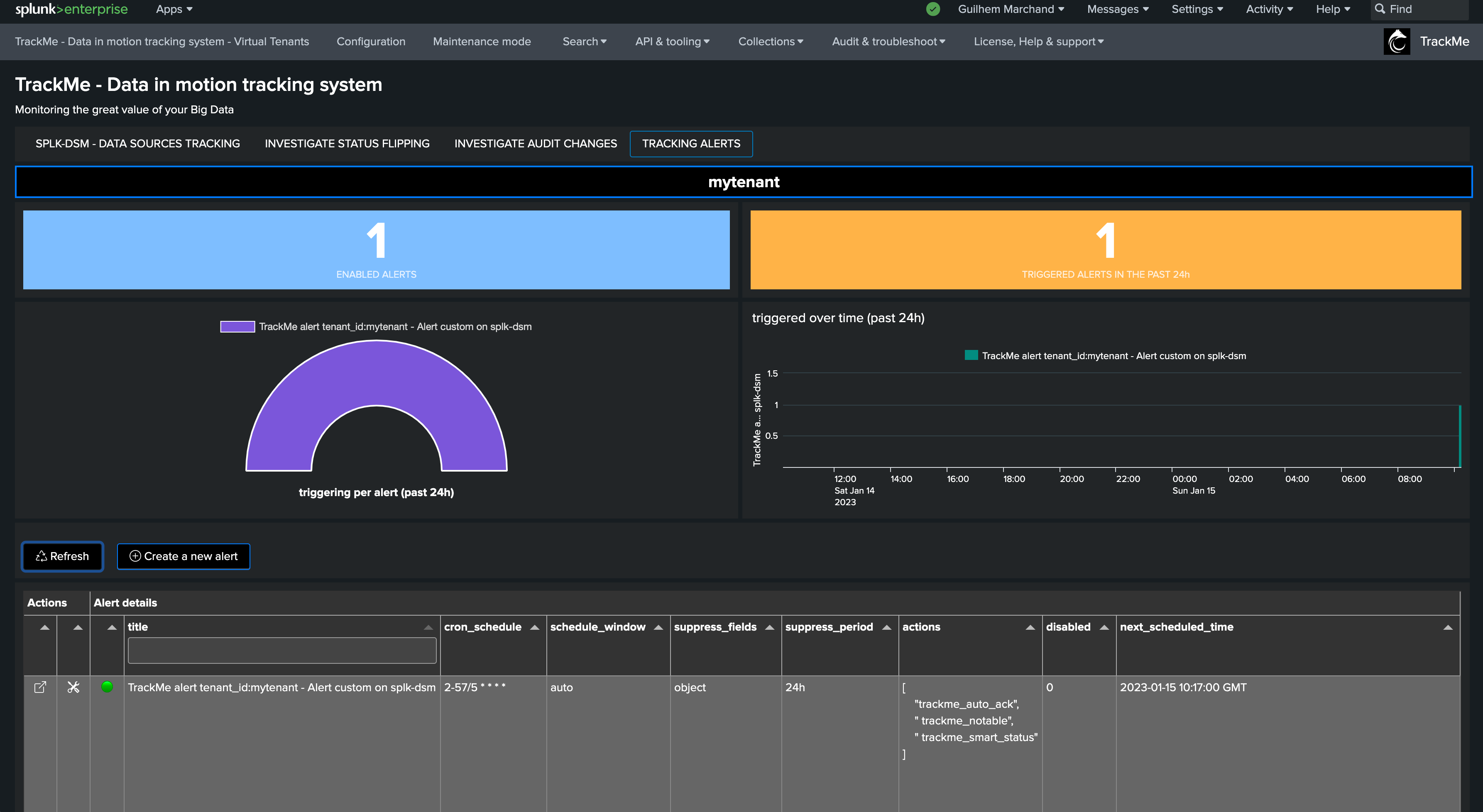

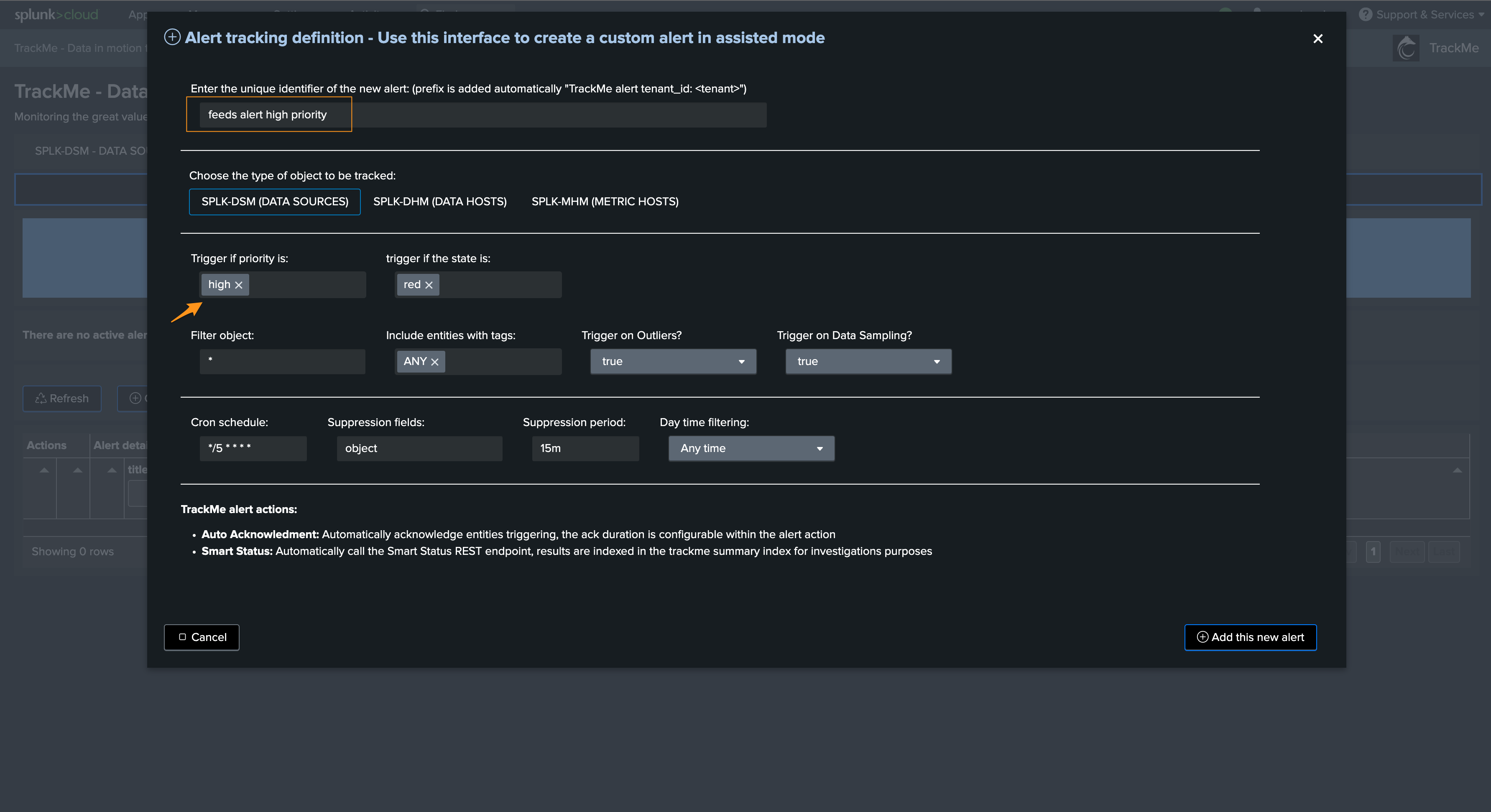

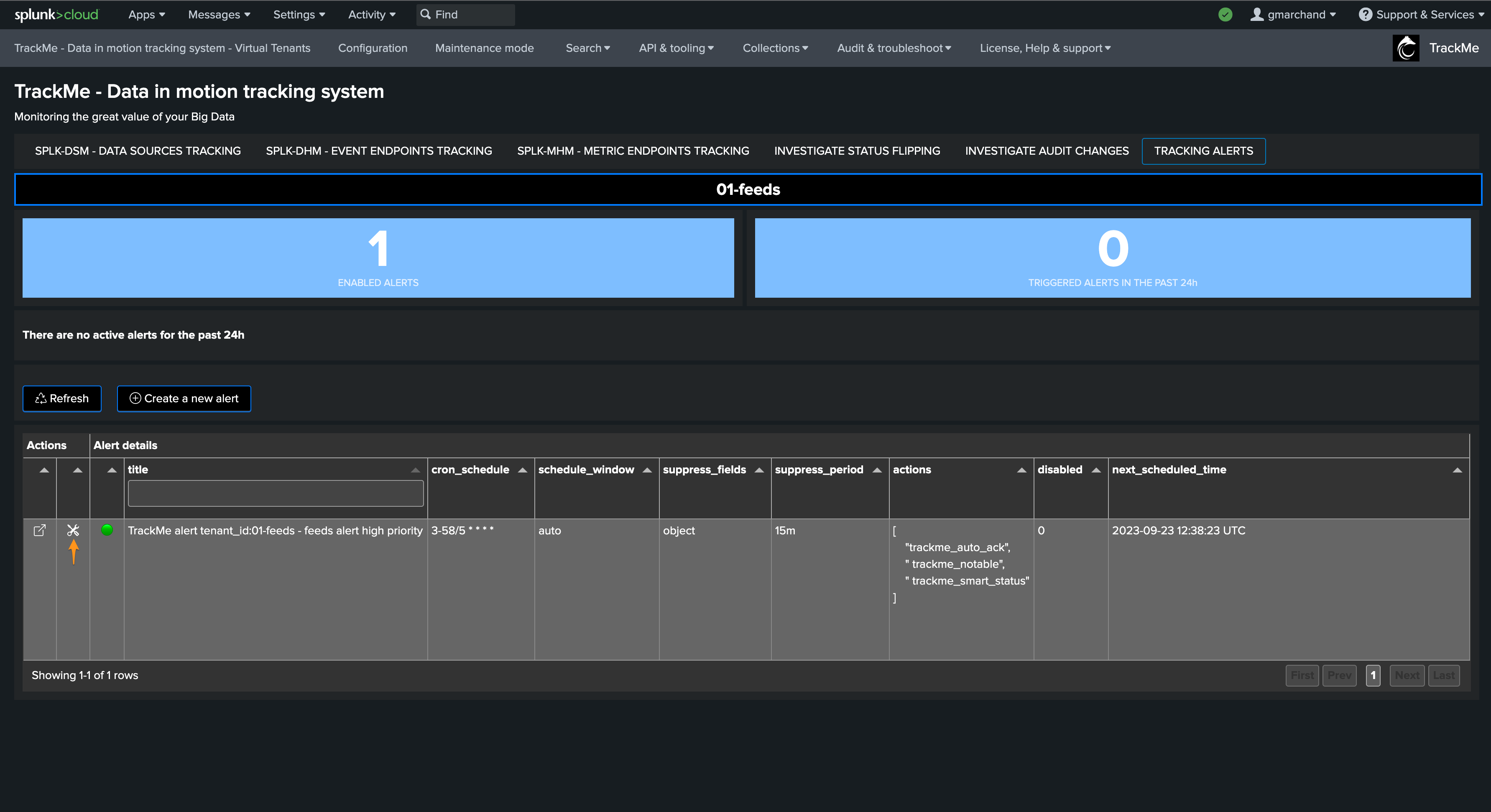

You can create a new alert in the scope of any Virtual Tenant:

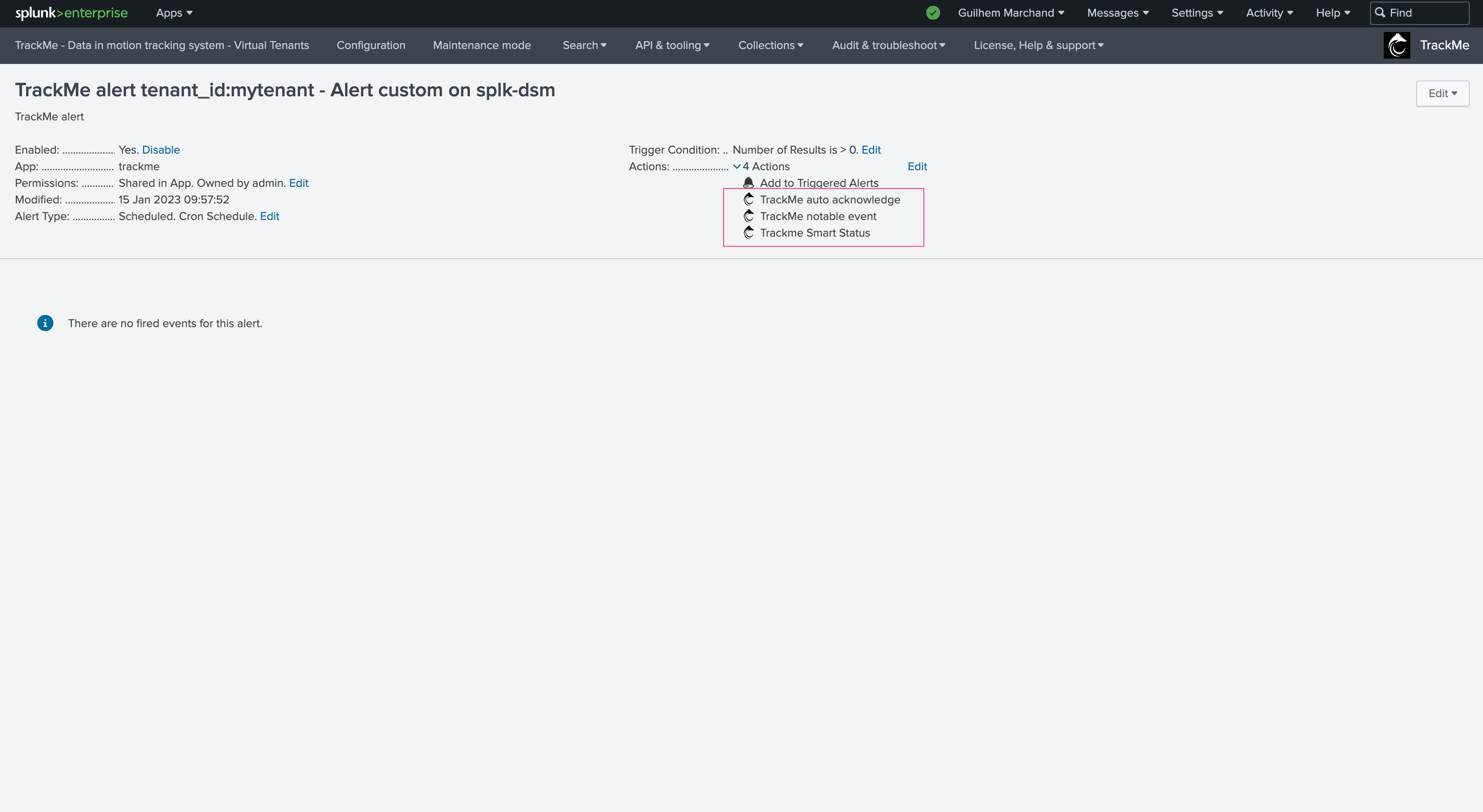

Available options may differ per TrackMe component, for instance with splk-dsm:

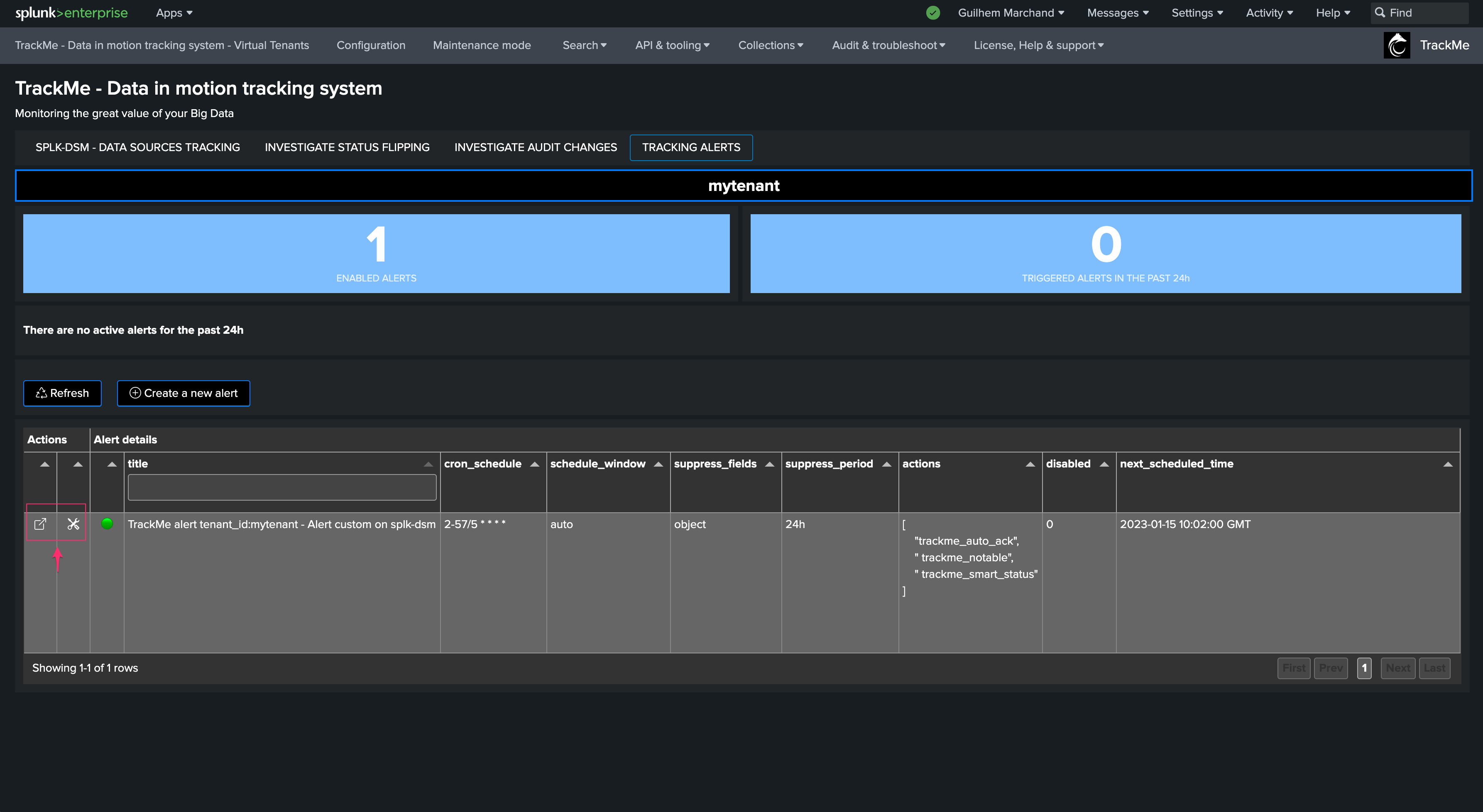

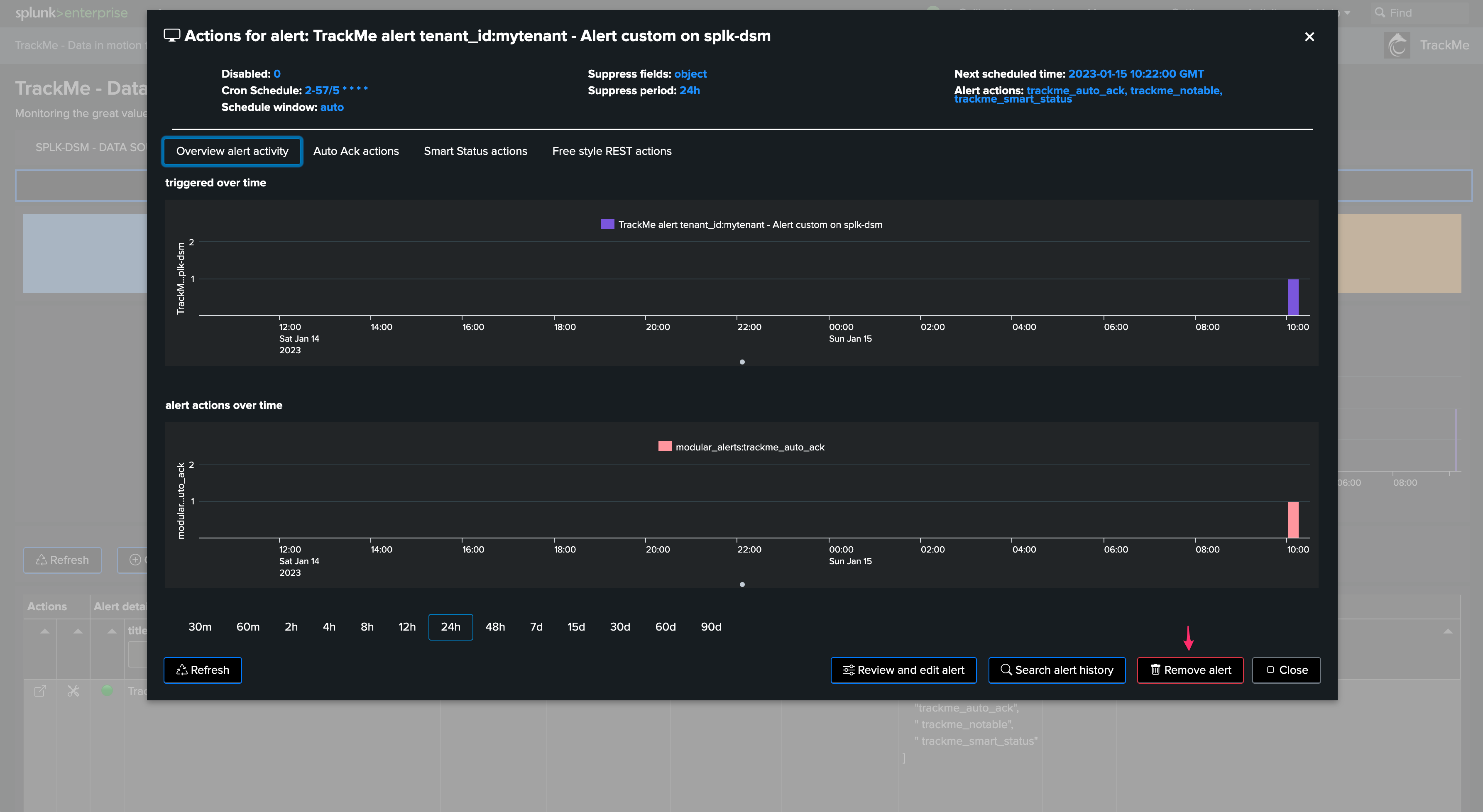

Once created, the alert appears in the alert user interface, and can be managed from this screen:

TrackMe modular alert actions

TrackMe has several alert actions that are leveraged when creating alerts:

TrackMe Notable event: This alert action generates a JSON event in the Virtual Tenant notable index summarizing the status of an entity when the alert triggers

TrackMe Auto Acknowledgment: This alert action automatically acknowledges the entity in TrackMe’s workflow for a given period of time

TrackMe SmartStatus: This alert action performs automated investigations when an alert triggers for one or more entities, to improve and speed up root cause identification and add context to an issue detected by TrackMe

The TrackMe Notable event is a mandatory alert action; the others can be disabled during the creation of the alert if you wish to do so.

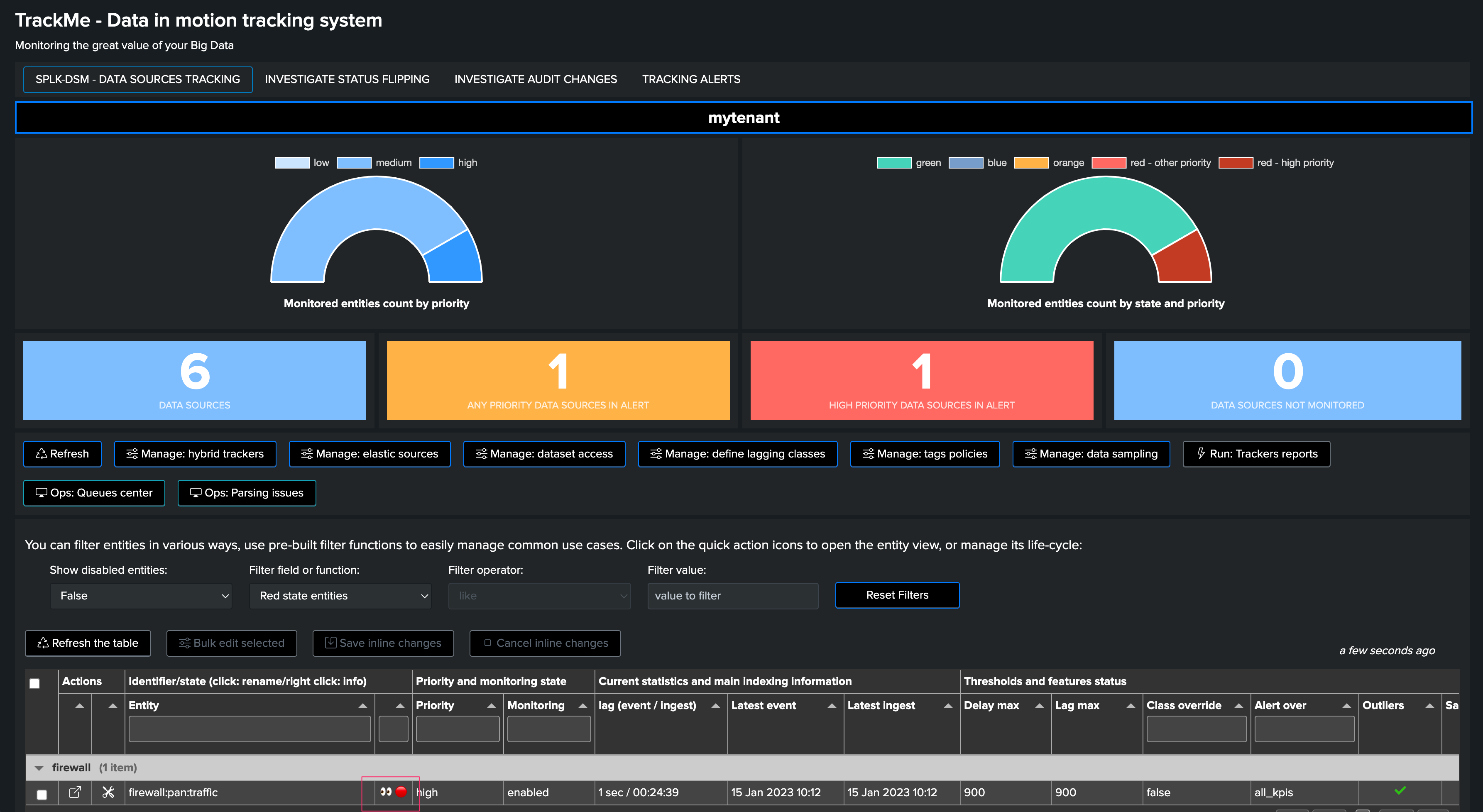

Alerts Triggering

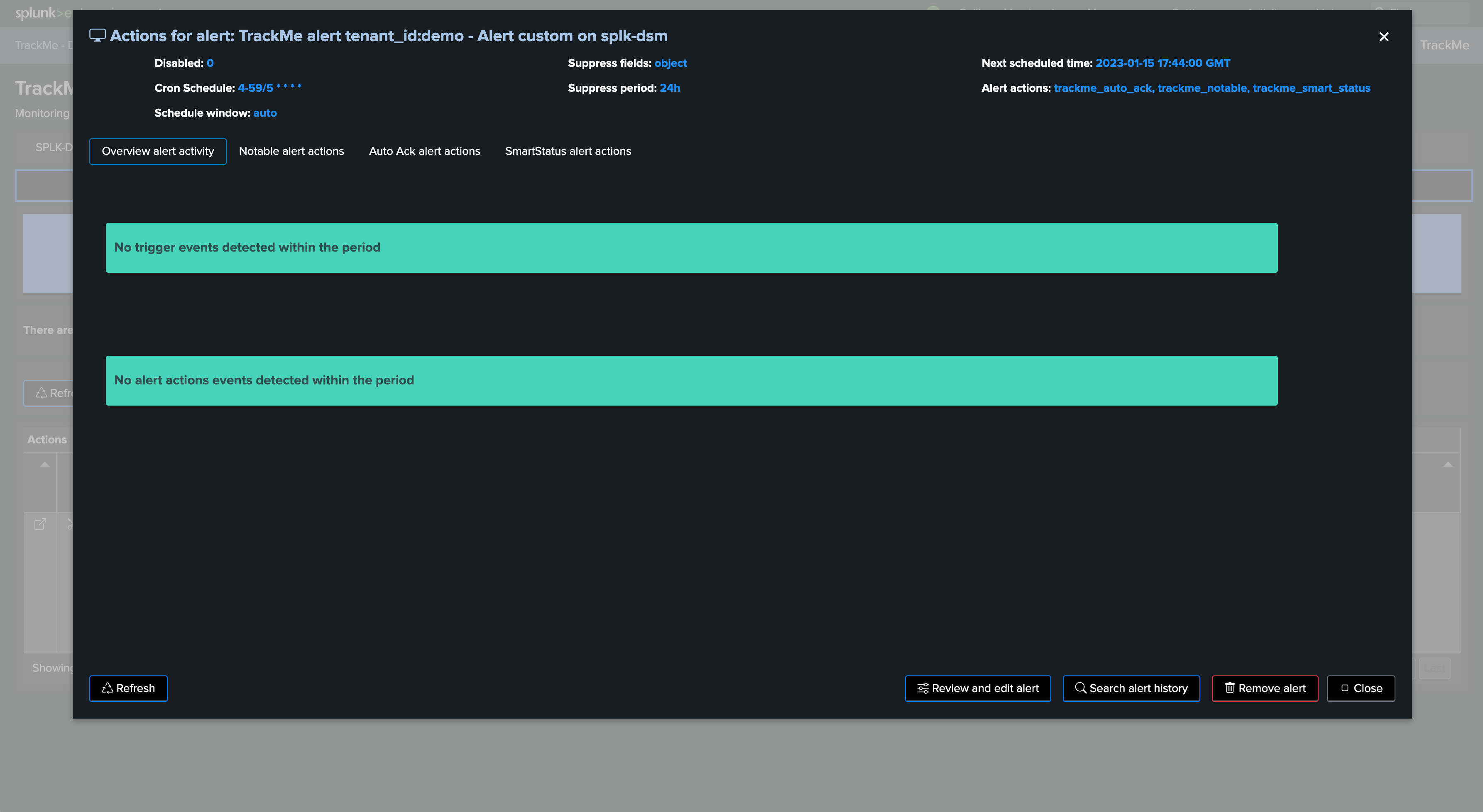

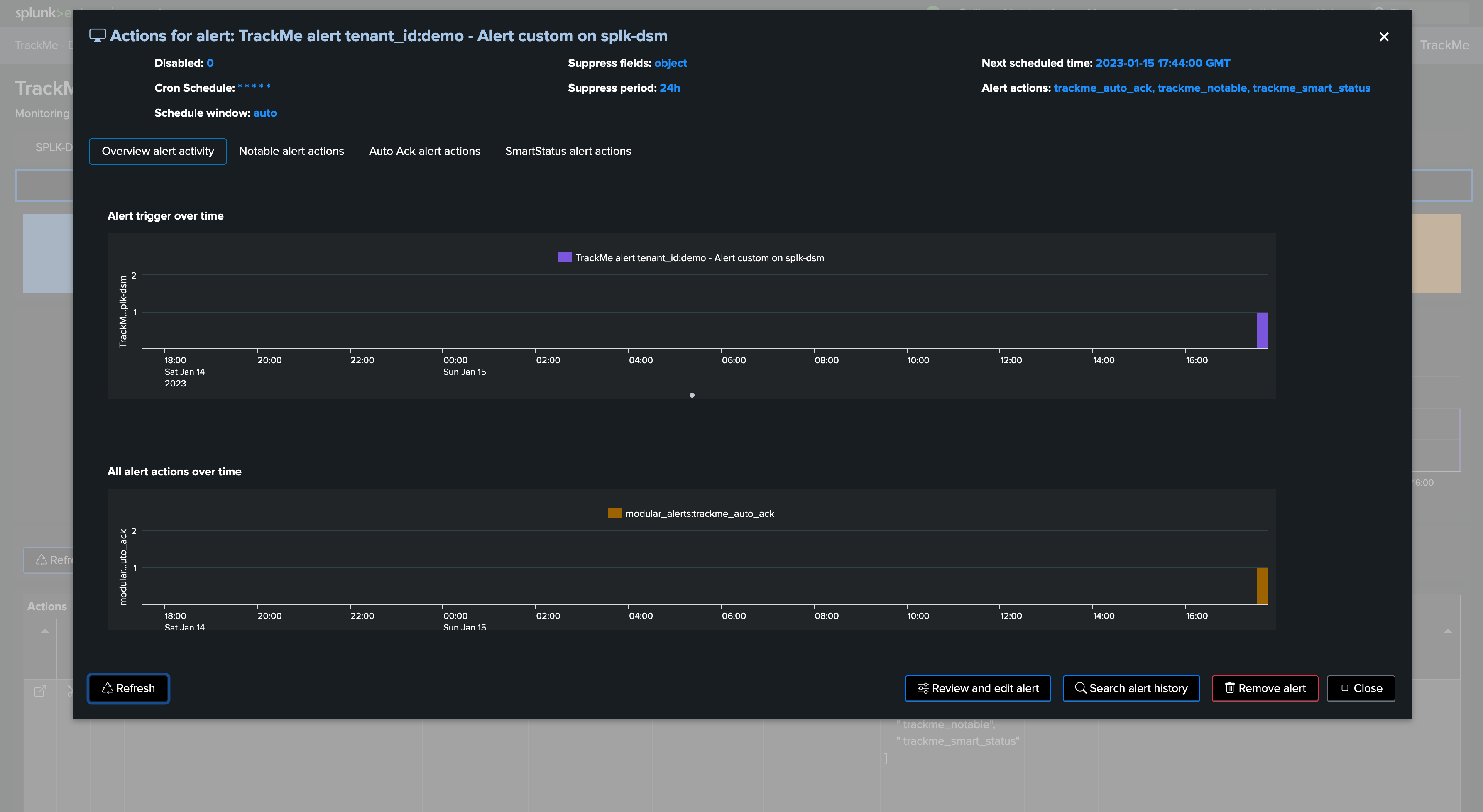

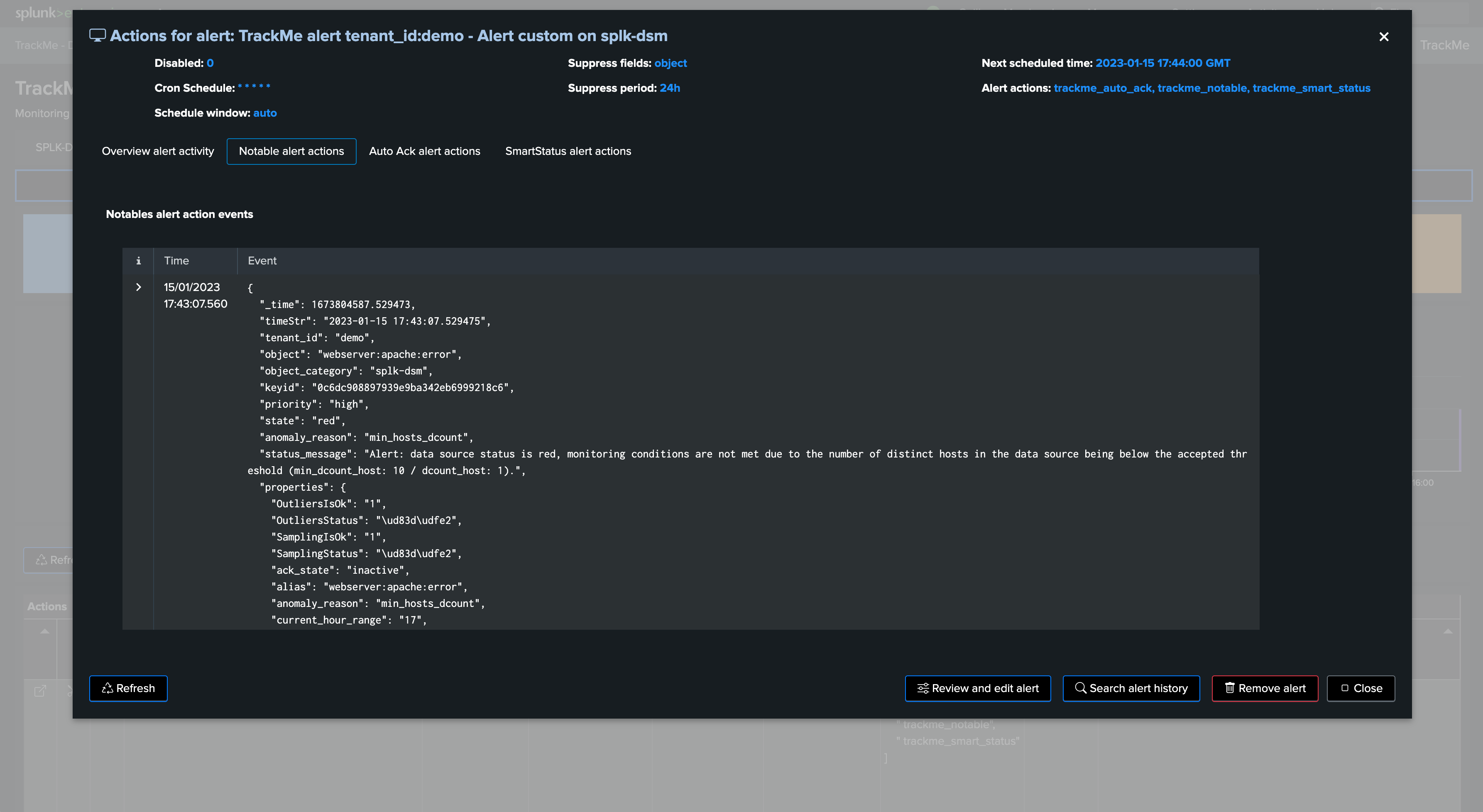

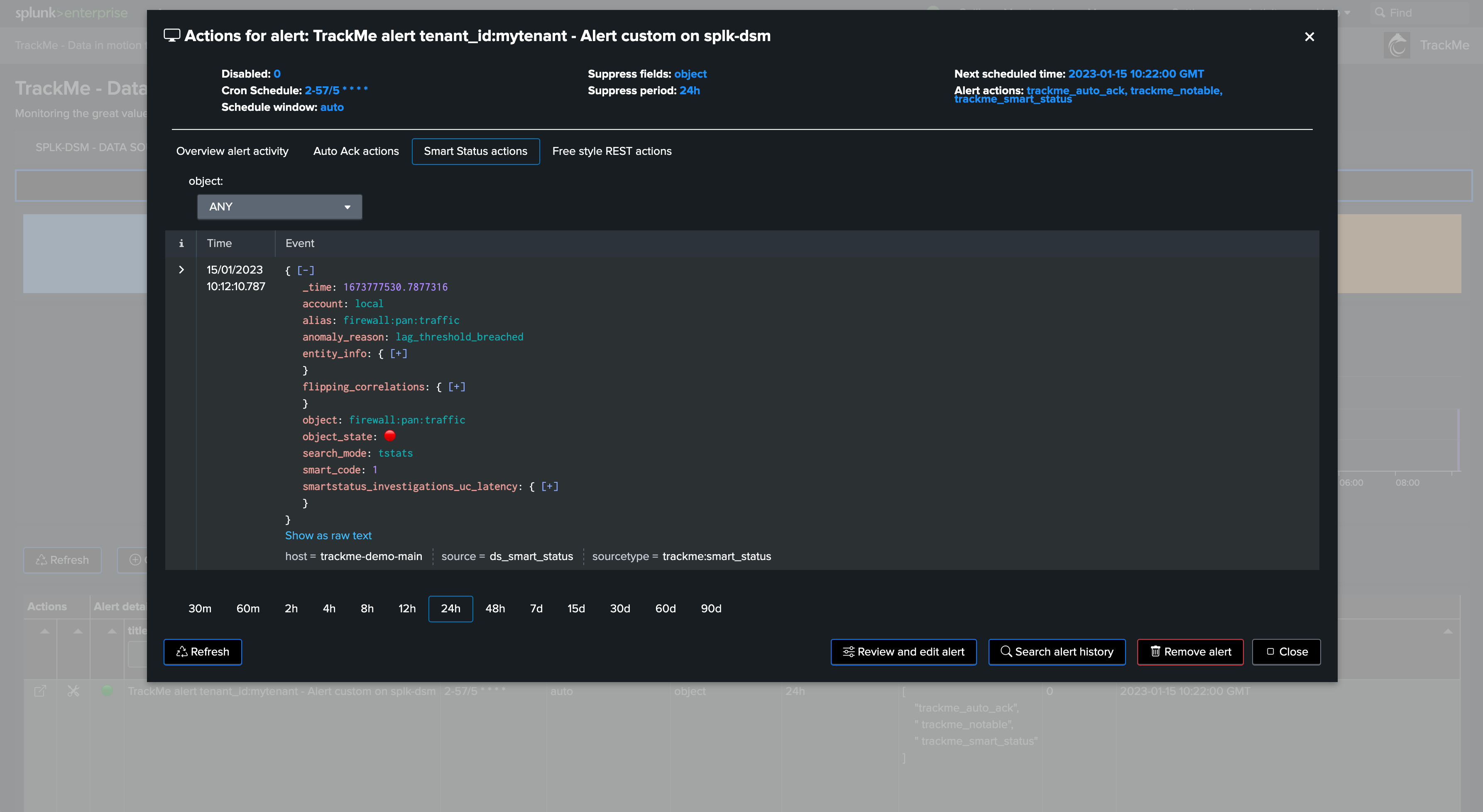

When the alert fires and triggers results, the user interface shows a summary of the triggers and the actions involved:

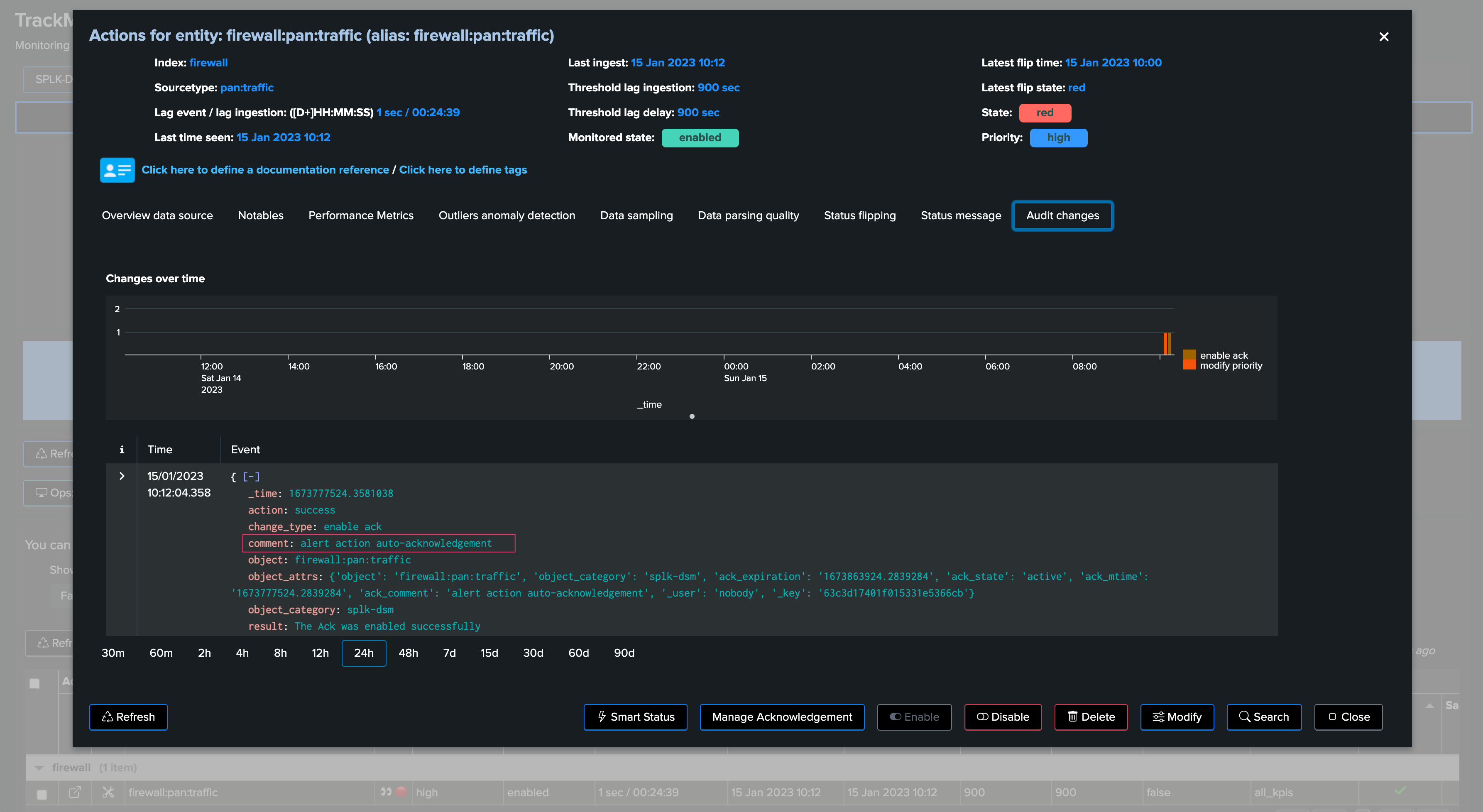

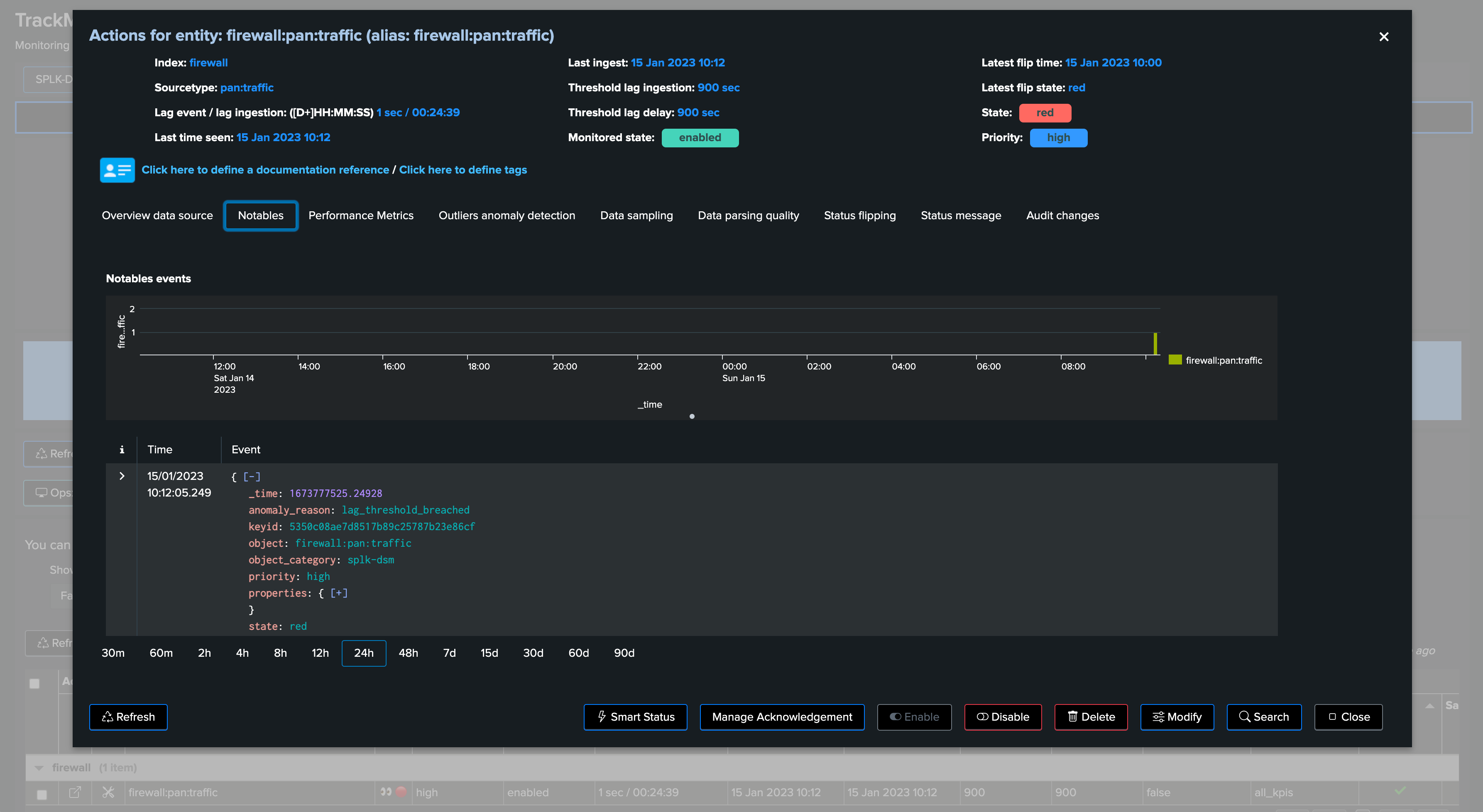

The alert user interface lets you investigate alert action traces:

From the entities perspective, we can for instance observe that the entity alert was acknowledged automatically:

A notable event was created:

As well as a TrackMe SmartStatus event:

Third Parties Alert Integration

Introduction to Third Parties Alerting Notification

Any TrackMe alert can be extended to send notifications to a third party, typically:

Sending emails to a recipient, such as the owner of the entities/service, or TrackMe administrators and analysts

Opening an incident to your ITSM such as ServiceNow or Atlassian Jira

Creating a message in a Slack or Microsoft Teams channel

Note

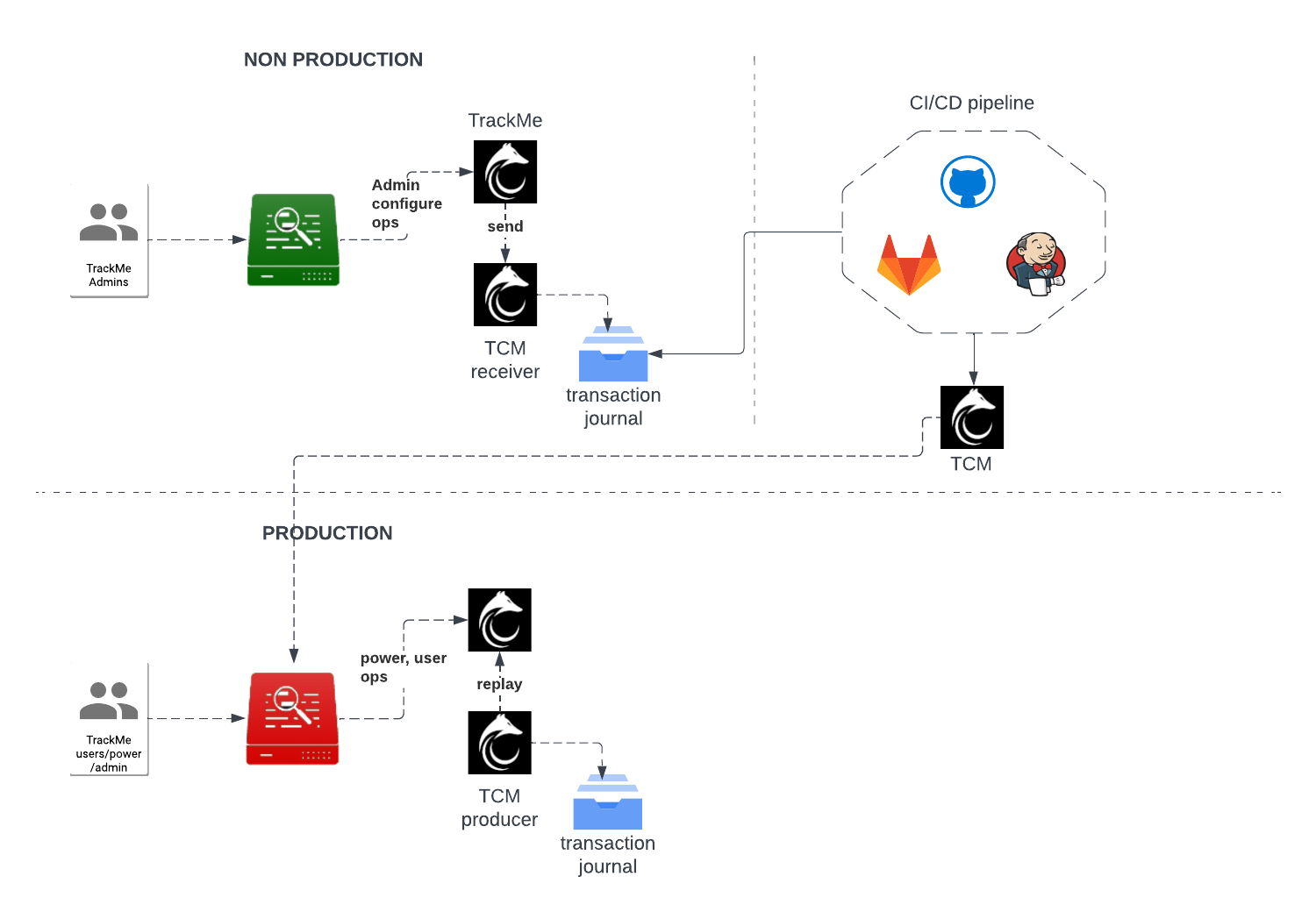

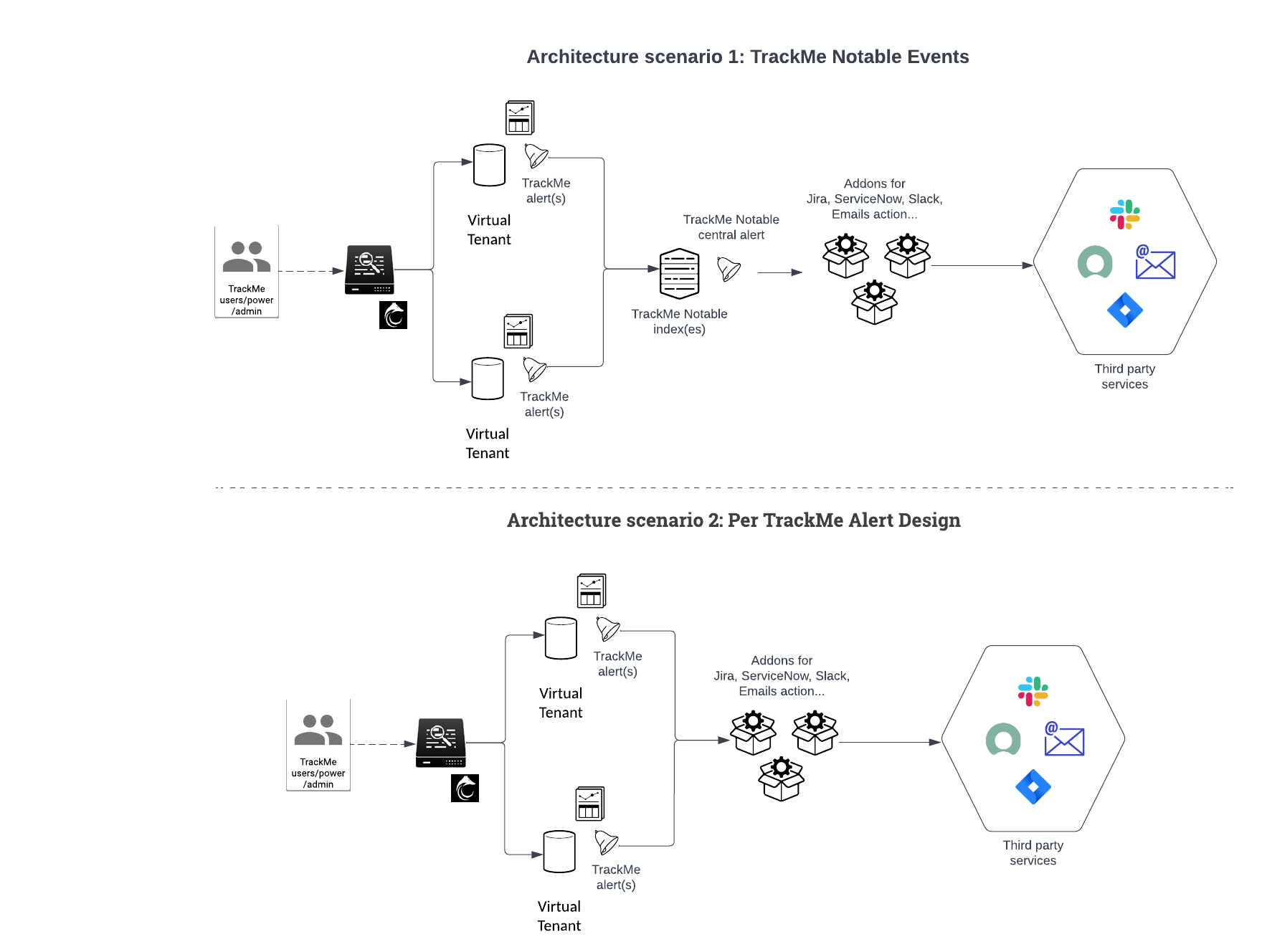

Two main alerting architecture designs can be implemented:

Architecture scenario 1: Notable based design. TrackMe alerts generate notable events; a centralised Splunk alert monitors TrackMe notables and runs alert actions for the third party

Architecture scenario 2: Per alert design. TrackMe alerts are configured to run the third party alert action and perform the associated action directly

While both options are possible, Architecture scenario 1, based on Notables, has multiple advantages:

Each TrackMe alert handles all entities, ensuring the creation of TrackMe notable events and other features such as the acknowledgment

Allows centralising the definition of what should lead to creating an incident, sending an email or posting a channel message

Notable events are normalised and provide all necessary information in a consistent fashion

For instance, you can have a single “TrackMe forward notable” alert which restricts to high priority entities and any additional logic of your own, easily in SPL

This logic can easily be maintained over time, without having to modify multiple alerts, and provides a deeper and more consistent control

This documentation covers both approaches in the next sections.

Architecture scenario 1: TrackMe Notable Events

Introduction: Anatomy of TrackMe Notable Events

Hint

Forwarding TrackMe Notable to Splunk SOAR

If you are looking to forward TrackMe Notable Events to Splunk SOAR, consult the following: Forwarding TrackMe Notable Events to SOAR

TrackMe Notable Events creation

TrackMe notable events are generated when a TrackMe alert triggers against one or more entities, relying on the built-in TrackMe Notable modular alert action.

This alert action configuration is also visible when editing a TrackMe alert:

Note

Notable events are ONLY created when a TrackMe alert is configured and triggers; in this scenario, all eligible entities should therefore be covered by an alert per tenant and component.

TrackMe Notable Events structure

TrackMe Notable events are JSON-formatted events that contain the following key information:

| Field | Description |
|---|---|
| tenant_id | The tenant identifier this Notable event is related to. |
| object | The object name for this entity, this basically is the name of entities in TrackMe |
| object_category | A TrackMe identifier for each component, this describes which type of TrackMe component this notable is related to. |
| keyid | The unique identifier for this object in the tenant / component central KVstore collection |
| priority | The priority definition for this object (low / medium / high / critical) |
| state | The status of the object (blue / green / orange / red) |
| anomaly_reason | A normalised field describing the reason behind the status of a given entity |
| status_message | A human readable detailed description of the reason why TrackMe generated an alert for this entity |
| timeStr | The time of the event in human readable format |
| drilldown_link | A link to the TrackMe Home user interface which filters on the tenant, component and entity, and automatically opens the entity overview screen (from TrackMe 2.0.88) |
| event_id | The unique identifier for this notable event, as the sha-256 hash of the notable event content (from TrackMe 2.1.0) |
| properties | The complete TrackMe object record in a nested JSON structure, as it was when the alert triggered |

Therefore, TrackMe Notable events contain all necessary information in a consistent and normalized manner, to interact with any third party.

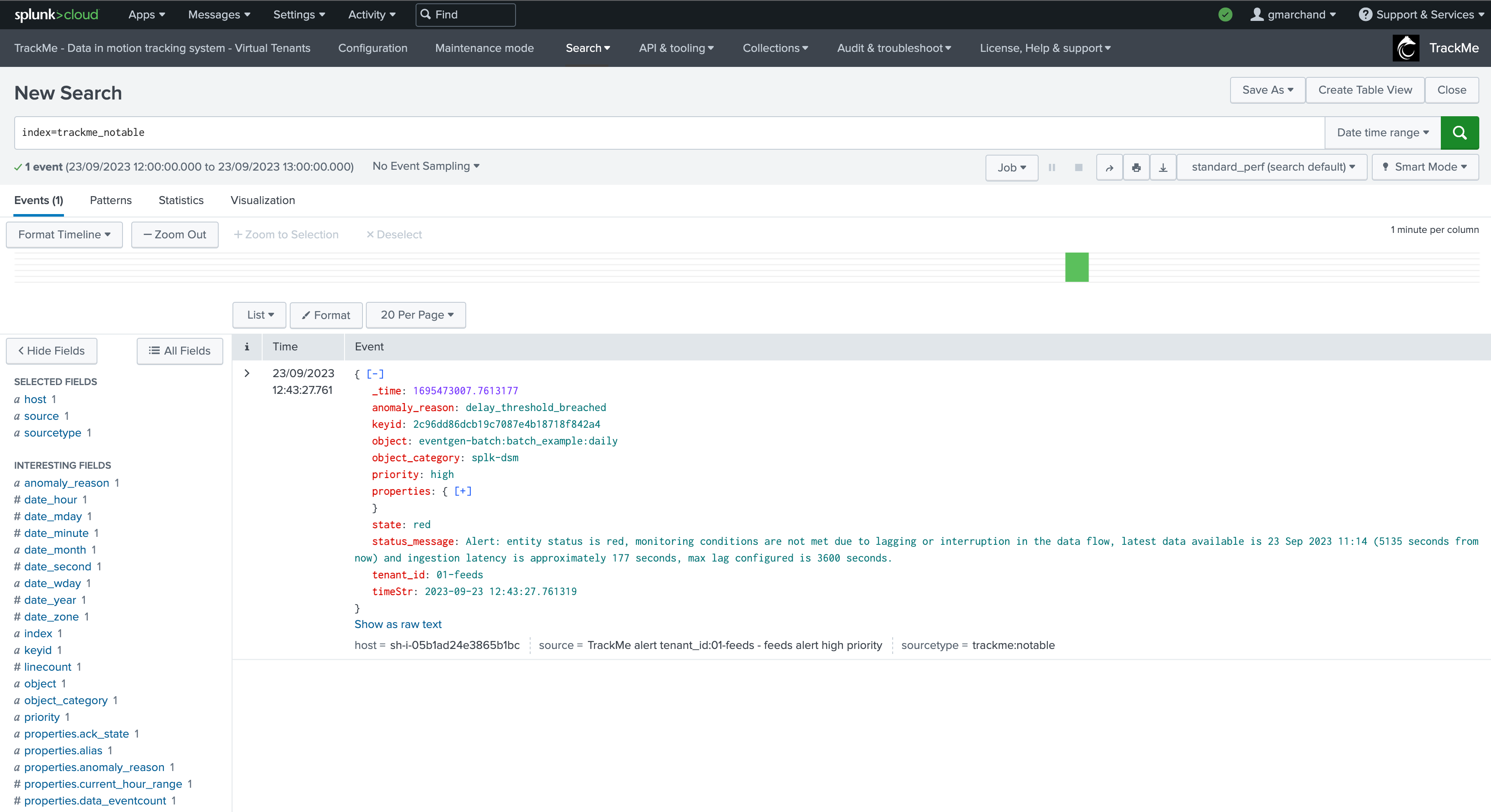

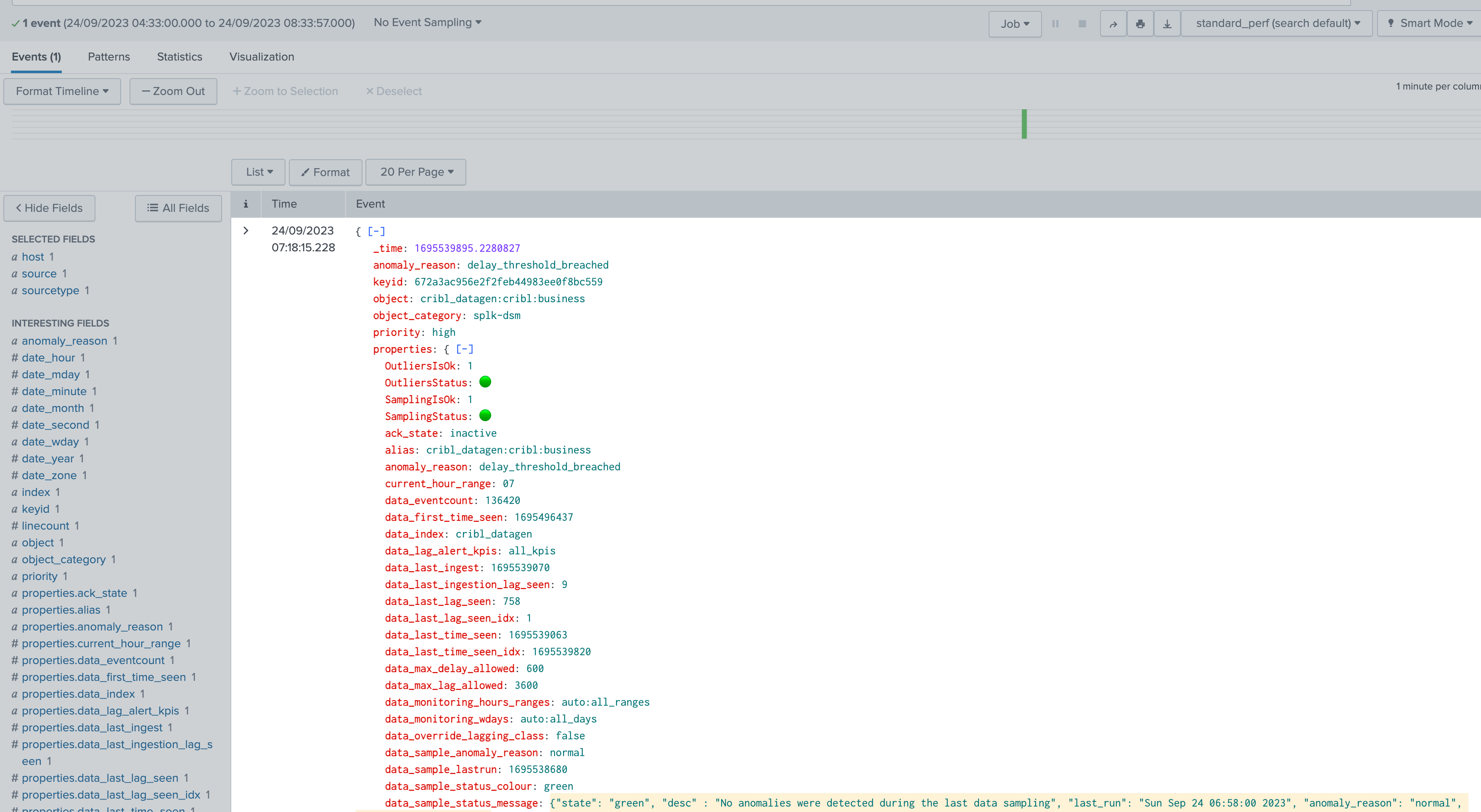

Example of a TrackMe Notable event:

The properties field contains the entire entity record as nested JSON; it is also extracted automatically so you can use fields as needed:
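Outside of Splunk, the same nested record can be flattened into dotted field names such as properties.data_index, which is the naming used in the searches later in this document. The Python sketch below mimics that naming; it is not Splunk's extraction code, and the sample notable is hypothetical.

```python
def flatten(record, prefix=""):
    """Flatten nested dictionaries into dotted field names."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        else:
            flat[name] = value
    return flat


# Hypothetical notable event, reduced to a few fields for illustration.
notable = {
    "tenant_id": "mytenant",
    "priority": "high",
    "properties": {"data_index": "main", "data_sourcetype": "syslog"},
}

fields = flatten(notable)
print(fields["properties.data_index"])
```

A third party receiving the raw JSON can use the same approach to address any attribute of the entity record.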

TrackMe Notable events are easy to access and review in TrackMe’s user interface:

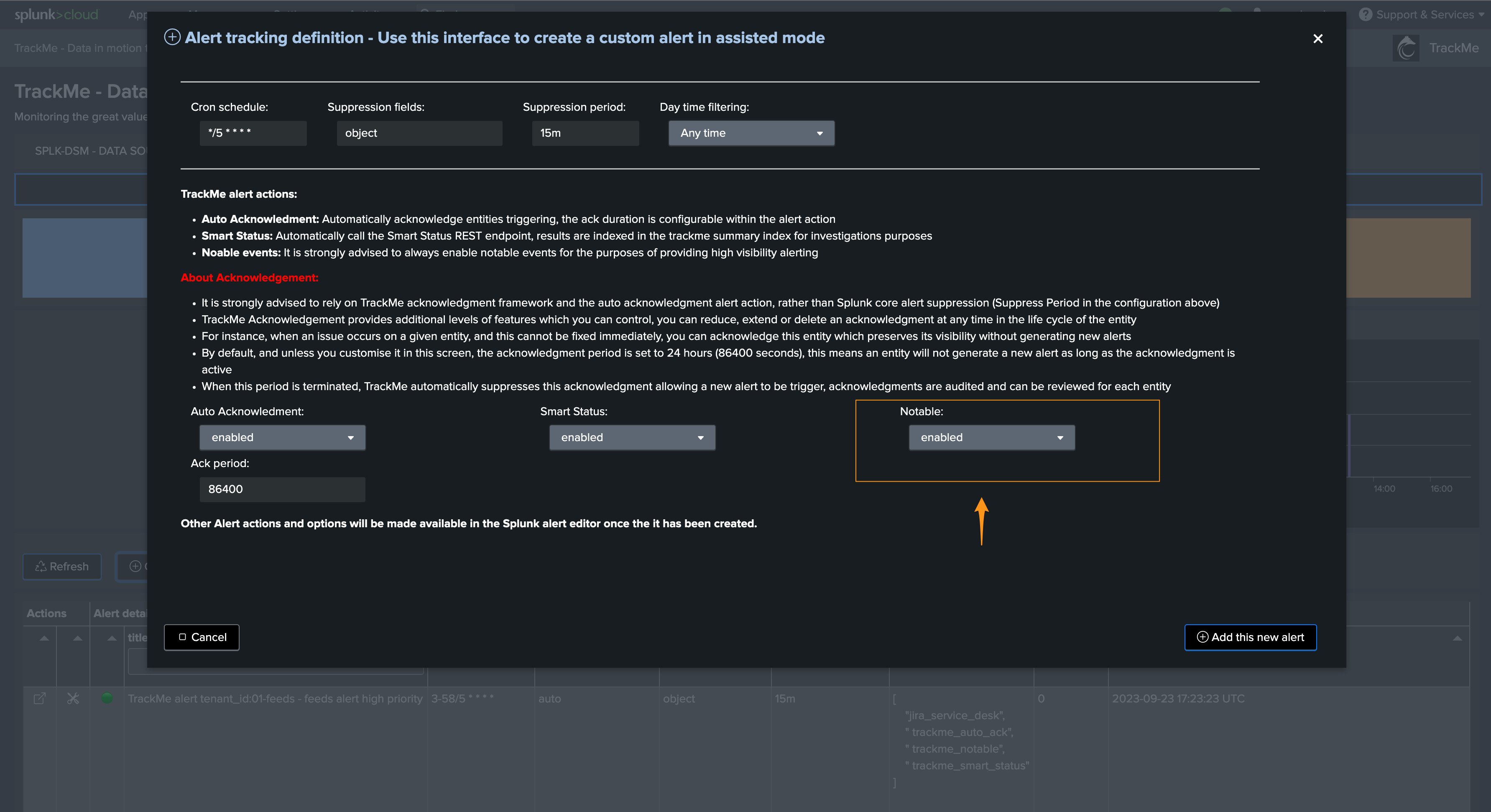

Step 1: Create TrackMe alerts for tenants and components in use

The first step is to create a TrackMe alert for every tenant and component that is in use.

It is not necessary at this stage to filter on concepts like the priority because filtering will be made while monitoring the TrackMe notable events.

For each tenant, access the TRACKING ALERTS tab, and use the wizard to create an alert; you can leave all options at their defaults:

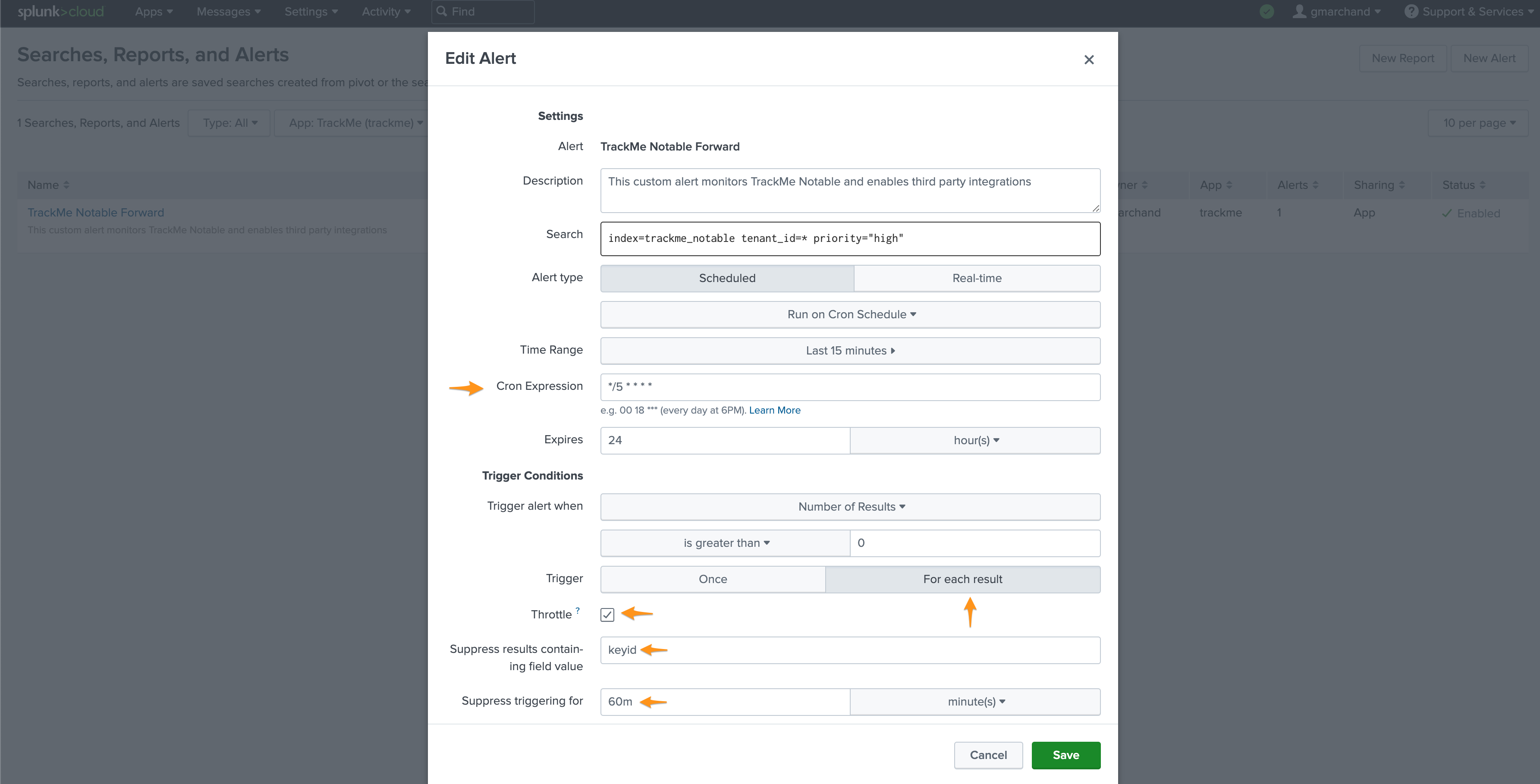

Step 2: Create a custom alert monitoring TrackMe notables

The second step is to create a custom Splunk alert which searches for TrackMe notables and enables third-party interactions, for instance:

As a basis:

index=trackme_notable tenant_id=* priority=*

| table tenant_id, keyid, event_id, object, object_category, state, priority, anomaly_reason, status_message, drilldown_link

For instance, a typical use case would be to create incidents only for high priority entities in alert:

index=trackme_notable tenant_id=* priority="high"

| table tenant_id, keyid, event_id, object, object_category, state, priority, anomaly_reason, status_message, drilldown_link

Note

In TrackMe, you can define different notable indexes per tenant; if you did so, you will want to adapt your search.

Create the Splunk alert:

Cron every 5 minutes: */5 * * * *

Earliest time: -15m

Trigger for each result

Throttle against the keyid for 60m
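The effect of triggering for each result while throttling on keyid can be pictured with a simplified Python model. This is an illustration of the intended behavior under stated assumptions, not Splunk's scheduler logic: results are (timestamp in seconds, keyid) pairs, and a result is suppressed when the same keyid already fired less than 60 minutes earlier.

```python
def apply_throttle(results, window_seconds=3600):
    """Return the results that would fire, suppressing repeats per keyid."""
    last_fired = {}
    fired = []
    for timestamp, keyid in sorted(results):
        previous = last_fired.get(keyid)
        if previous is None or timestamp - previous >= window_seconds:
            fired.append((timestamp, keyid))
            last_fired[keyid] = timestamp
    return fired


# Hypothetical notable results: (seconds since start, keyid).
results = [(0, "a"), (300, "a"), (600, "b"), (3600, "a"), (3900, "b")]
print(apply_throttle(results))
```

With a 15 minute lookback and a 5 minute schedule, the same notable is seen by several executions; throttling on keyid is what prevents the third party action from running more than once per entity within the hour.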

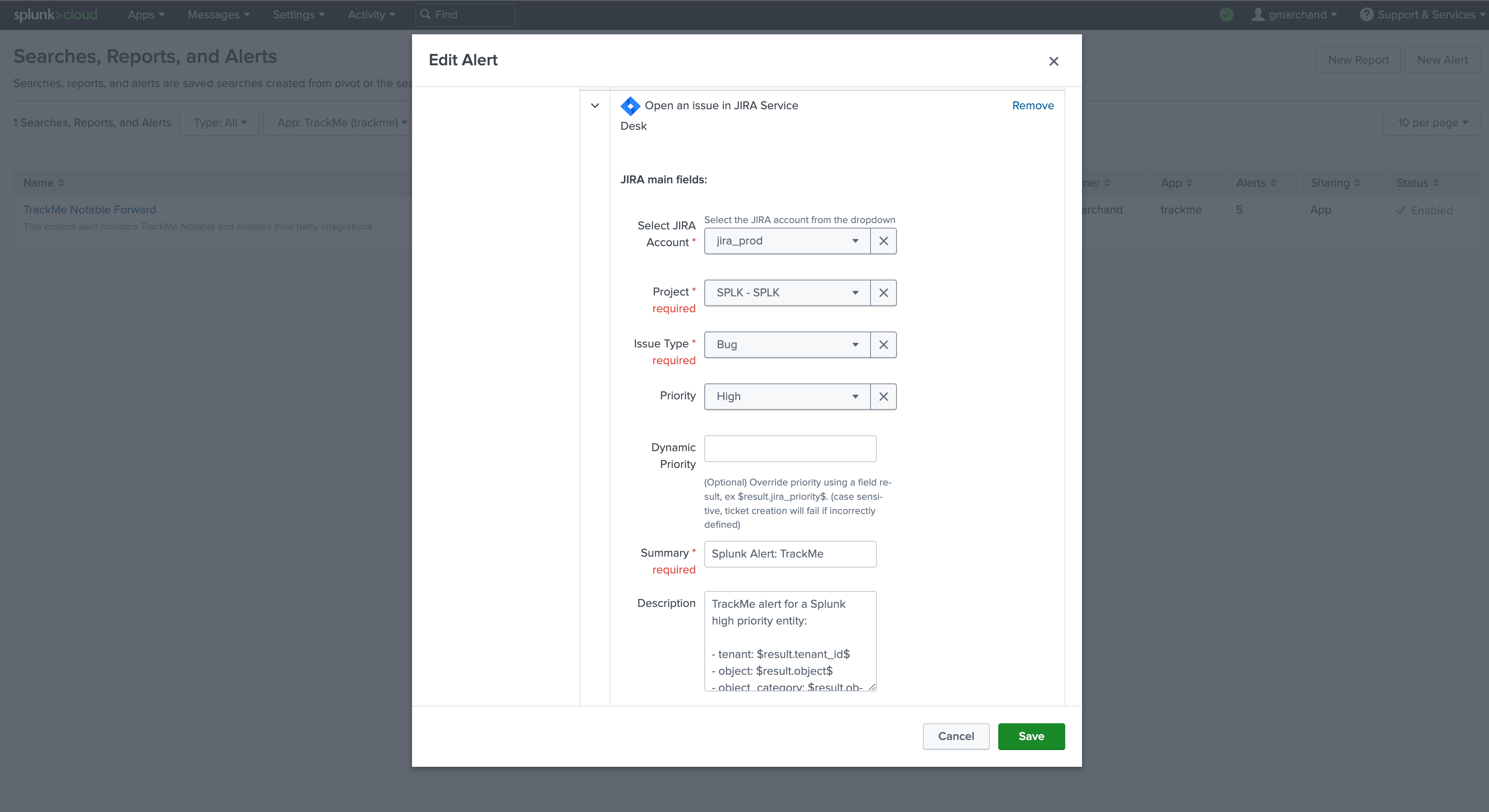

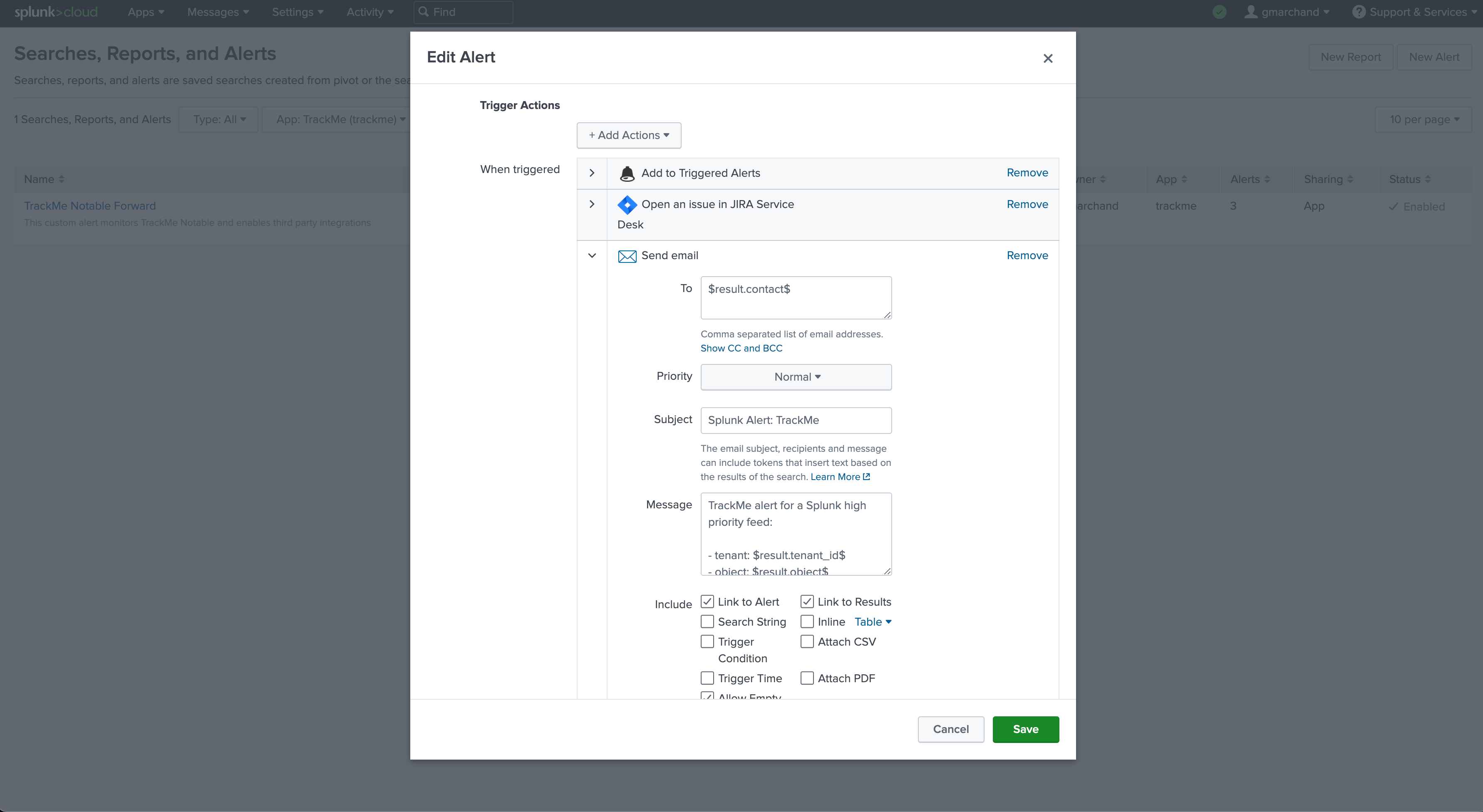

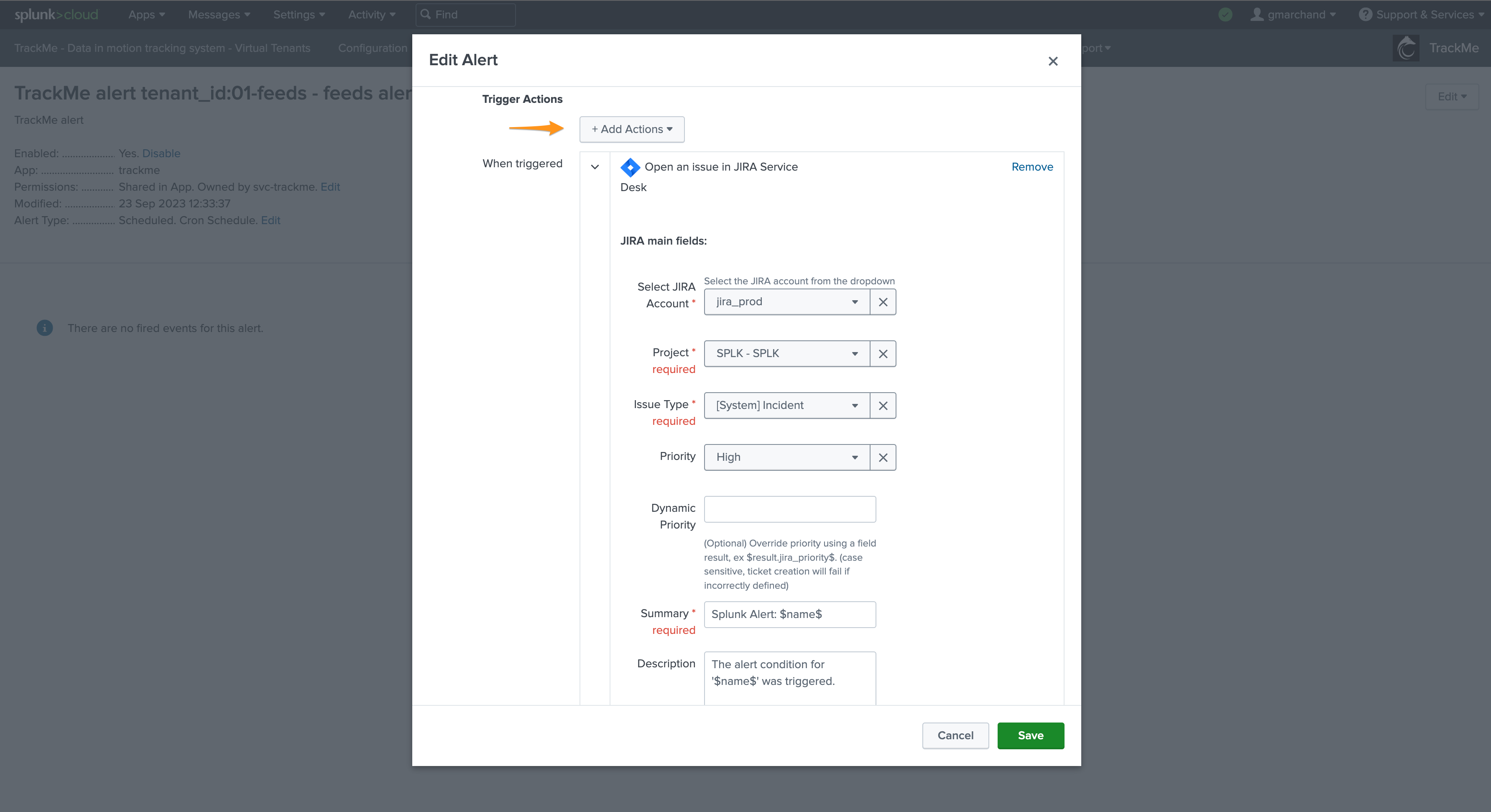

Then enable the third party alert action. For example, we will enable creating an incident in Jira; the following shows an example of content for the alert, which uses token replacements:

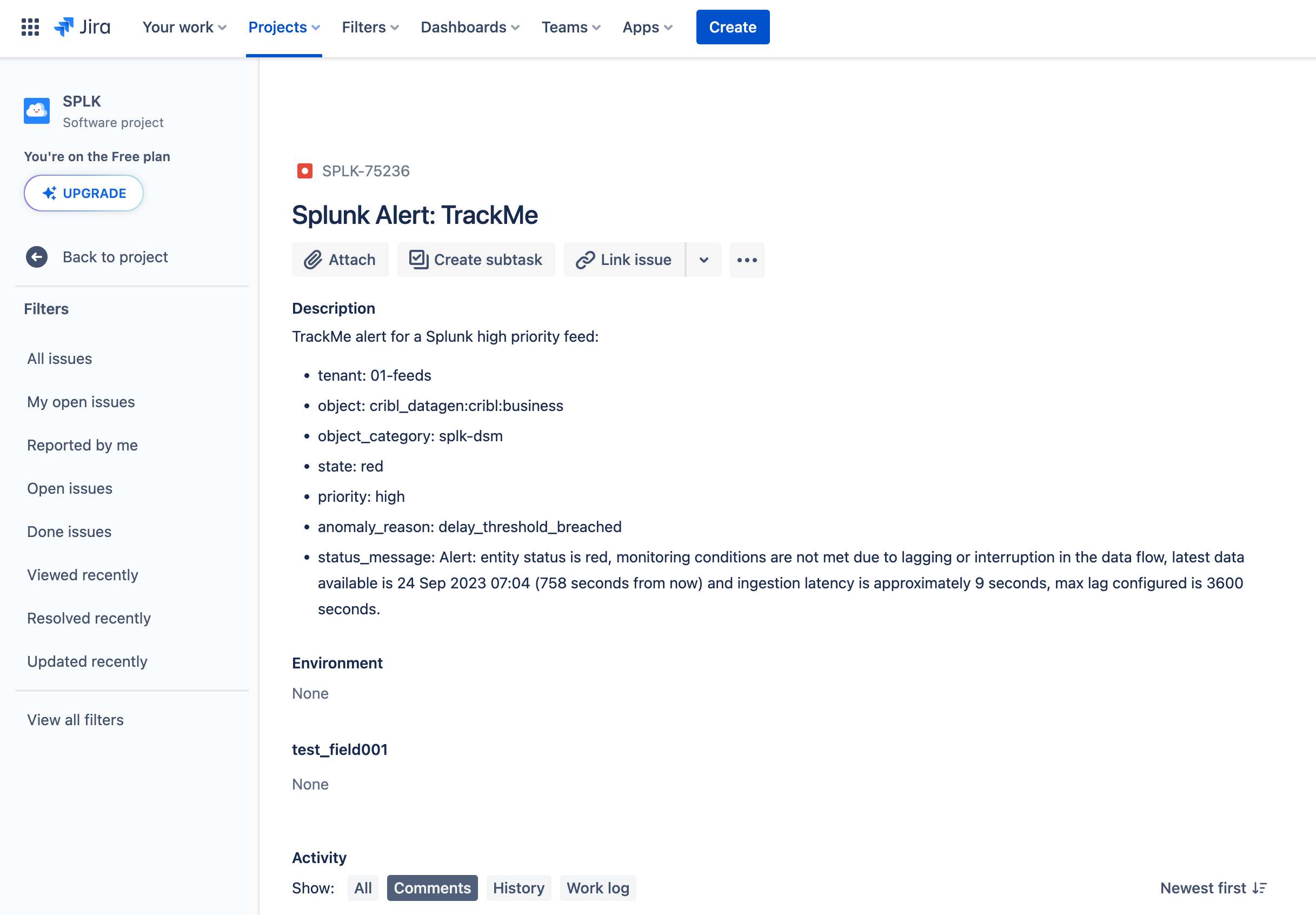

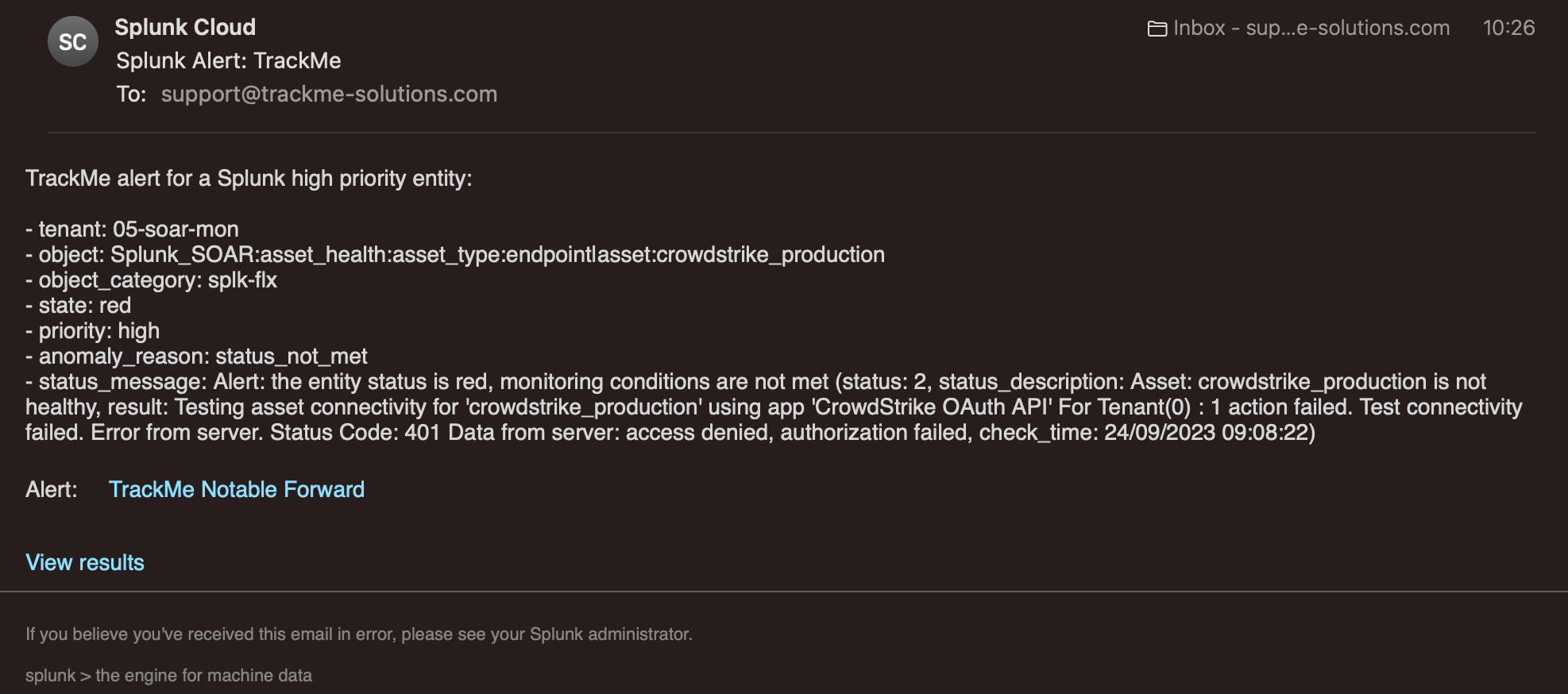

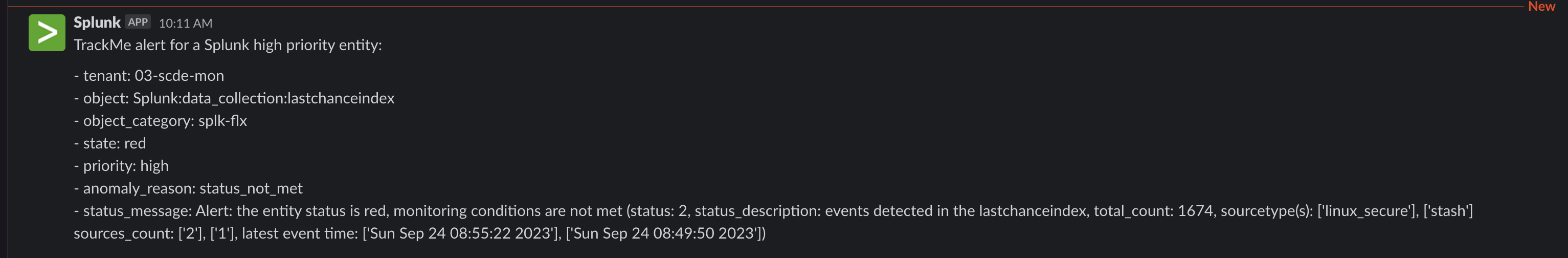

TrackMe alert for a Splunk high priority entity:

- tenant: $result.tenant_id$

- object: $result.object$

- object_category: $result.object_category$

- state: $result.state$

- priority: $result.priority$

- anomaly_reason: $result.anomaly_reason$

- status_message: $result.status_message$

- drilldown_link: $result.drilldown_link$

Hint

Re-assigning to a service account

As a good practice, you should re-assign the created Splunk alert to the TrackMe service account user, or nobody.

You should avoid running alerts on behalf of normal users.

Adding third parties actions:

Atlassian Jira example:

Note: this integration relies on the following Add-on for Jira: https://splunkbase.splunk.com/app/4958

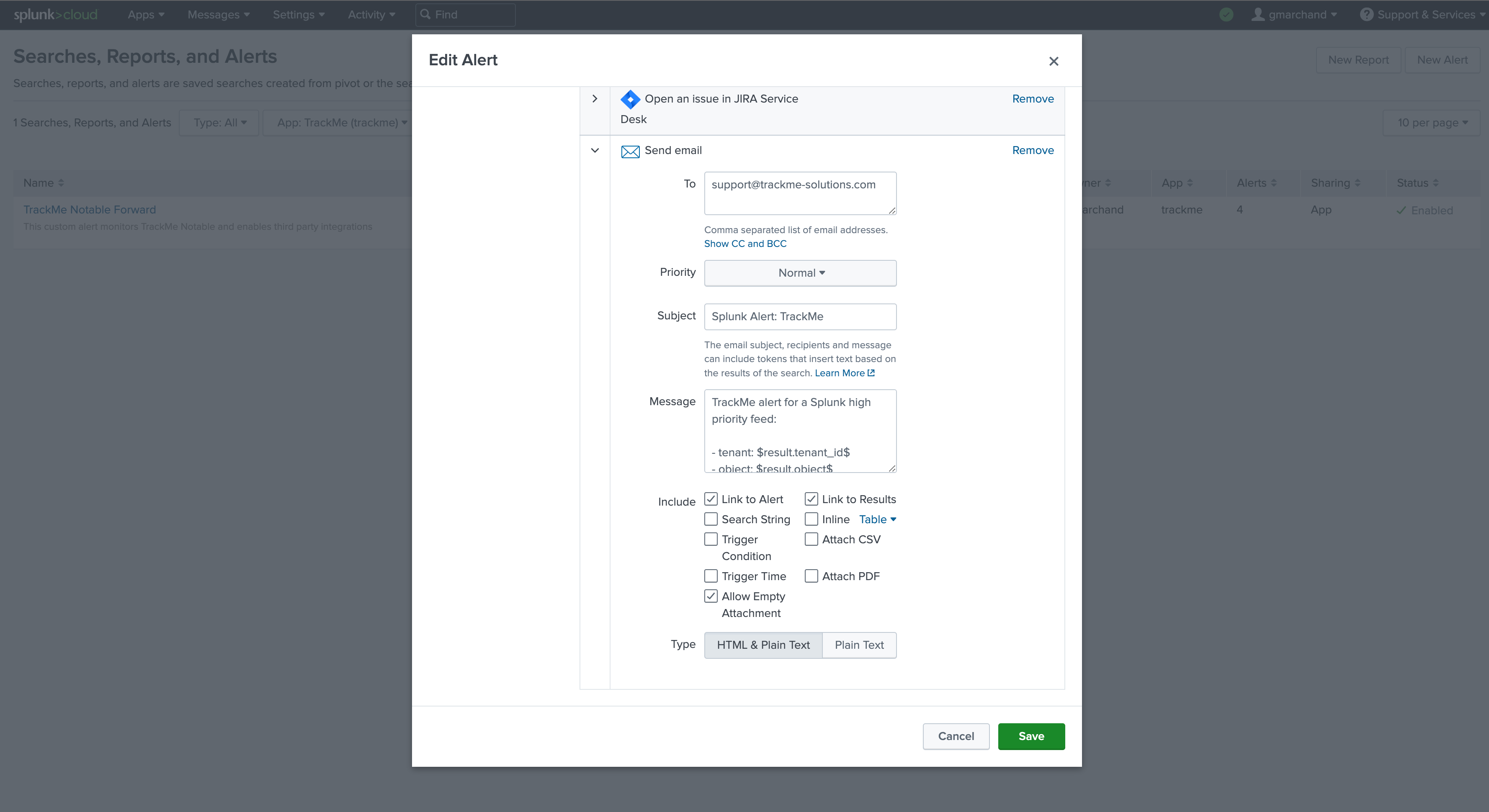

Email example:

Note: Sending emails is a built-in alert action in Splunk

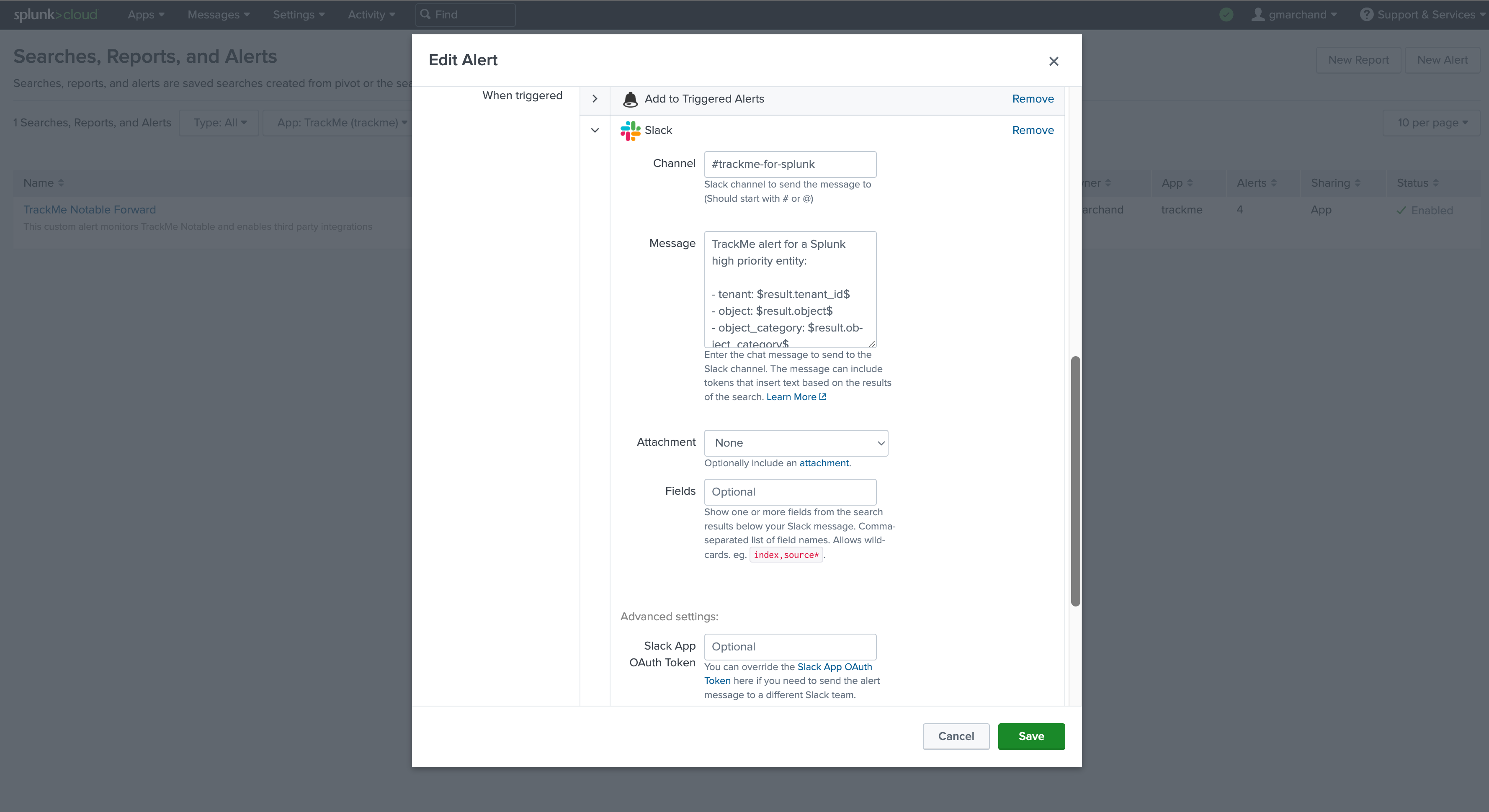

Slack example:

Note: this integration relies on the following Add-on for Slack: https://apps.splunk.com/app/2878

Optional: Notable events enrichment

When using Notable events as the central means for third party alerting, further logic can easily be implemented such as custom normalisation and enrichment.

With enrichment, for instance, you may want to leverage your CMDB knowledge to add context such as the owner or the contact email address for the notification. Many options and designs are possible.

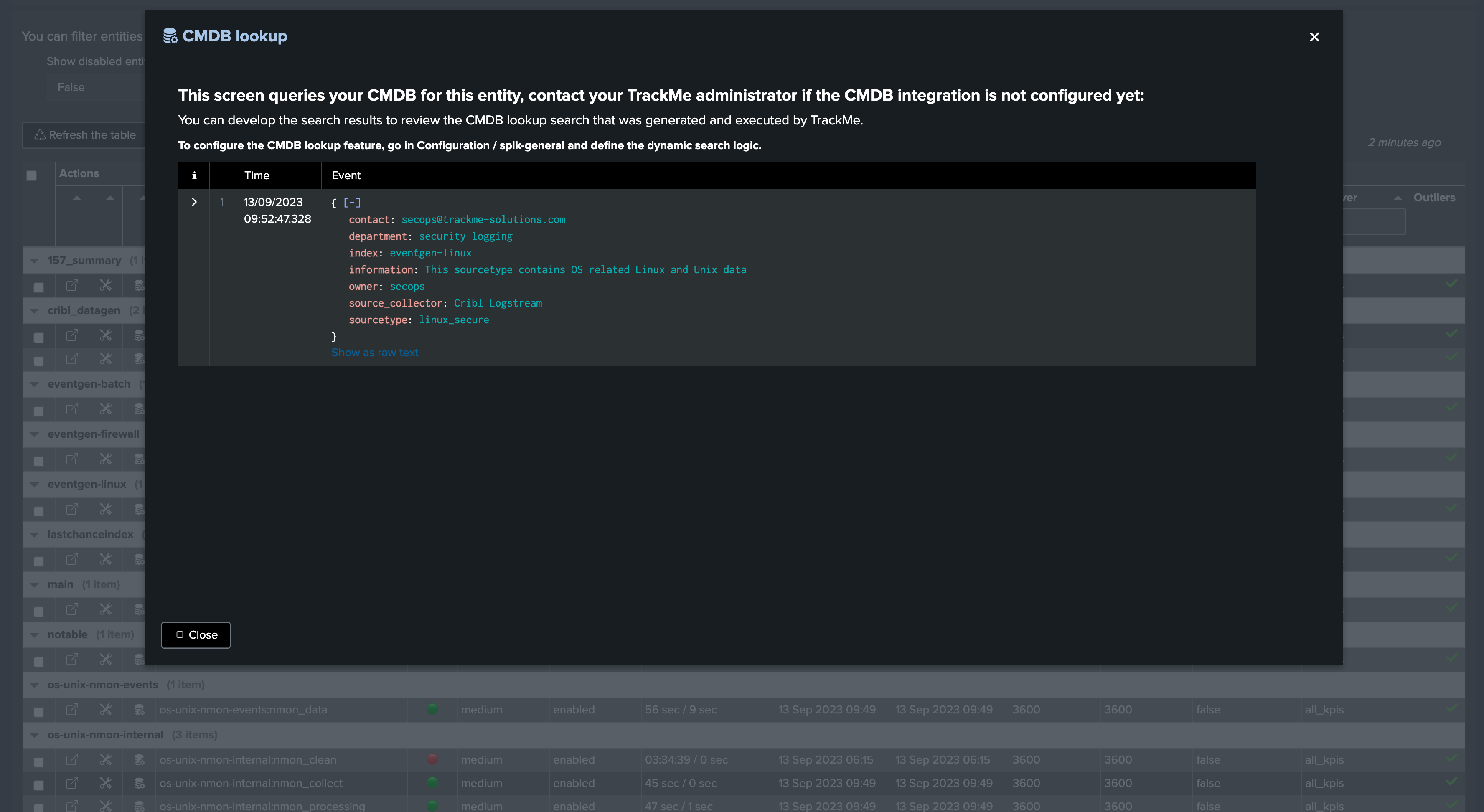

TrackMe makes it easy to look up your CMDB to provide handy context information in the user interface, and you can leverage the same content from the central Notable alert. For more information about the CMDB Lookup integrator, see: CMDB Lookup Integration

CMDB integration in the user interface:

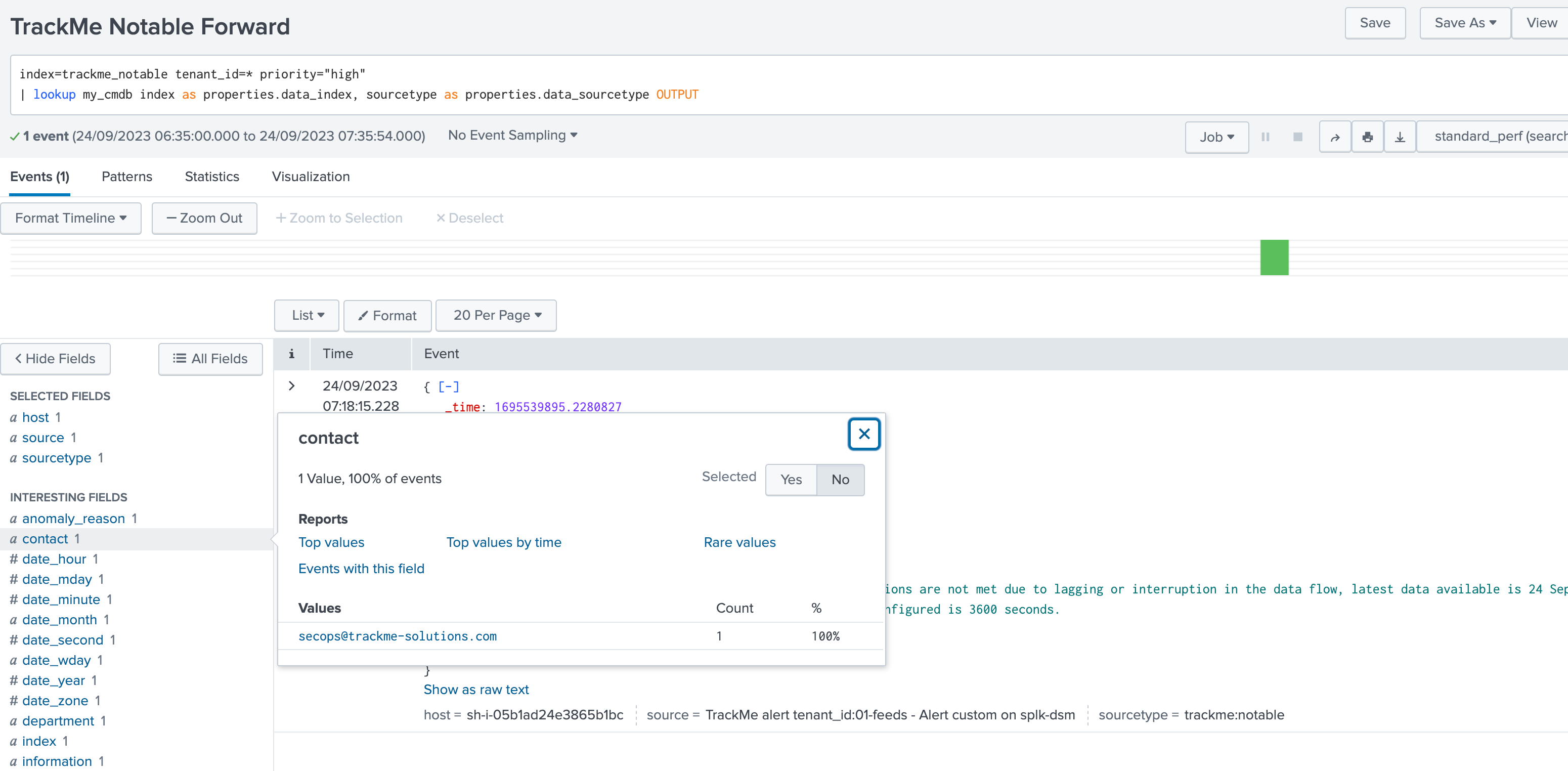

For instance, we could update our Notable event alert:

index=trackme_notable tenant_id=* priority="high"

| lookup my_cmdb index as properties.data_index, sourcetype as properties.data_sourcetype OUTPUT

| table tenant_id, keyid, event_id, object, object_category, state, priority, anomaly_reason, status_message, drilldown_link

Enrichment information is automatically available:

Any of these fields can be used in your alert configuration using tokens. For instance, we could update the recipient email address and add some logic to handle unknown entities:

index=trackme_notable tenant_id=* priority="high"

| lookup my_cmdb index as properties.data_index, sourcetype as properties.data_sourcetype OUTPUT

| eval contact=if(isnull(contact), "support@trackme-solutions.com", contact)

| table tenant_id, keyid, event_id, object, object_category, state, priority, anomaly_reason, status_message, drilldown_link, contact

The alert can then use this field as a token, $result.contact$, for the recipient address instead of a static email address.
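The lookup and fallback logic above can also be sketched in plain Python, which may help when reasoning about what the contact field will contain. This is only an illustration: the CMDB dictionary, its keys and the example address are hypothetical, and the function mimics the SPL rather than reproducing how Splunk or TrackMe implement it.

```python
# Illustrative only: mimics the SPL "lookup" + "eval contact=if(isnull(...))" steps.
DEFAULT_CONTACT = "support@trackme-solutions.com"

# Hypothetical CMDB content, keyed by (index, sourcetype).
CMDB = {
    ("linux_os", "syslog"): "linux-team@example.com",
}


def enrich(event, cmdb=CMDB, default_contact=DEFAULT_CONTACT):
    """Return a copy of the event where the 'contact' field is always set."""
    key = (
        event.get("properties.data_index"),
        event.get("properties.data_sourcetype"),
    )
    enriched = dict(event)
    contact = cmdb.get(key)
    # Unknown entities (no CMDB match) fall back to the default contact.
    enriched["contact"] = default_contact if contact is None else contact
    return enriched
```

An entity with no CMDB match therefore still carries a usable recipient, which is the purpose of the eval statement in the search.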

Architecture scenario 2: Per TrackMe Alert Design

Another design choice is to configure third-party alert actions on a per TrackMe alert basis, instead of relying on the Notable events.

Note

This design is also a valid choice, and possibly an easier and more logical one. However, it lacks some of the advantages of a TrackMe Notable based design:

Each alert needs to be configured individually, which can be more challenging to maintain over time

Entities that are not covered by any TrackMe alert will not lead to TrackMe alert actions being executed (Notable, Ack, SmartStatus), for instance if you restrict alerts using entity priority or tags and some entities are out of scope

Normalisation, enrichment or the introduction of custom SPL logic is decentralised, as opposed to the central alert based on Notables

Finally, you may need to create more alerts (for instance to cover entities by priority and have alert actions executed for all entities)

For the purposes of this documentation, the following scenario will be implemented:

A first alert is created which targets high priority entities; when it triggers, a notification is sent using a Splunk alert action to open an incident in our ITSM tool (we will use Jira, but this is applicable to any Splunk supported third party)

A second alert is created which targets medium priority entities; this time an email will be sent when the alert triggers

Entities set as low are not subject to a third party integration; however, an alert should also be active for notable events and other TrackMe alert actions to be triggered
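As a planning aid, the scenario can be written down as a small mapping from entity priority to third-party actions. This is a hypothetical sketch for reasoning about coverage only: the action names are labels chosen for the example, not TrackMe or Splunk settings.

```python
# Hypothetical plan mirroring the scenario above: one TrackMe alert per priority.
ALERT_PLAN = {
    "high": ["jira"],     # open an incident in the ITSM tool
    "medium": ["email"],  # send an email notification
    "low": [],            # no third-party action; the alert stays active for TrackMe actions
}


def third_party_actions(priority):
    """Return the third-party alert actions planned for a given entity priority."""
    if priority not in ALERT_PLAN:
        # A priority without an alert would not trigger any TrackMe alert action.
        raise ValueError(f"no TrackMe alert covers priority {priority!r}")
    return ALERT_PLAN[priority]
```

The low entry is deliberately present with an empty list: the alert still has to exist so that Notable events and the other TrackMe alert actions are generated for those entities.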

Step 1: Create a TrackMe alert for high priority entities

We start by creating an alert in TrackMe for high priority entities:

Repeat the same process for the other priority levels. All entities should be covered by alerts, so in this example we need 3 alerts in total.

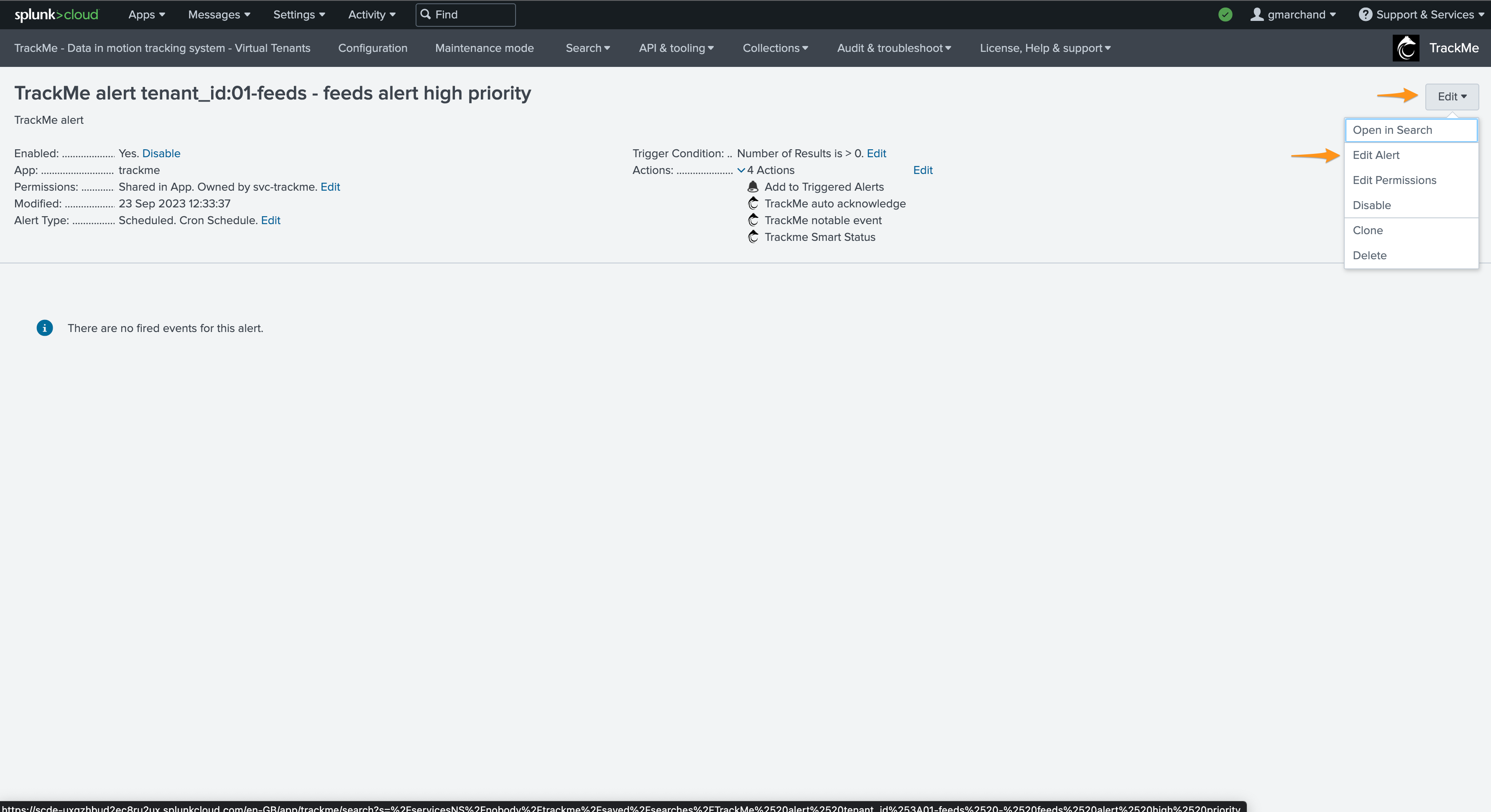

Step 2: Enable alert actions per alert

The next step is to update TrackMe alerts to include the alert actions to be enabled:

This opens the Splunk alert editing screen, where we will add a new alert action:

For instance, we add the Jira alert action and define some of the most used fields returned by TrackMe:

These steps are the same as in architecture scenario 1 (Notable based); consult the previous section for more details.

Context body example:

TrackMe alert for a Splunk high priority entity:

- tenant: $result.tenant_id$

- object: $result.object$

- object_category: $result.object_category$

- state: $result.state$

- priority: $result.priority$

- anomaly_reason: $result.anomaly_reason$

- status_message: $result.status_message$

- drilldown_link: $result.drilldown_link$
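Splunk resolves each $result.fieldname$ token from the first result of the search when the alert action runs. The Python sketch below imitates that substitution so a context body can be previewed offline. It is an approximation for illustration: the render function and the sample row are hypothetical, and rendering a missing field as an empty string is an assumption of the sketch.

```python
import re

# Matches tokens such as $result.tenant_id$ and captures the field name.
TOKEN = re.compile(r"\$result\.([A-Za-z0-9_.]+)\$")


def render(template, first_result):
    """Replace $result.<field>$ tokens with values from the first result row."""
    # Assumption of this sketch: an unknown field renders as an empty string.
    return TOKEN.sub(lambda m: str(first_result.get(m.group(1), "")), template)


body = "- tenant: $result.tenant_id$\n- state: $result.state$"
sample_row = {"tenant_id": "mytenant", "state": "red"}  # hypothetical values
preview = render(body, sample_row)
```

Previewing the body this way makes it easier to spot a misspelled field name before the alert fires.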

The same process needs to be repeated for every alert that should monitor TrackMe entities, per tenant and component.

When alerts trigger, Splunk automatically executes the alert actions according to your settings.

Deleting TrackMe alerts

Warning

TrackMe alerts are orchestrated by TrackMe and should be deleted in TrackMe.

When you create an alert in TrackMe, TrackMe keeps a record of it.

This allows TrackMe to automatically manage assignment and Role Based Access Control settings, according to the Virtual Tenant setup.

Therefore, you must delete a TrackMe alert via TrackMe, and not directly via Splunk.

Deleting an alert from the user interface

You can delete TrackMe alerts from the user interface. Because TrackMe maintains the knowledge of objects associated with a given Virtual Tenant, alerts should be deleted through the user interface or the REST API:

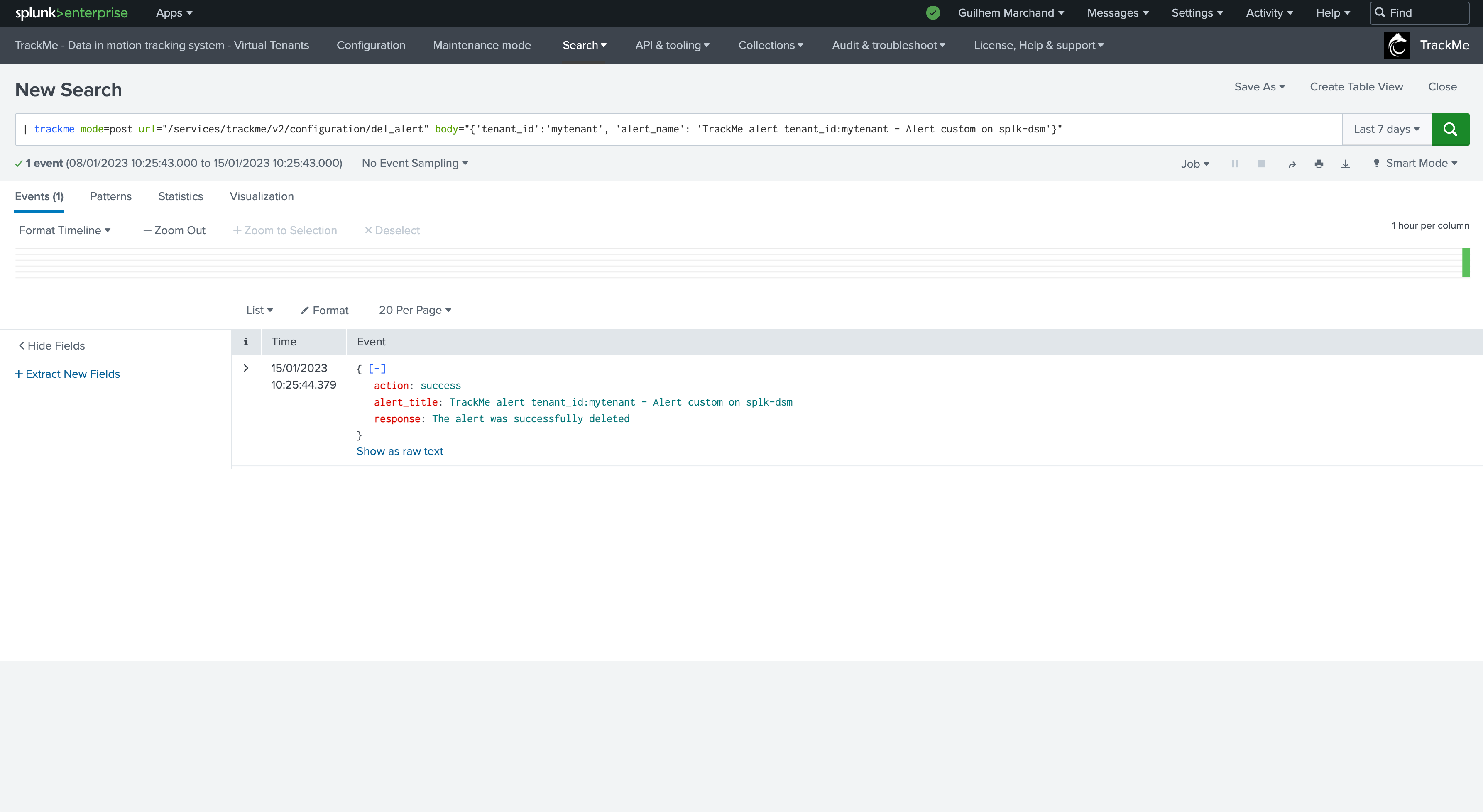

Deleting an alert from the REST API

You can also delete an alert using the REST API and the following endpoint:

| trackme mode=post url="/services/trackme/v2/alerting/admin/del_alert" body="{'tenant_id':'mytenant', 'alert_name': 'TrackMe alert tenant_id:mytenant - Alert custom on splk-dsm'}"
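When several alerts need to be removed, the search above can be generated rather than typed by hand. The helper below is a minimal sketch that only builds the search string in the same shape as the example. The function name is hypothetical, and rejecting quotes in the values is a simplification of this sketch, not a documented TrackMe rule.

```python
ENDPOINT = "/services/trackme/v2/alerting/admin/del_alert"


def build_del_alert_search(tenant_id, alert_name):
    """Build the '| trackme' search that deletes one alert, as a string."""
    for value in (tenant_id, alert_name):
        # Quotes would break the quoting of the body argument in this sketch.
        if "'" in value or '"' in value:
            raise ValueError("quotes in values are not handled by this sketch")
    body = "{" + f"'tenant_id': '{tenant_id}', 'alert_name': '{alert_name}'" + "}"
    return f'| trackme mode=post url="{ENDPOINT}" body="{body}"'


search = build_del_alert_search(
    "mytenant",
    "TrackMe alert tenant_id:mytenant - Alert custom on splk-dsm",
)
```

The resulting string can be pasted into the Splunk search bar. The helper does not contact Splunk itself.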